Thomas Fel

@napoolar

Explainability, Computer Vision, Neuro-AI. Research Fellow @KempnerInst, @Harvard. Prev. @tserre lab, @Google, @GoPro. Crêpe lover.

ID: 831571293392732162

https://thomasfel.me 14-02-2017 18:30:21

1.1K Tweets

1.1K Followers

650 Following

Do Intermediate Tokens Produced by LRMs (need to) have any semantics? Our new study "Beyond Semantics: The Unreasonable Effectiveness of Reasonless Intermediate Tokens" led by kstechly, Karthik Valmeekam Atharva & Vardhan Palod dives into this question 🧵 1/

Shreyas Gite Ville 🤖 the robotics field needs an observability layer to scan models and datasets. the most convenient way would be an extension of CRAFT for multimodal inputs. huggingface.co/papers/2211.10… Thomas Fel

Looking forward to speaking today at the Kempner Institute at Harvard University NeuroAI conference on theories of learning, creativity and reasoning. Looks like a great set of speakers. Virtual attendance is possible: kempnerinstitute.harvard.edu/frontiers-in-n…

Around CVPR for the next 2 days—if you're into interpretability, SAEs, complexity, or just wanna know how cool Kempner Institute at Harvard University is, hit me up 👋

NEW: Asma Ghandeharioun of Google DeepMind demonstrates techniques to enhance interpretability of large language models, including the Patchscopes framework. Watch the video: youtu.be/Og8FTqUrvtA #NeuroAI2025 #AI #ML #LLMs #NeuroAI

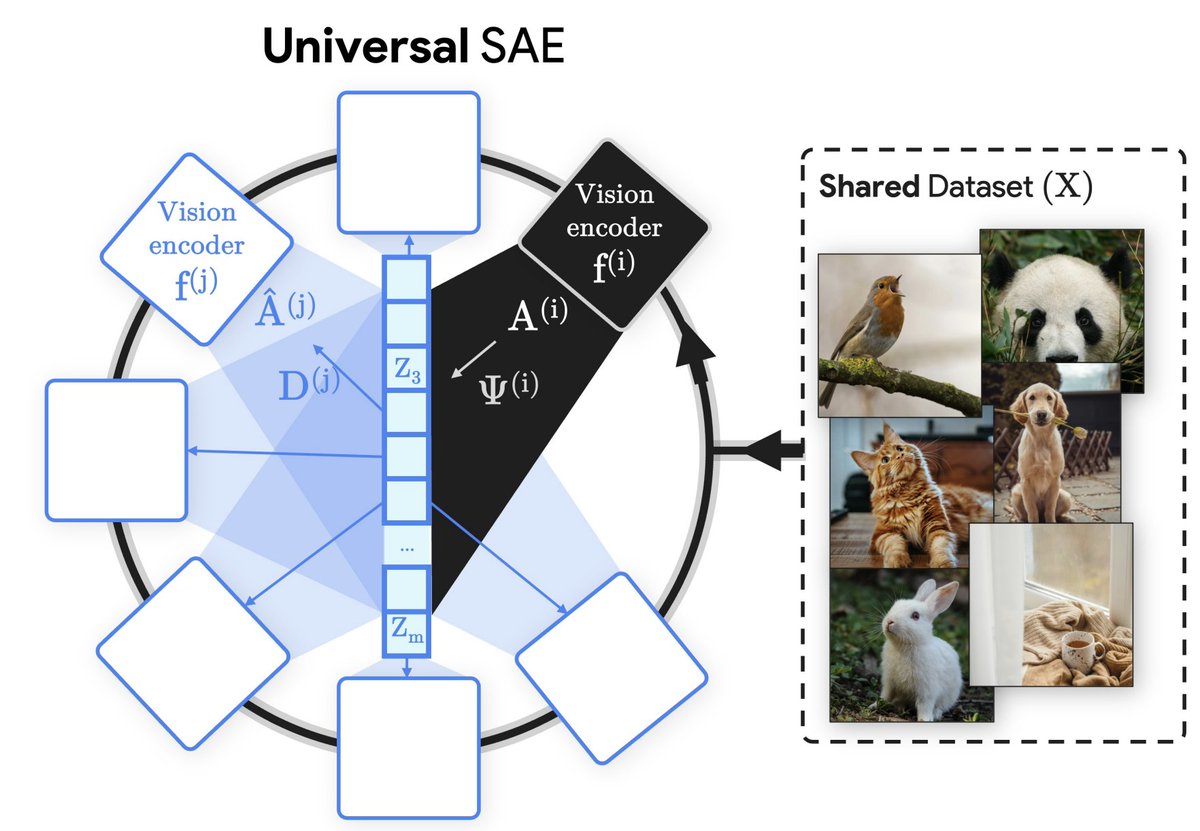

*Universal Sparse Autoencoders* by Harry Thasarathan, Thomas Fel, Matthew Kowal, and Kosta Derpanis. They train a shared SAE latent space on several vision encoders at once, showing, e.g., how the same concept activates in different models. arxiv.org/abs/2502.03714

NEW: George Alvarez of Harvard Psychology and the #KempnerInstitute shows how long-range feedback projections can enhance the alignment between #ML models and human visual processing. Watch the video: youtu.be/Ju_eD0Jwa8Q #NeuroAI2025 #AI #neuroscience #NeuroAI

The Kempner Institute congratulates its research fellows Isabel Papadimitriou and Jenn Hu (Jennifer Hu) on their faculty appointments (UBC Linguistics & JHU Cognitive Science) and celebrates their innovative research. Read more here: bit.ly/448heBy #AI #LLMs