Chirag Nagpal

@nagpalchirag

ID: 107757999

http://cs.cmu.edu/~chiragn 23-01-2010 16:43:38

1.1K Tweets

1.1K Followers

728 Following

Excited to share that the Machine Learning and Optimization team at Google DeepMind India is hiring Research Scientists and Research Engineers! If you're passionate about cutting-edge AI research and building efficient, elastic, and safe LLMs, we'd love to hear from you. Check

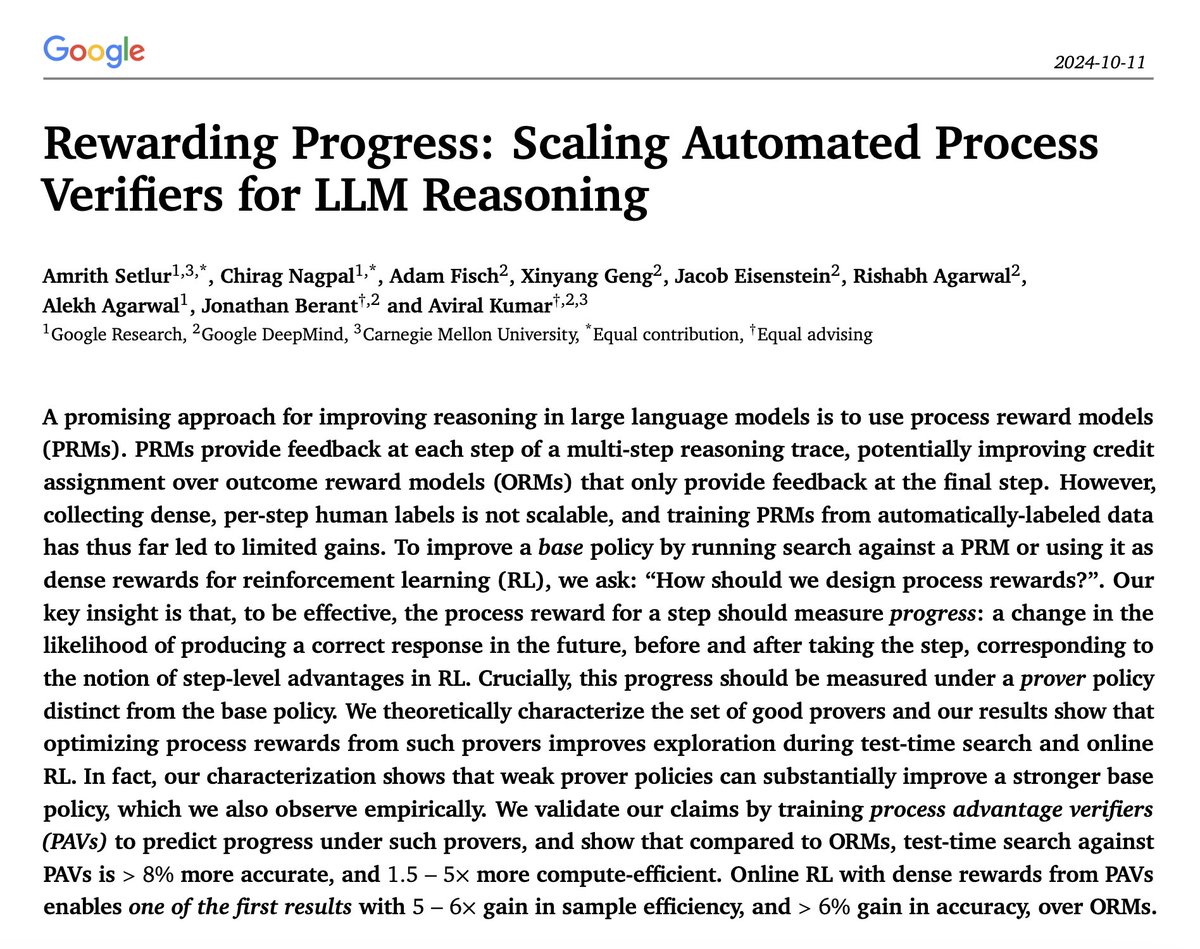

🚨New paper led by Amrith Setlur on process rewards for reasoning! Our PRMs, which model a specific notion of "progress" reward (NO human supervision), improve: - compute efficiency of search by 1.5-5x - online RL by 6x - 3-4x vs past PRM results arxiv.org/abs/2410.08146 How? 🧵👇

My student's paper was accepted at a NeurIPS Conference workshop, but the CanadianPM authorities denied his visa, saying that he doesn't have ties outside Canada (his entire family is in India) and that attending the most important machine learning conference isn't legitimate business. 😭