Mohsen Fayyaz

@mohsen_fayyaz

PhD Student @ UCLA

#NLProc #MachineLearning

ID: 984716101211828224

http://mohsenfayyaz.github.io

13-04-2018 08:53:08

17 Tweets

172 Followers

385 Following

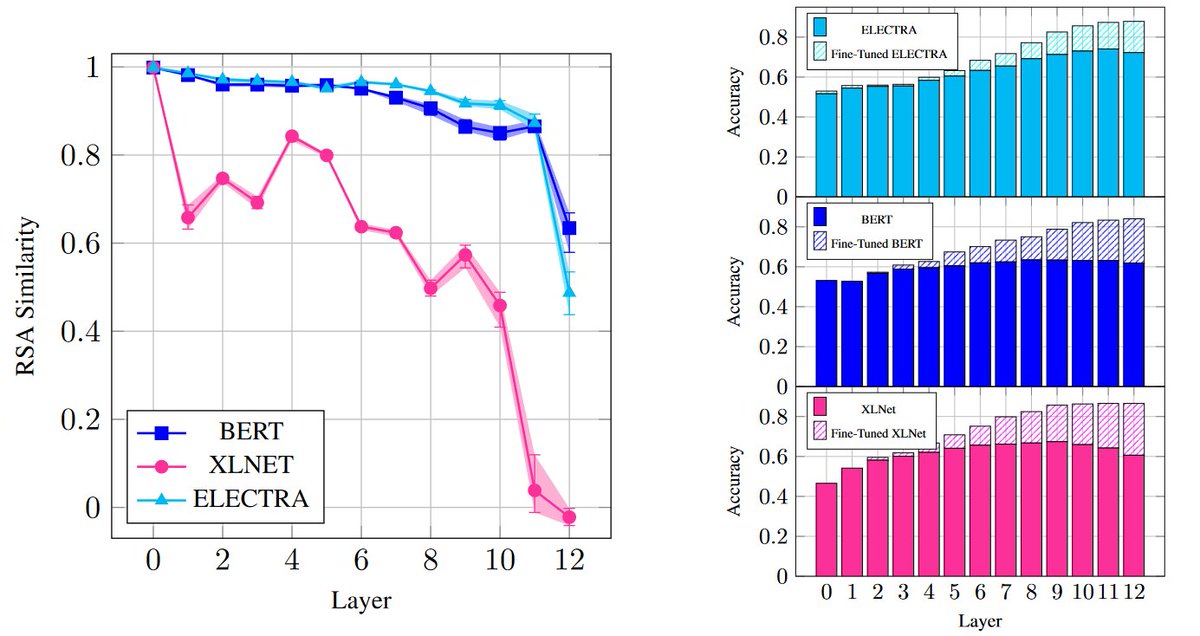

#EMNLP2021 Presenting "Not All Models Localize Linguistic Knowledge in the Same Place" at BlackboxNLP poster session 3 (Gather.town), Nov 11, 14:45 Punta Cana time (UTC-4). 📝Paper: aclanthology.org/2021.blackboxn… with Ehsan Aghazadeh, Ali Modarressi, Hosein Mohebbi, and Taher Pilehvar #BlackboxNLP

🎉I'm delighted to announce that our (w/ Mohsen Fayyaz, Ehsan Aghazadeh, Yadollah Yaghoobzadeh & Taher Pilehvar) paper “DecompX: Explaining Transformers Decisions by Propagating Token Decomposition” has been accepted to #ACL2023 🥳🥳 Preprint coming soon📄⏳

Check out our (w/ Mohsen Fayyaz, Ehsan Aghazadeh, Yadollah Yaghoobzadeh & Taher Pilehvar) #ACL2023 paper “DecompX: Explaining Transformers Decisions by Propagating Token Decomposition” 📽️ Video, 💻 Code, Demo & 📄 Paper: github.com/mohsenfayyaz/D… arxiv.org/abs/2306.02873 (🧵1/4)

Mohsen Fayyaz's recent work revealed several critical issues with dense retrievers, which favor spurious correlations over knowledge, making RAG particularly vulnerable to adversarial examples. Check out more details 👇