Michael Wornow

@michaelwornow

Computer Science PhD Student @ Stanford

ID: 1633929360662216704

https://michaelwornow.net/ 09-03-2023 20:34:59

122 Tweets

379 Followers

129 Following

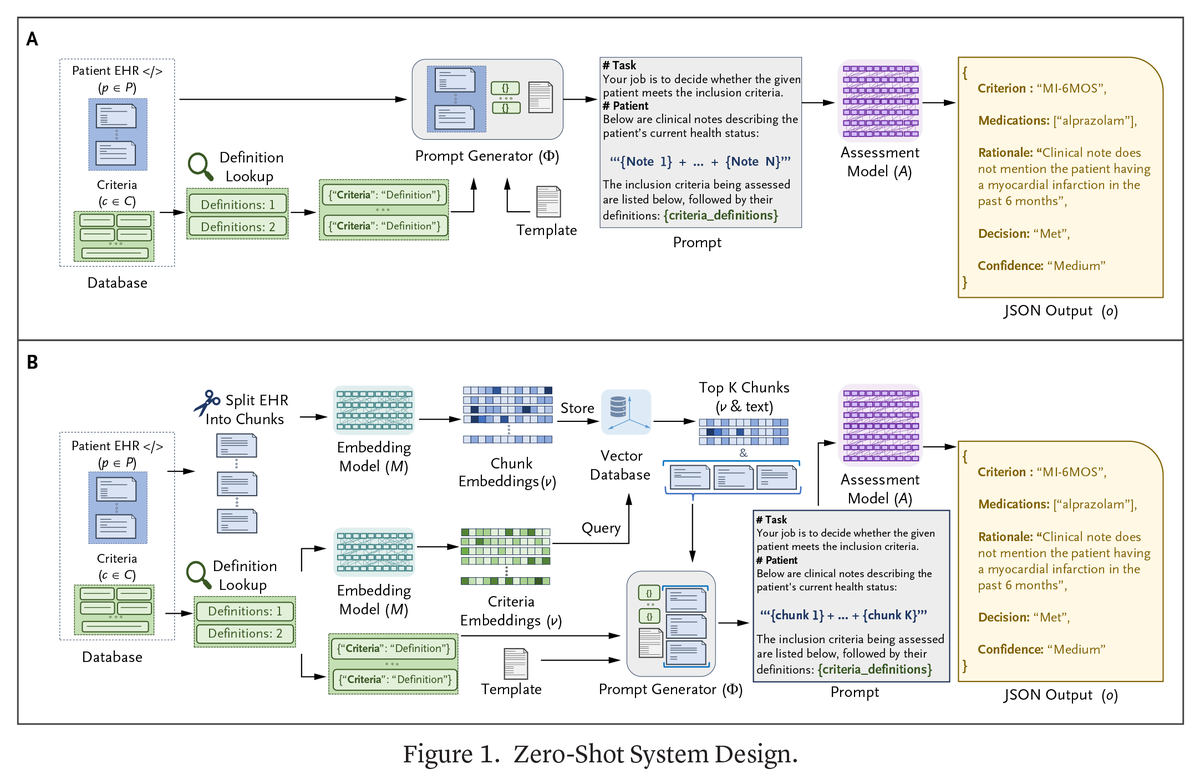

From medical record to finding a clinical trial through AI? Using an "out-of-the-box," "zero-shot" AI model. NEJM AI ai.nejm.org/doi/10.1056/AI… Interesting study, Stanford University. Should all clinicians and patients be using this when no one else is offering a state-of-the-art trial? In cancer,

Great to see the Mamba architecture evaluated on EHR-related tasks, along with a robust analysis of EHR context complexities, in this new paper with a fun title. Michael Wornow arxiv.org/pdf/2412.16178

In a Case Study, Michael Wornow et al. investigate the accuracy, efficiency, and interpretability of using LLMs for clinical trial patient matching, with a focus on the zero-shot performance of these models to scale to arbitrary trials. Learn more: nejm.ai/4fM0Gmv