Mathis Pink

@mathispink

👀🧠(x) | x ∈ {👀🧠,🤖}

PhD student @mpi_sws_

trying to trick rocks into thinking and remembering.

ID: 1299449849637728257

28-08-2020 20:53:17

92 Tweets

350 Followers

2.2K Following

Excited to share our new preprint bit.ly/402EYEb with Mariya Toneva, Norman Lab, and Manoj Kumar, in which we ask if GPT-3 (a large language model) can segment narratives into meaningful events similarly to humans. We use an unconventional approach: ⬇️

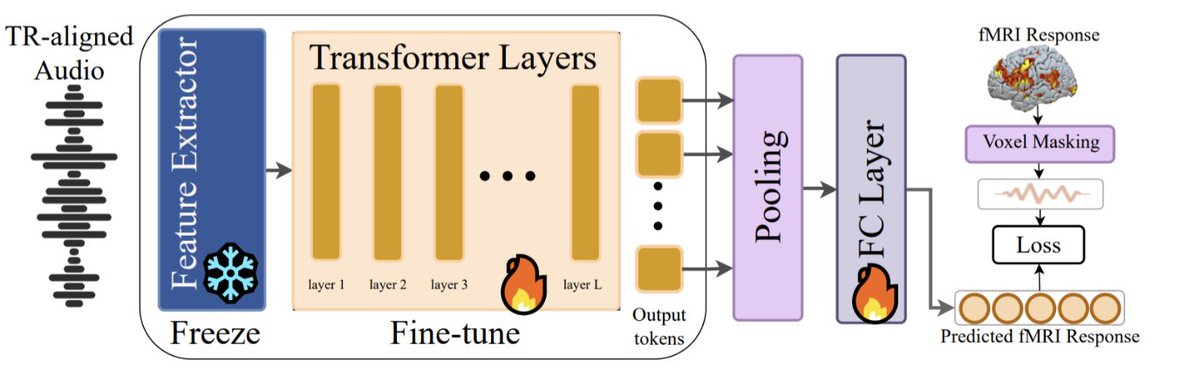

We are so excited to share the first work that demonstrates consistent downstream improvements for language tasks after fine-tuning with brain data!! Improving semantic understanding in speech language models via brain-tuning arxiv.org/abs/2410.09230 W/ Dietrich Klakow, Mariya Toneva

🚨Excited to share our latest work published at Interspeech 2025: “Brain-tuned Speech Models Better Reflect Speech Processing Stages in the Brain”! 🧠🎧 arxiv.org/abs/2506.03832 W/ Mariya Toneva We fine-tuned speech models directly with brain fMRI data, making them more brain-like.🧵

Exciting new preprint from the lab: “Adopting a human developmental visual diet yields robust, shape-based AI vision”. A most wonderful case where brain inspiration massively improved AI solutions. Work with Zejin Lu | 陆泽金, Sushrut Thorat, and Radoslaw Cichy arxiv.org/abs/2507.03168