Mark Kurtz

@markurtz_

AI @ @RedHat, former CTO @ @NeuralMagic (acquired), active ML researcher and contributor

ID: 855942805956554752

23-04-2017 00:34:02

179 Tweets

356 Followers

95 Following

Mark Kurtz, CTO of Neural Magic, shares insights on building a career in AI, from hands-on expertise to understanding AI’s limitations. He emphasizes mentorship and open-source projects in advancing education. Read his tips for impactful AI solutions!👇 medium.com/authority-maga…

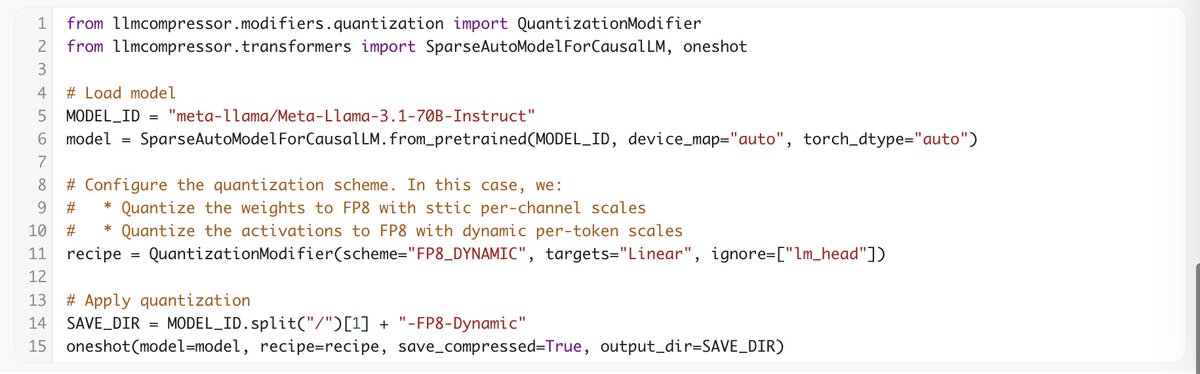

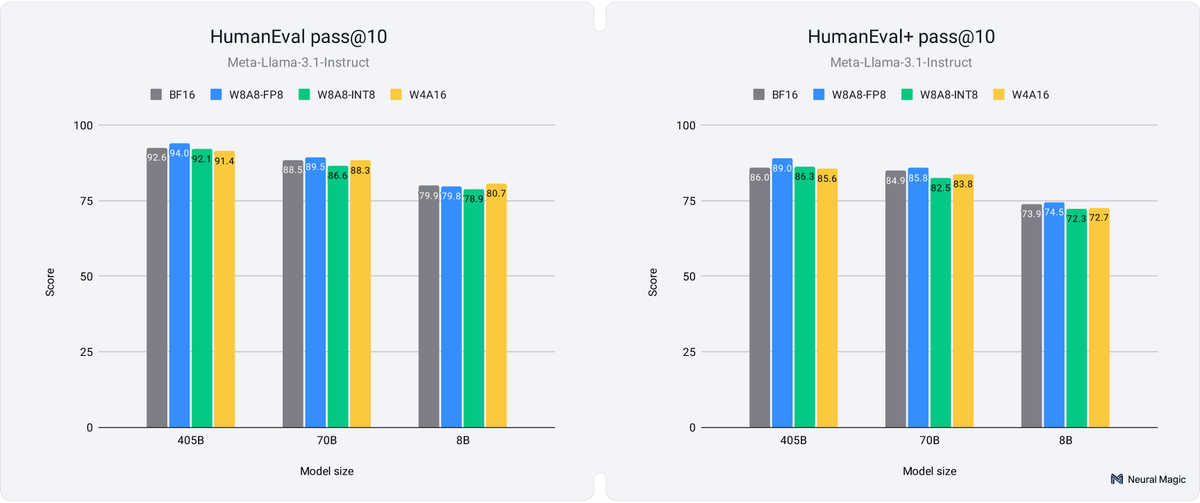

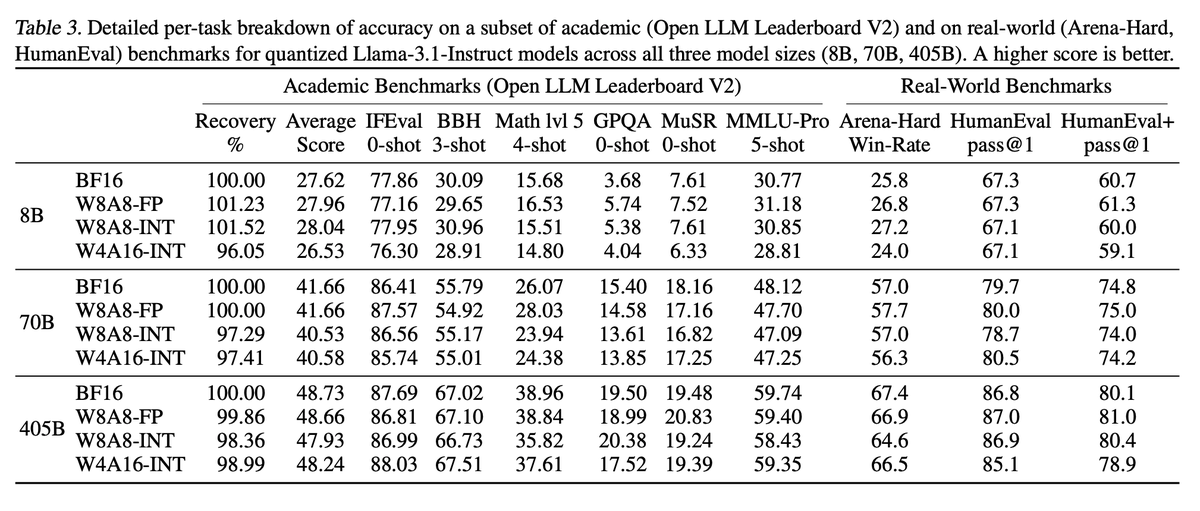

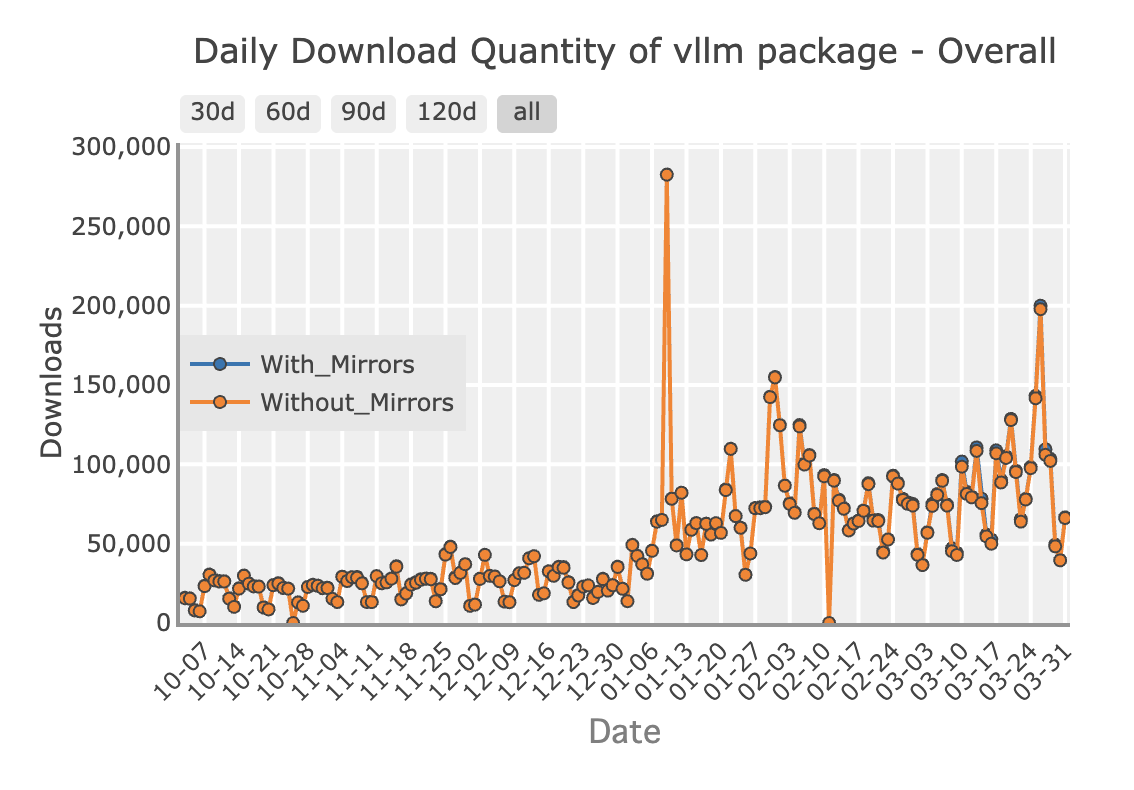

Join us this Friday for Random Samples, a weekly AI talk series from the Red Hat AI Innovation Team. Topic: "The State of LLM Compression: From Research to Production." We’ll explore quantization, sparsity, academic vs. real-world benchmarks, and more. Join details in comments 👇

Missed the session? No worries! Watch the recording on YouTube: youtube.com/live/T8XDkZuv7… View the slides: docs.google.com/presentation/d… Questions? Drop them in comments and Mark Kurtz will get back to you.