Alexia Jolicoeur-Martineau

@jm_alexia

AI Researcher at the Samsung SAIT AI Lab 🐱💻

I build generative models for images, videos, text, tabular data, NN weights, molecules, and video games.

ID: 839820726777544705

http://ajolicoeur.wordpress.com 09-03-2017 12:50:39

8,8K Tweets

11,11K Followers

1,1K Following

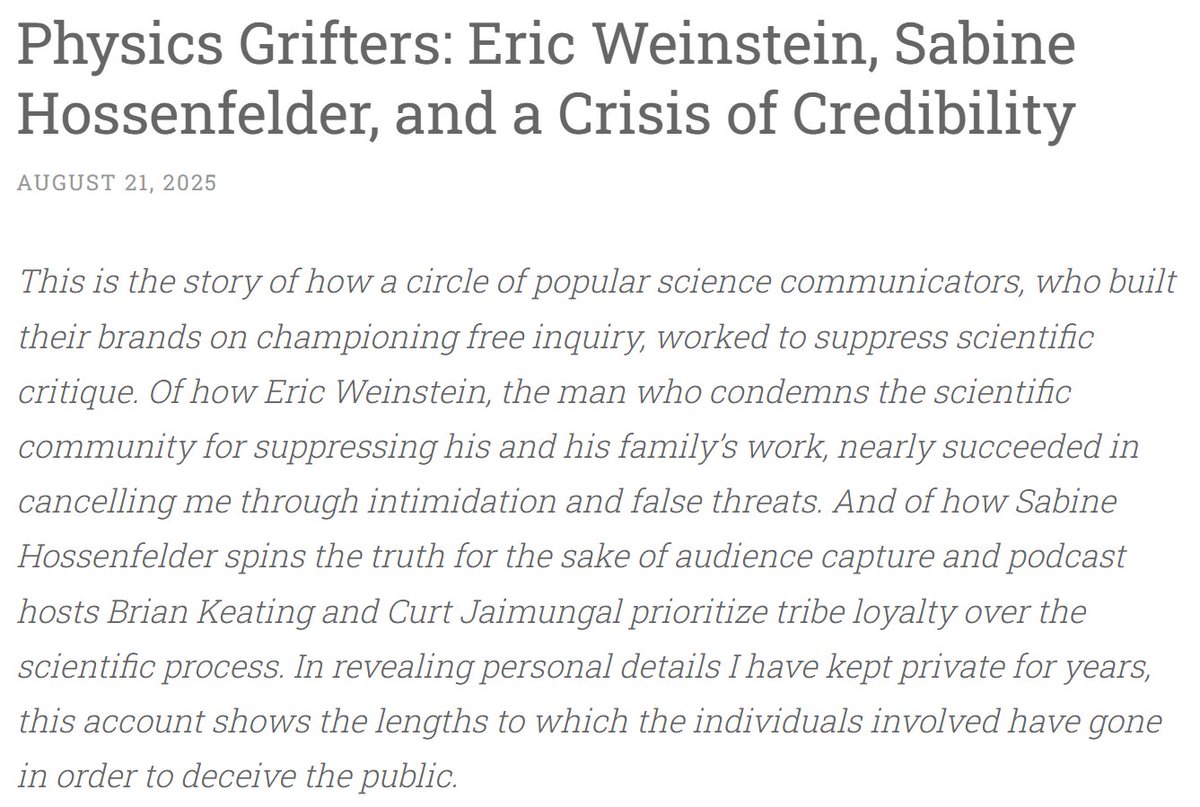

I'm breaking my silence. For years, I was quiet about how Eric Weinstein, Sabine Hossenfelder, Prof. Brian Keating & Curt Jaimungal suppressed my scientific critique. They preach free inquiry but practice censorship. This is the story of their hypocrisy. 🧵 timothynguyen.org/2025/08/21/phy…