Haomeng Zhang

@haomengz99

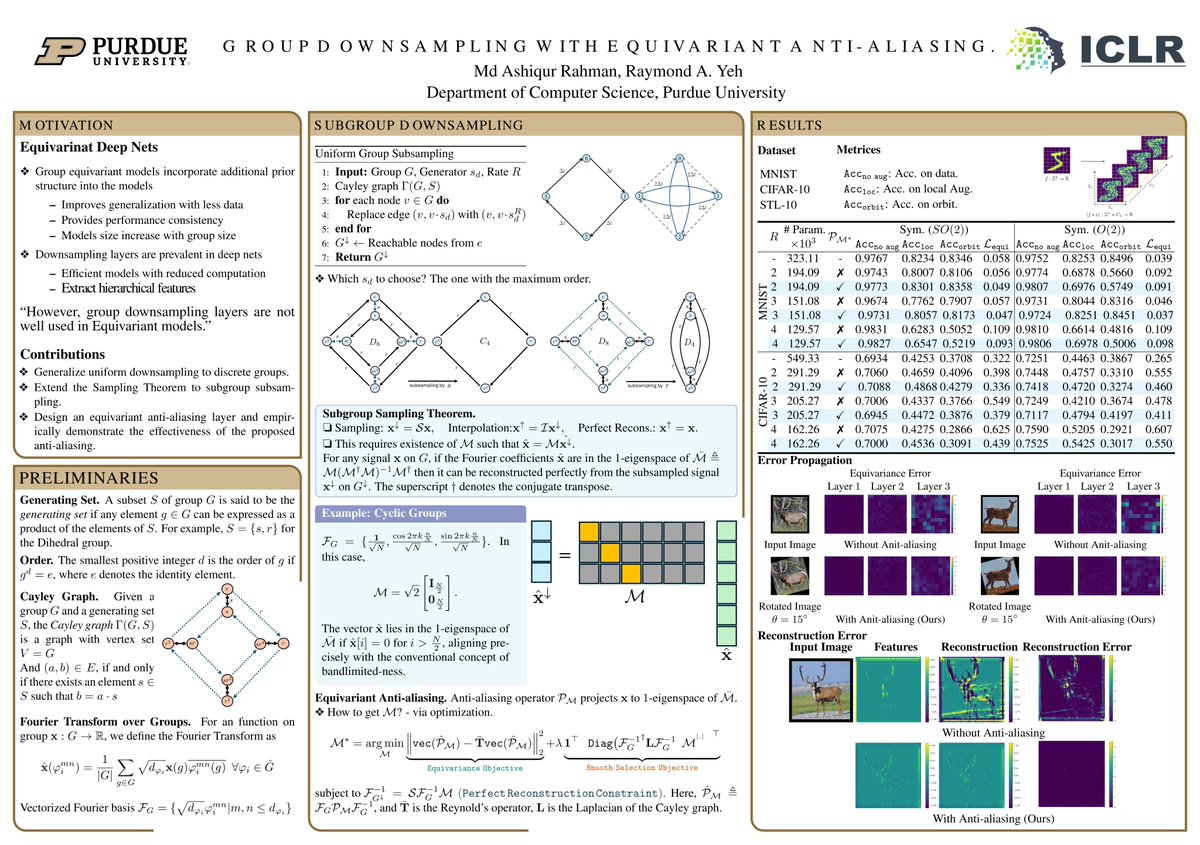

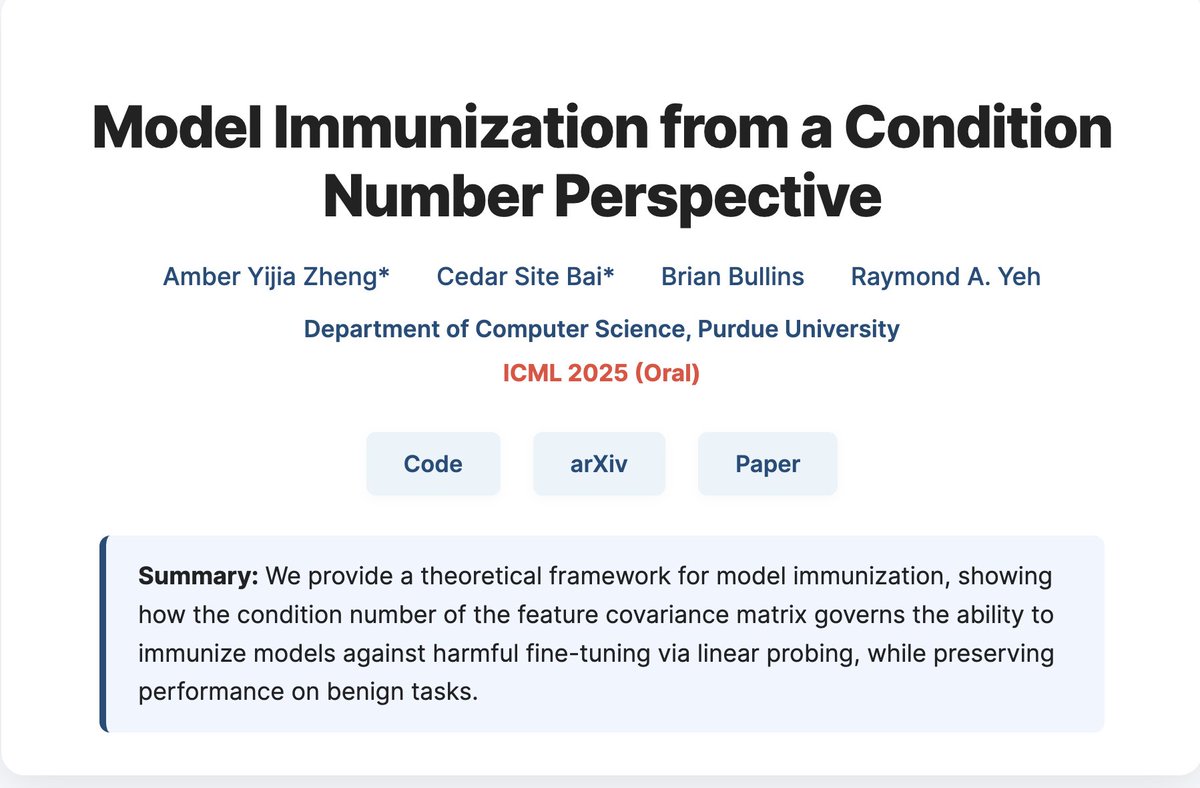

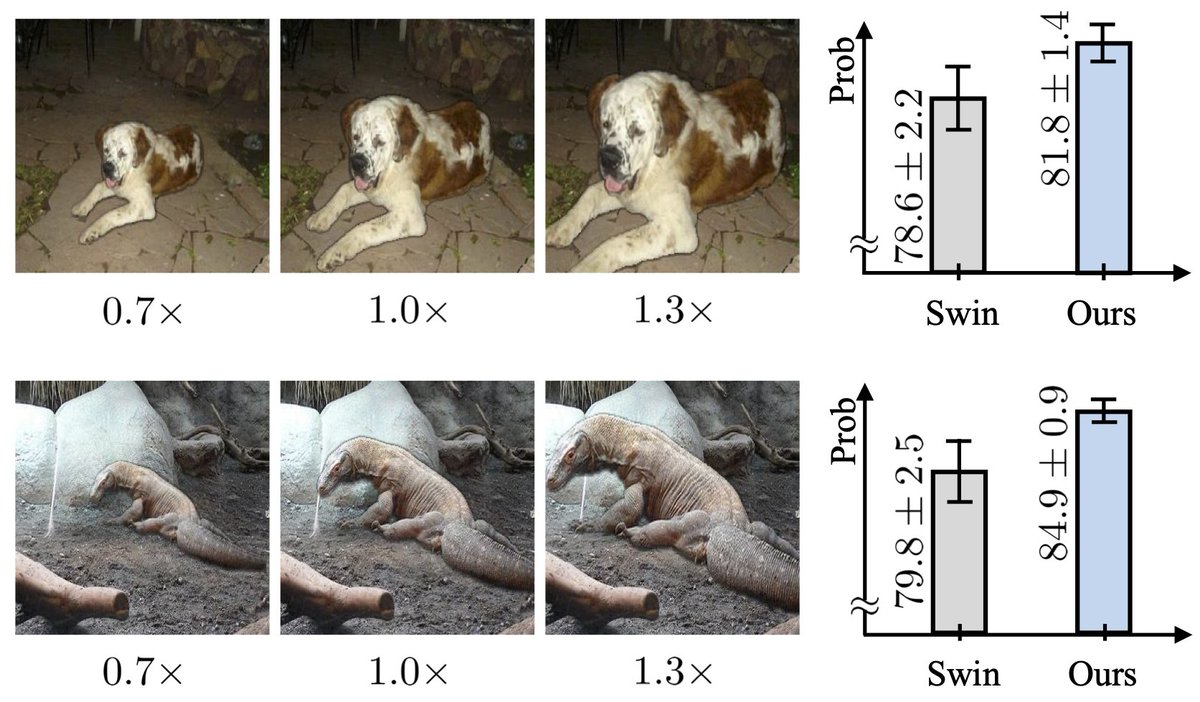

Ph.D. @PurdueCS working on Computer Vision & Robotics; @IllinoisCS @UMichCSE @sjtu1896 Alumni

ID: 1238672762547449857

https://haomengz.github.io/

Joined 14-03-2020 03:46:26

49 Tweets

387 Followers

966 Following

Honored to be recognized as a top reviewer for NeurIPS Conference 2024! neurips.cc/Conferences/20… #neurips2024