Andrea de Varda

@devarda_a

Postdoc at MIT BCS, interested in language(s) in humans and LMs

ID: 1506315259337973767

22-03-2022 17:02:32

101 Tweets

340 Followers

466 Following

1/7 If you're at CogSci 2025, I'd love to see you at my talk on Friday 1pm PDT in Nob Hill A! I'll be talking about our work towards an implemented computational model of noisy-channel comprehension (with jacob lou hoo vigly ⍼, Ted Gibson, Language Lab MIT, and Roger Levy).

Is the Language of Thought == Language? A Thread 🧵 New Preprint (link: tinyurl.com/LangLOT) with Alexander Fung, Paris Jaggers, Jason Chen, Josh Rule, Dr Yael Benn, CoCoSci MIT, steven t. piantadosi, Rosemary Varley, Ev (like in 'evidence', not Eve) Fedorenko 🇺🇦 1/8

(1)💡NEW PUBLICATION💡 Word and construction probabilities explain the acceptability of certain long-distance dependency structures Work with Curtis Chen and Ted Gibson, Language Lab MIT Link to paper: tedlab.mit.edu/tedlab_website… In memory of Curtis Chen.

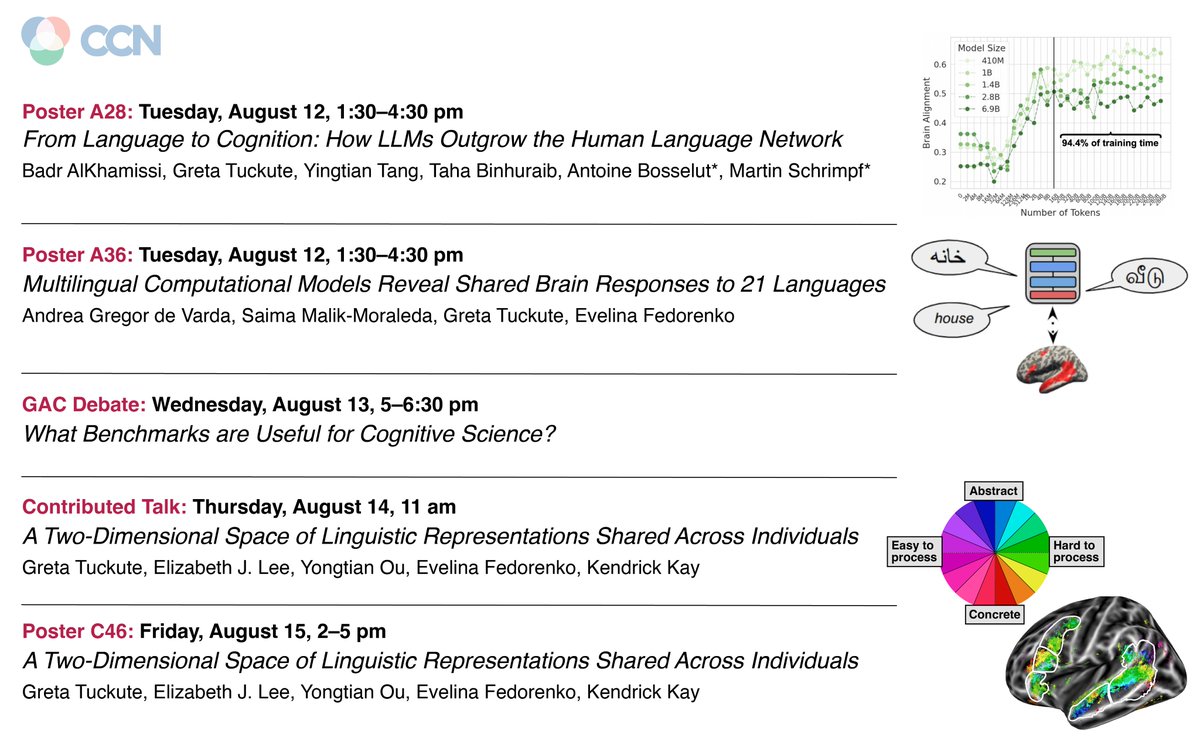

Can't wait for #CCN2025! Drop by to say hi to me / collaborators! Badr AlKhamissi Yingtian Tang Taha Binhuraib 🦉 Antoine Bosselut Martin Schrimpf Andrea de Varda Saima Malik-Moraleda Ev (like in 'evidence', not Eve) Fedorenko 🇺🇦 Elizabeth L cvnlab

Road to Bordeaux ✈️🇫🇷 for #SLE2025, where I am going to present IconicITA!! An Abstraction Project ERC-StG Università di Bologna and Università degli Studi di Milano-Bicocca joint project on iconicity ratings ☑️ for the Italian language across L1 and L2 speakers! M.bolognesi Beatrice Giustolisi Ani.ravelli Andrea de Varda Chiara Saponaro

Are there conceptual directions in VLMs that transcend modality? Check out our COLM spotlight🔦 paper! We analyze how linear concepts interact with multimodality in VLM embeddings using SAEs with Chloe H. Su, @napoolar, Sham Kakade and Stephanie Gil arxiv.org/abs/2504.11695

As our lab started to build encoding 🧠 models, we were trying to figure out best practices in the field. So Taha Binhuraib 🦉 built a library to easily compare design choices & model features across datasets! We hope it will be useful to the community & plan to keep expanding it! 1/

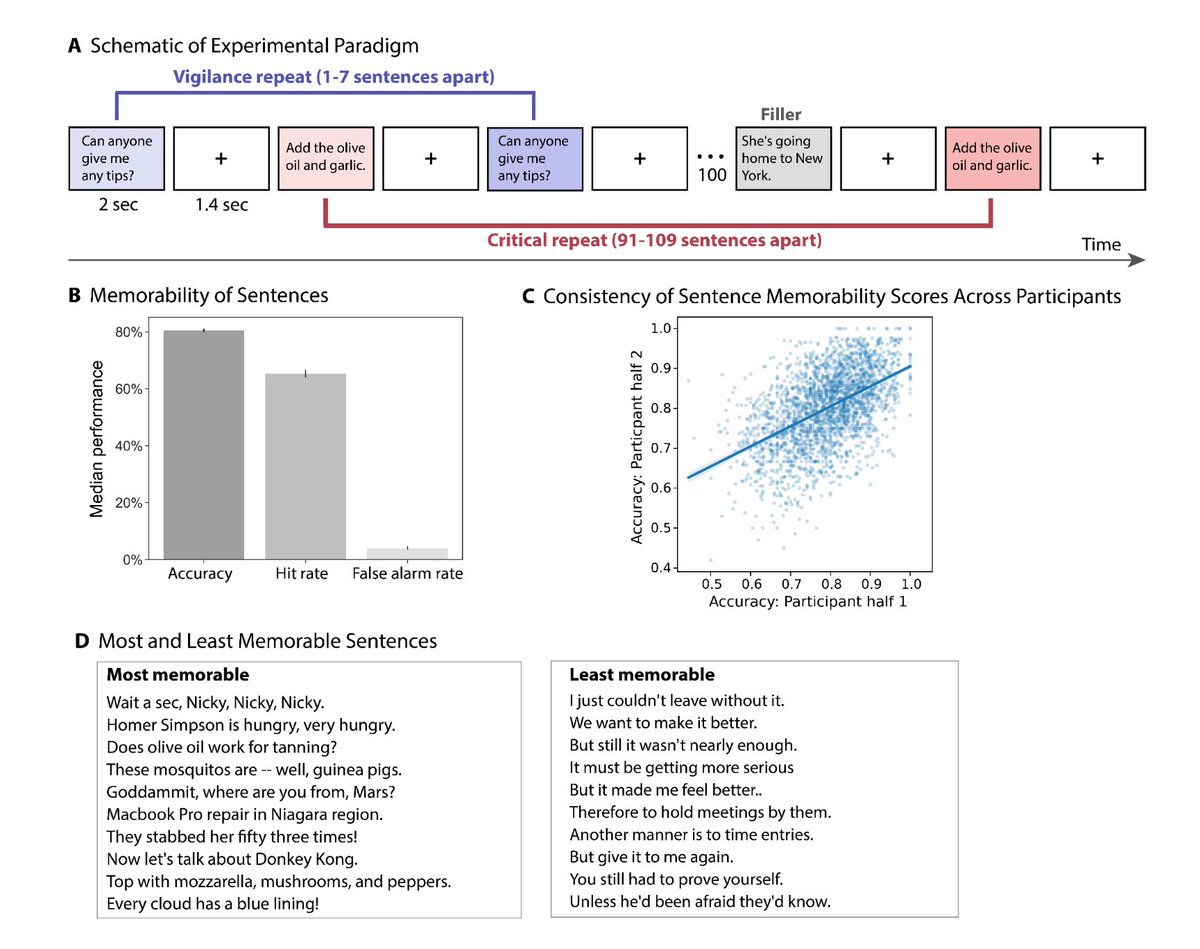

What makes some sentences more memorable than others? Our new paper gathers memorability norms for 2500 sentences using a recognition paradigm, building on past work in visual and word memorability. Greta Tuckute Bryan Medina Ev (like in 'evidence', not Eve) Fedorenko 🇺🇦