Christian Internò

@chrisinterno

AI Scientist at @unibielefeld (@HammerLabML) and @Honda Research Institute EU, Visiting Researcher at @CSHL at the Department of Computational Neuroscience.

ID: 1838837077745766402

https://github.com/ChristianInterno 25-09-2024 07:05:20

9 Tweets

41 Followers

559 Following

You're into neuroscience and AI? 🧠 🤖 You're working on the mathematics that drives biological and artificial neural networks? We want to hear from you! Submit to NeurReps 2025 at NeurIPS Conference! 📅 Deadline: Aug 22 📄 Two tracks: 9p proceedings & 4p extended abstracts

Very proud of our summer intern Isabela Camacho (Isa Camacho) giving her final presentation 🧠, and of everyone in the group who made this a great summer with awesome science 🧬 and even better vibes 🌞 #cshl #neuroai

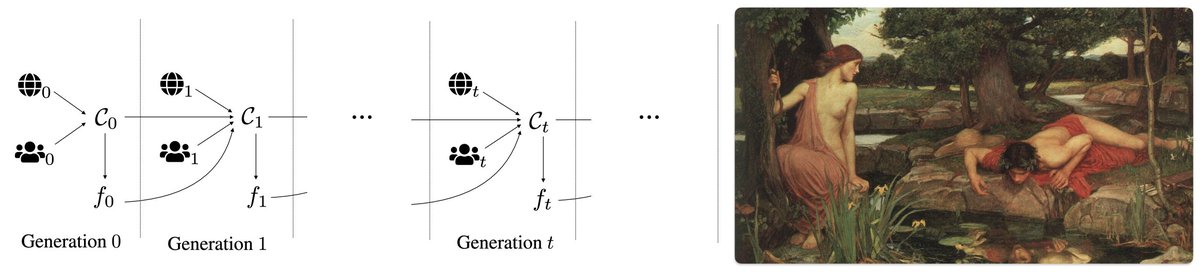

Judea Pearl Treatment effect estimation requires priors defining candidate effects. Without priors there are no ‘computable causal claims’, i.e., what is the treatment affecting, in the sense of maximal causal abstraction with semantic interpretation? Example: what is the effect of a

Boris Sobolev Similarly, AI-powered experiments raise new questions, challenging epistemic robustness. If the premises are wrong, e.g., biased measurements of the world, causal reasoning is insufficient, even misleading. It requires climbing Judea Pearl's ladder from the very bottom, where

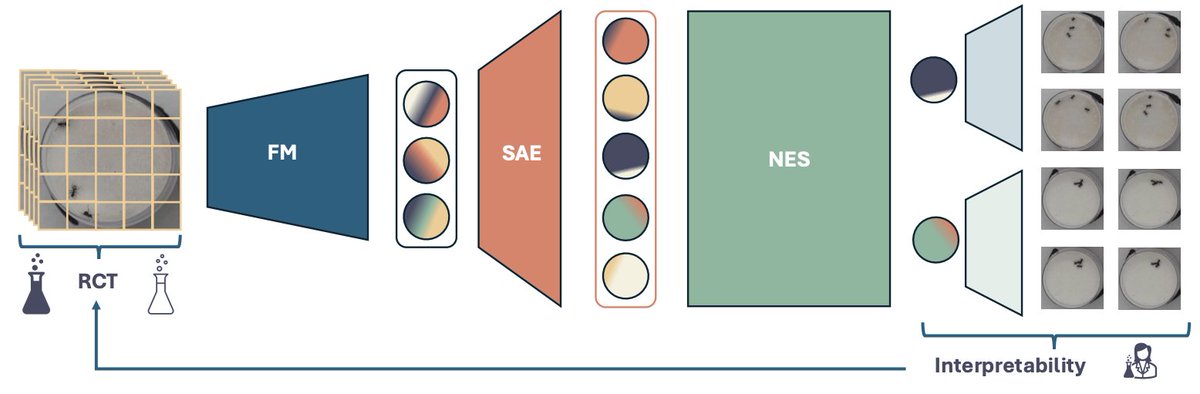

That's it. If you train SAEs, try adding whitening. It might work better 👍 Awesome job, Ashwin Saraswatula *watch out, he is on the grad school market 🌟 [11/11]
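
For readers unfamiliar with the term, here is a minimal sketch of what whitening can look like, assuming "SAEs" refers to sparse autoencoders trained on model activations. The thread shown here does not say which whitening variant was used, so the ZCA transform below, the `zca_whiten` helper, the `eps` value, and the synthetic `acts` array are all illustrative assumptions rather than the authors' method.

```python
import numpy as np

def zca_whiten(x, eps=1e-5):
    """ZCA-whiten x (rows are samples); return whitened data, mean, and whitening matrix."""
    mean = x.mean(axis=0, keepdims=True)
    xc = x - mean                                  # center each feature
    cov = xc.T @ xc / (x.shape[0] - 1)             # sample covariance
    eigvals, eigvecs = np.linalg.eigh(cov)         # cov is symmetric, so eigh applies
    # Rescale along each eigen-direction; eps guards against tiny eigenvalues.
    w = eigvecs @ np.diag(1.0 / np.sqrt(eigvals + eps)) @ eigvecs.T
    return xc @ w, mean, w

rng = np.random.default_rng(0)
# Hypothetical stand-in for activations: Gaussian features mixed to be correlated.
mix = np.eye(8) + 0.5 * np.ones((8, 8))
acts = rng.normal(size=(2000, 8)) @ mix

white, mean, w = zca_whiten(acts)
cov_white = np.cov(white, rowvar=False)            # should be close to the identity
```

In this sketch the whitened array `white` would be the input to the autoencoder, and `mean` and `w` would be kept so the same transform can be applied to new activations.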

NeurIPS should always be held in San Diego. #NeurIPS2025 NeurIPS Conference