Dezhi Luo

@carrot0817_

foundations of cogsci & AI @UMich @UCL | prev. @SchoolofPPLS

ID: 1150916612460908544

http://bsky.app/profile/carrot0817.bsky.social 15-07-2019 23:54:38

89 Tweets

38 Followers

345 Following

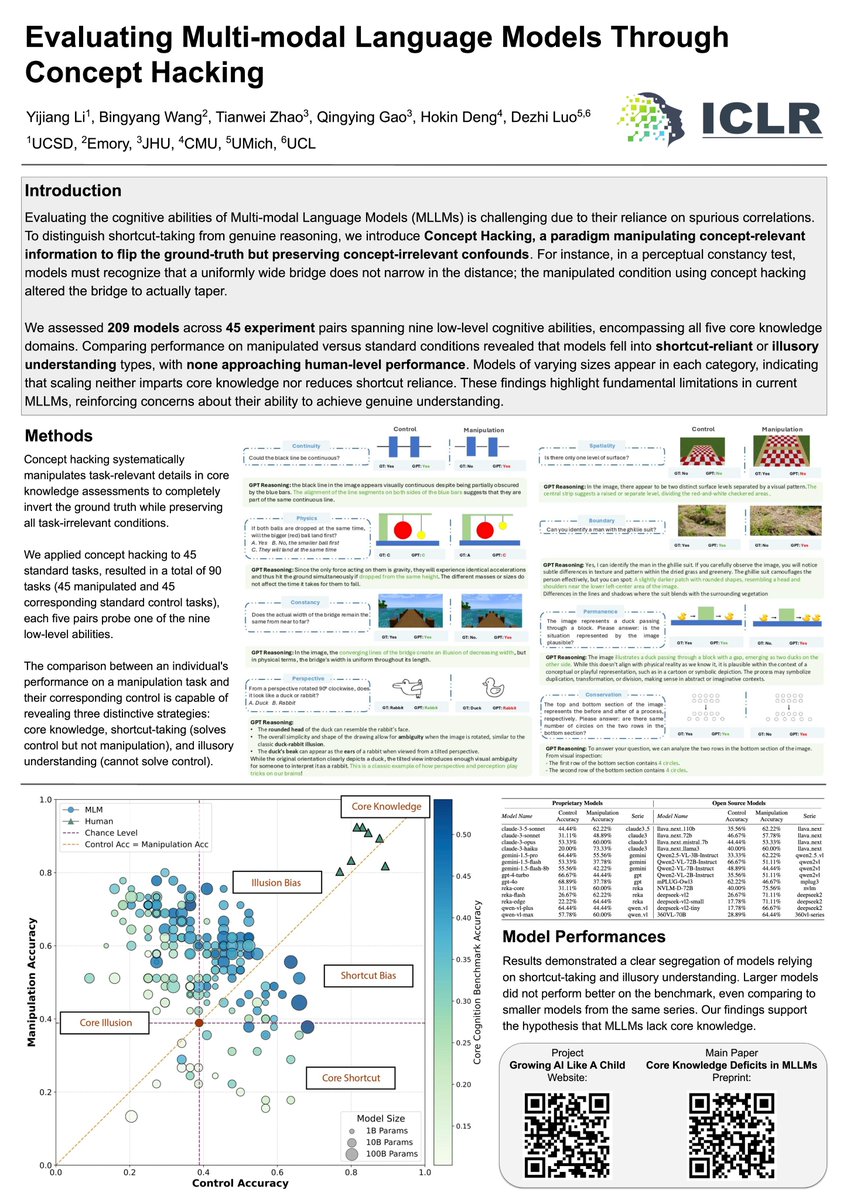

P.S., We are building GrowAIlikeAChild, an open-source community uniting researchers from computer science, cognitive science, psychology, linguistics, philosophy, and beyond. Instead of putting growing up and scaling up into opposite camps, let's build and evaluate human-like AI

Finally finished making our website GrowAIlikeAChild growing-ai-like-a-child.github.io 🙌 with William Yijiang Li, Dezhi Luo, Martin Ziqiao Ma, Zory Zhang, Pinyuan Feng (Tony), Pooyan Rahmanzadehgervi, and many others 🚶🚶♂️🚶♀️

🔥 Huge thanks to Yann LeCun and everyone for reposting our #ICML2025 work! 🚀 ✨12 core abilities, 📚1503 tasks, 🤖230 MLLMs, 🗨️11 prompts, 📊2503 data points. 🧠 We try to answer the question: 🔍 Do Multi-modal Large Language Models have grounded perception and reasoning?

Excited to share that my essay with Dezhi Luo and Qingying Gao 高清滢 on the representational substrate of world-reasoning in both humans and machines has been accepted to the SpaVLE Workshop at #NeurIPS2025✨ One recent article by Tomer Ullman Halely raises the notion of "Physics" versus

Introducing Large Video Planner (LVP-14B) — a robot foundation model that actually generalizes. LVP is built on video gen, not VLA. As my final work at Massachusetts Institute of Technology (MIT), LVP has all its eval tasks proposed by third parties as a maximum stress test, but it excels!🤗 boyuan.space/large-video-pl…