Mrinal Mathur

@bobthemaster

Research Engineer @Google | @BytedanceTalk | @Amazon | @Apple | @CenterTrends | @ARM

ID: 114763112

16-02-2010 14:45:27

8.8K Tweets

363 Followers

639 Following

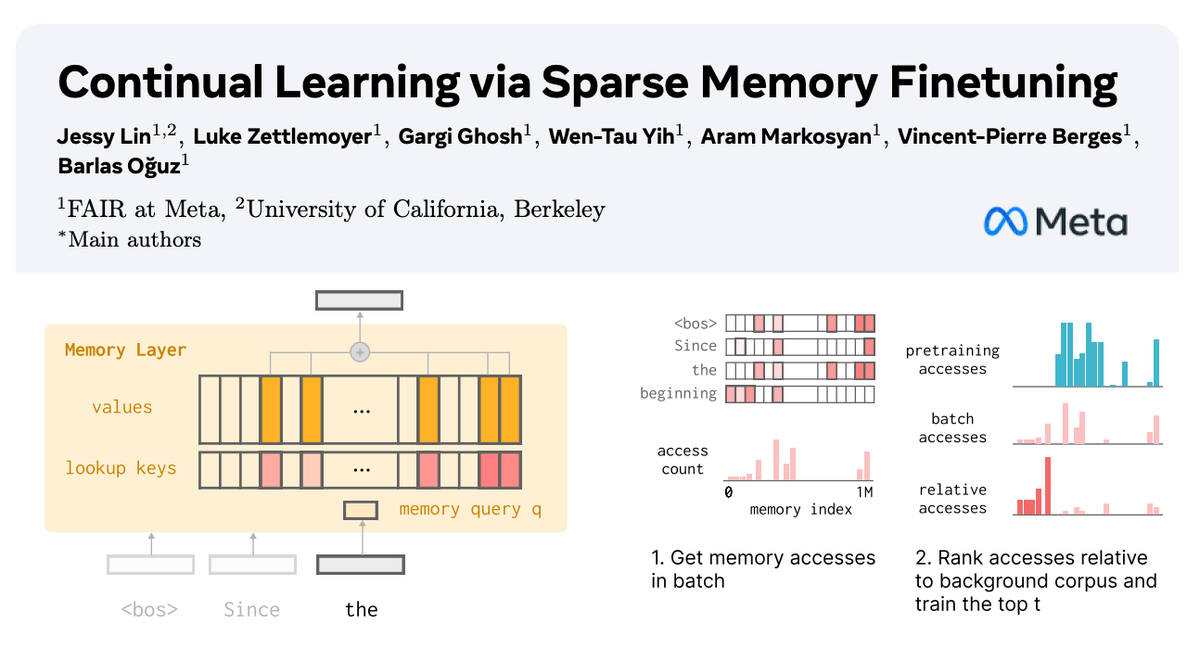

🧠 How can we equip LLMs with memory that allows them to continually learn new things? In our new paper with AI at Meta, we show how sparsely finetuning memory layers enables targeted updates for continual learning, w/ minimal interference with existing knowledge. While full
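The tweet does not say how the updated memory slots are chosen, so this is only a minimal sketch under stated assumptions. A memory layer is modeled as a plain table of value vectors, and a hypothetical selection rule (the `t` slots accessed most often by the new batch) decides which rows are trainable. Every other row stays frozen, which is what keeps the update targeted. The names `select_slots` and `sparse_update` are illustrative and do not come from the paper.

```python
from collections import Counter
from typing import Dict, List, Set

def select_slots(accessed: List[int], t: int) -> Set[int]:
    """Hypothetical selection rule: the t memory slots accessed most often by the new batch."""
    counts = Counter(accessed)
    return {idx for idx, _ in counts.most_common(t)}

def sparse_update(
    memory: List[List[float]],
    grads: Dict[int, List[float]],
    trainable: Set[int],
    lr: float,
) -> None:
    """Apply one SGD step in place, but only to rows in `trainable`; all other rows stay frozen."""
    for idx, grad in grads.items():
        if idx not in trainable:
            continue  # frozen slot: its gradient is discarded
        memory[idx] = [v - lr * g for v, g in zip(memory[idx], grad)]

# Usage: three 2-d memory slots; only the two most-accessed slots (2 and 0) are updated.
memory = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
trainable = select_slots(accessed=[0, 2, 2, 0, 2, 1], t=2)
sparse_update(memory, {i: [1.0, 1.0] for i in range(3)}, trainable, lr=0.5)
print(memory)  # [[0.5, 0.5], [2.0, 2.0], [2.5, 2.5]]
```

Slot 1 receives a gradient but is left untouched, so whatever it already encodes is not overwritten by the new data.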

Colab notebook for on-policy distillation (OPD) 👇🔗 (for those without Thinking Machines Tinker access): train qwen-0.6b with OPD to go from 38% -> 60% on GSM8K. Works even for models that don't share the same tokenizer!
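On-policy distillation, as commonly described, samples completions from the student and scores each sampled token with the teacher, giving a dense per-token reward. A minimal sketch, assuming both models' log-probs for the sampled tokens are already available over one shared token sequence (the cross-tokenizer case mentioned above needs an extra alignment step that is not shown): the per-token reverse-KL estimate is `log p_student - log p_teacher`, and its negation serves as the advantage in a policy-gradient update. All function names are hypothetical and not taken from the notebook.

```python
import math
from typing import List, Sequence

def per_token_reverse_kl(student_logprobs: Sequence[float], teacher_logprobs: Sequence[float]) -> List[float]:
    """Single-sample estimate of KL(student || teacher) at each token the student sampled."""
    return [s - t for s, t in zip(student_logprobs, teacher_logprobs)]

def opd_advantages(student_logprobs: Sequence[float], teacher_logprobs: Sequence[float]) -> List[float]:
    """Per-token advantage: tokens the teacher rates as more likely than the student did get positive credit."""
    return [-k for k in per_token_reverse_kl(student_logprobs, teacher_logprobs)]

def surrogate_loss(student_logprobs: Sequence[float], advantages: Sequence[float]) -> float:
    """Policy-gradient surrogate to minimize: -mean(A_t * log p_student(x_t)), with A_t held constant."""
    return -sum(a * lp for a, lp in zip(advantages, student_logprobs)) / len(advantages)

def exact_reverse_kl(p_student: Sequence[float], p_teacher: Sequence[float]) -> float:
    """Exact KL(student || teacher) over a small vocabulary, the quantity the sampled estimate targets."""
    return sum(ps * (math.log(ps) - math.log(pt)) for ps, pt in zip(p_student, p_teacher) if ps > 0)

# Usage: the student over-weights token 1 and under-weights token 2 relative to the teacher.
student = [math.log(0.5), math.log(0.25)]
teacher = [math.log(0.25), math.log(0.5)]
adv = opd_advantages(student, teacher)
print(adv, surrogate_loss(student, adv))
```

In a real training loop the advantages would be treated as constants and the loss backpropagated through the student's log-probs only; the teacher is never updated.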

Tiny Recursive Models 🔁 meet Amortized Learners 🧠 After Alexia Jolicoeur-Martineau’s great talk, we realized our framework mirrors it: recursion (Nₛᵤₚ = steps, n, T = 1), detached grads but a new observation at each step → amortizing over long context. Works across generative models, neural processes, & beyond.
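The recursion-with-detached-gradients pattern can be illustrated with a toy scalar model. This is a minimal sketch, not the authors' framework: the latent update `tanh(w * z + x)`, the squared-error loss, and the names `recursion_step` and `train_with_deep_supervision` are all assumptions. Each supervision step (one per observation, so Nₛᵤₚ equals the number of observations) performs a single latent update (n = T = 1), takes one gradient step on `w`, and carries the latent forward without a gradient path, so earlier steps are never revisited.

```python
import math
from typing import List, Sequence, Tuple

def recursion_step(w: float, z: float, x: float, y: float) -> Tuple[float, float, float]:
    """One latent update plus its loss and dL/dw, treating the incoming latent z as a constant."""
    z_new = math.tanh(w * z + x)  # new latent from the previous latent and the current observation
    loss = 0.5 * (z_new - y) ** 2
    # Chain rule through tanh only: because z is "detached", there is no dz/dw term
    # reaching back into earlier steps.
    grad_w = (z_new - y) * (1.0 - z_new ** 2) * z
    return z_new, loss, grad_w

def train_with_deep_supervision(
    w: float, observations: Sequence[float], targets: Sequence[float], lr: float = 0.1
) -> Tuple[float, List[float]]:
    """Run one supervision step per observation, updating w after each step."""
    z = 0.0
    losses = []
    for x, y in zip(observations, targets):
        z, loss, grad_w = recursion_step(w, z, x, y)  # z is carried forward as a plain value
        w -= lr * grad_w
        losses.append(loss)
    return w, losses

w, losses = train_with_deep_supervision(0.5, observations=[0.2, -0.1, 0.4], targets=[0.3, 0.1, 0.5])
print(w, losses)
```

Truncating the gradient at every step keeps memory and compute per step constant, regardless of how long the stream of observations grows.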

Test-time Adaptation of Tiny Recursive Models: New Paper, and the Trelis Submission Approach for the 2025 ARC Prize Competition! In brief:
- Alexia Jolicoeur-Martineau's excellent TRM approach does not quite fit in the compute constraints of the ARC Prize competition
- BUT, if you take a