BigScience Large Model Training

@bigsciencellm

Follow the training of "BLOOM 🌸", the @BigScienceW multilingual 176B-parameter open-science, open-access language model, a research tool for the AI community.

ID: 1502036410081202180

https://bigscience.notion.site/BigScience-176B-Model-Training-ad073ca07cdf479398d5f95d88e218c4

10-03-2022 21:40:30

129 Tweets

8.8K Followers

1 Following

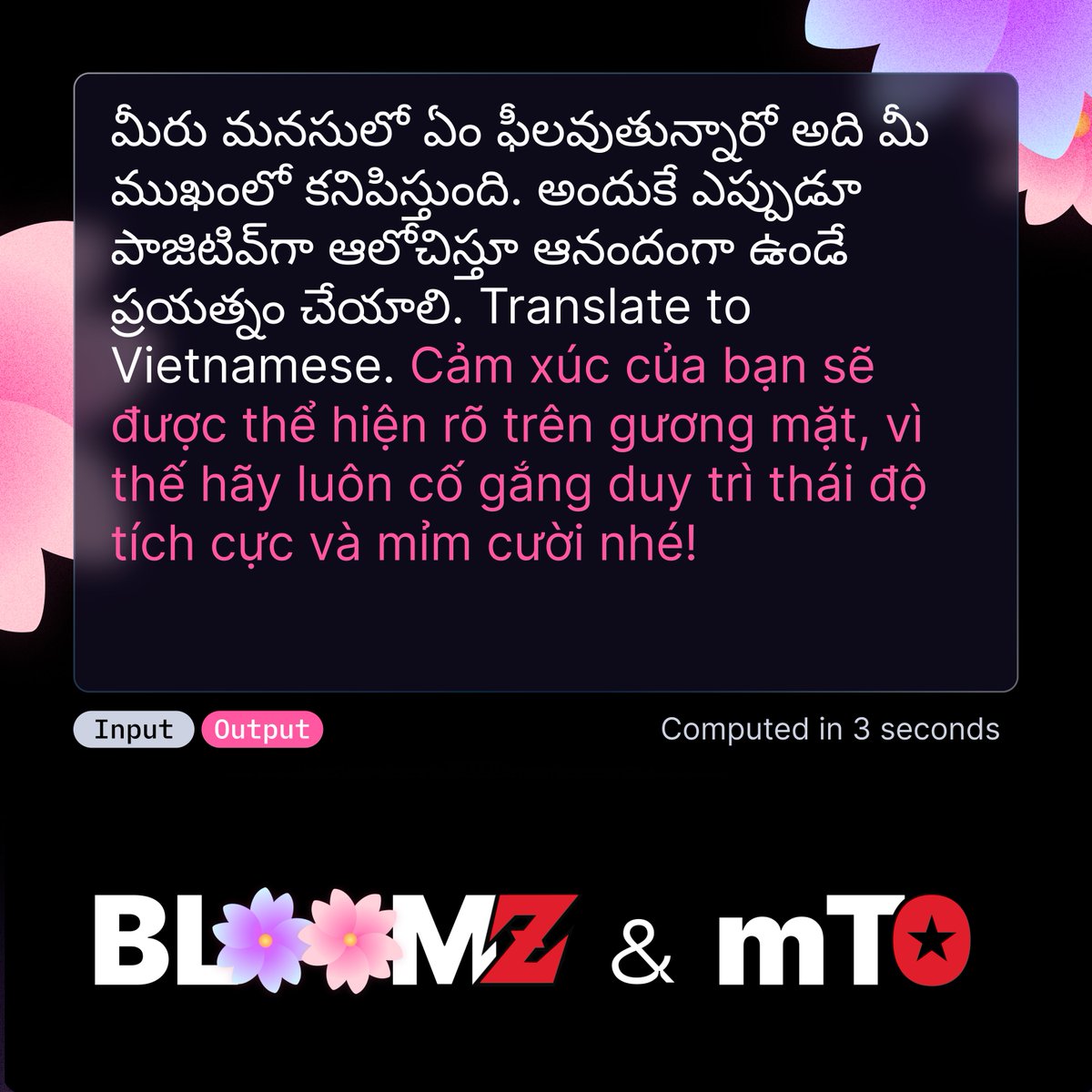

🌸 BigScience Research Workshop: BLOOM's intermediate checkpoints have already shown some very cool capabilities! What's great about BLOOM is that you can ask it to generate the rest of a text, even though it is not fully trained yet! 👶 🧵 A thread with some examples

The Technology Behind BLOOM Training 🌸 Discover how BigScience Research Workshop used Microsoft Research DeepSpeed + NVIDIA Megatron-LM technologies to train the World's Largest Open Multilingual Language Model (BLOOM): huggingface.co/blog/bloom-meg…

What do Stability AI (Emad) #stablediffusion and BigScience Research Workshop's BLOOM, aka the coolest new models ;), have in common? They both use a new generation of ML licenses aimed at making ML more open and inclusive while making it harder to use the models for harm. So cool! huggingface.co/blog/open_rail