Kaj Bostrom

@alephic2

NLP geek with a PhD from @utcompsci, now @ AWS. I like generative modeling but not in an evil way I promise. Also at bsky.app/profile/bostro… He/him

ID: 1069745340444635136

https://bostromk.net 04-12-2018 00:08:58

102 Tweets

343 Followers

421 Following

LLMs are used for reasoning tasks in NL but lack explicit planning abilities. In arxiv.org/abs/2307.02472, we see if vector spaces can enable planning by choosing statements to combine to reach a conclusion. Joint w/ Kaj Bostrom, Swarat Chaudhuri & Greg Durrett. NLRSE workshop at #ACL2023NLP

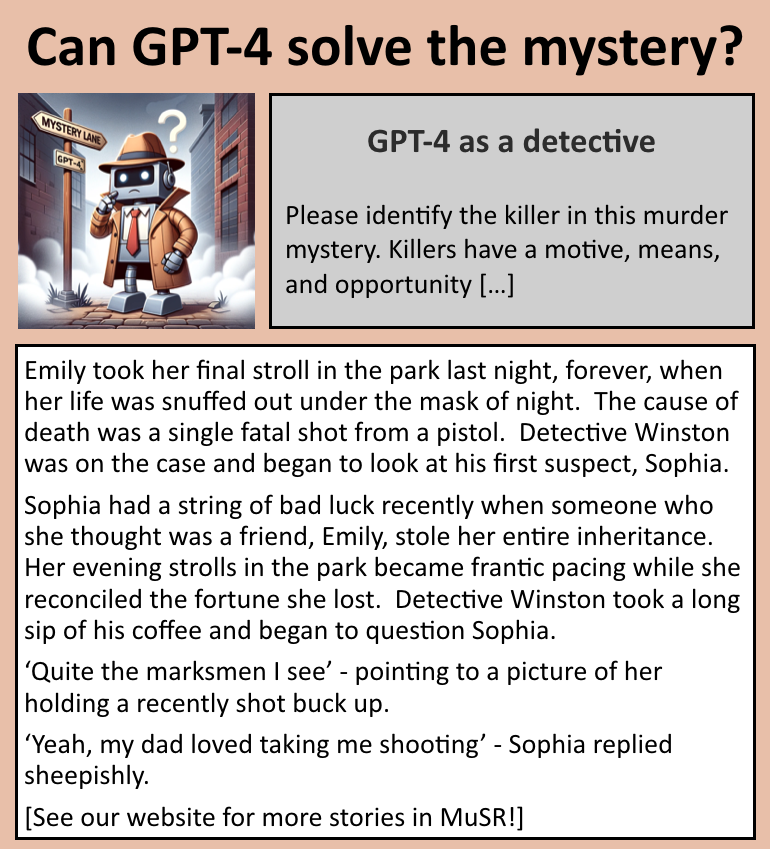

GPT-4 can write murder mysteries that it can’t solve. 🕵️ We use GPT-4 to build a dataset, MuSR, to test the limits of LLMs’ textual reasoning abilities (commonsense, ToM, & more) 📃 arxiv.org/abs/2310.16049 🌐 zayne-sprague.github.io/MuSR/ w/ Xi Ye, Kaj Bostrom, Swarat Chaudhuri, Greg Durrett

Super excited to bring ChatGPT Murder Mysteries to #ICLR2024 from our dataset MuSR as a spotlight presentation! A big shout-out goes to my coauthors, Xi Ye, Kaj Bostrom, Swarat Chaudhuri, and Greg Durrett. See you all there 😀