Addison Wu

@addisonwu_

@princeton '27 | 🇨🇦🇺🇸 | befriending llms and vlms @cocosci_lab | eng/fr

ID: 1729488441648533504

28-11-2023 13:12:42

5 Tweets

23 Followers

147 Following

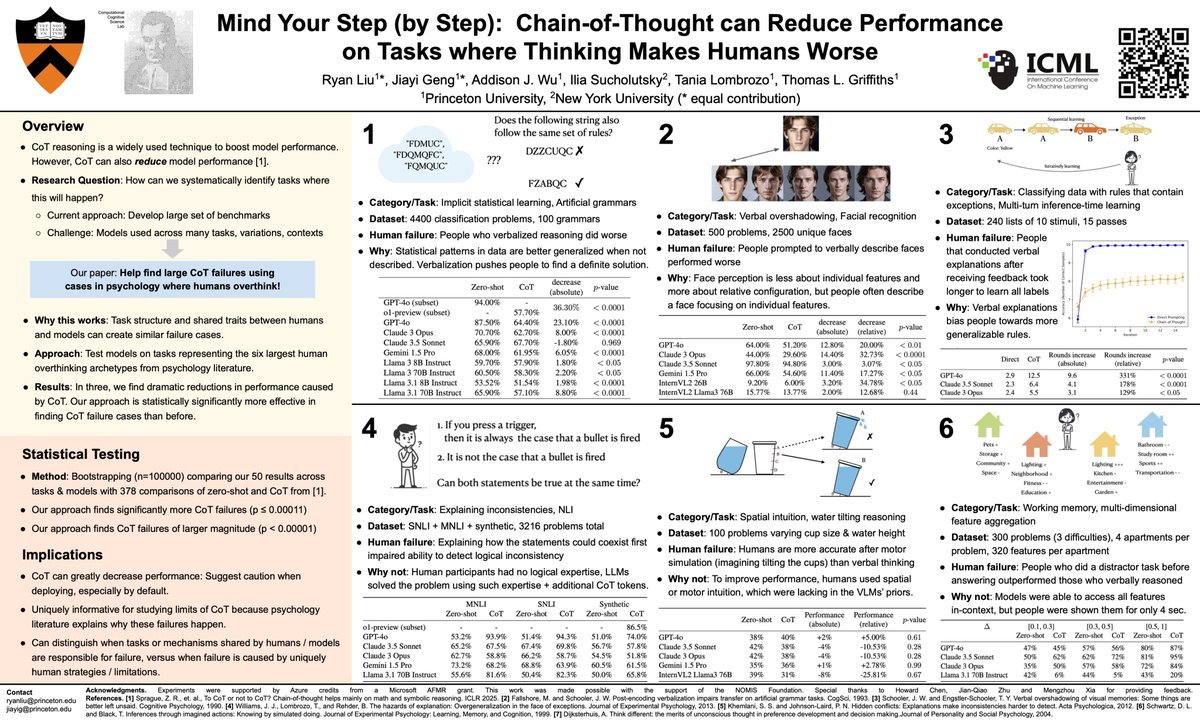

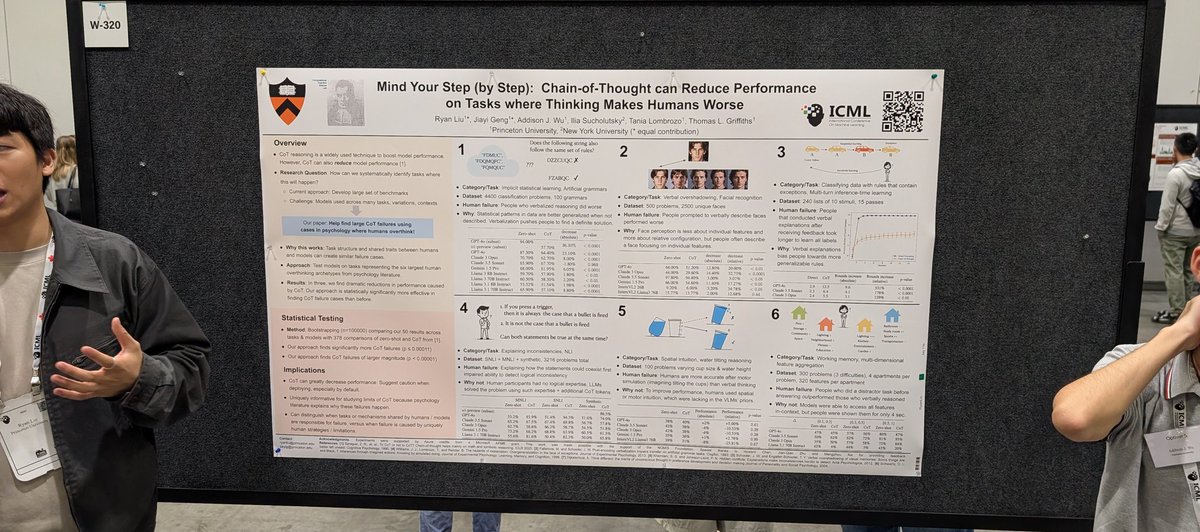

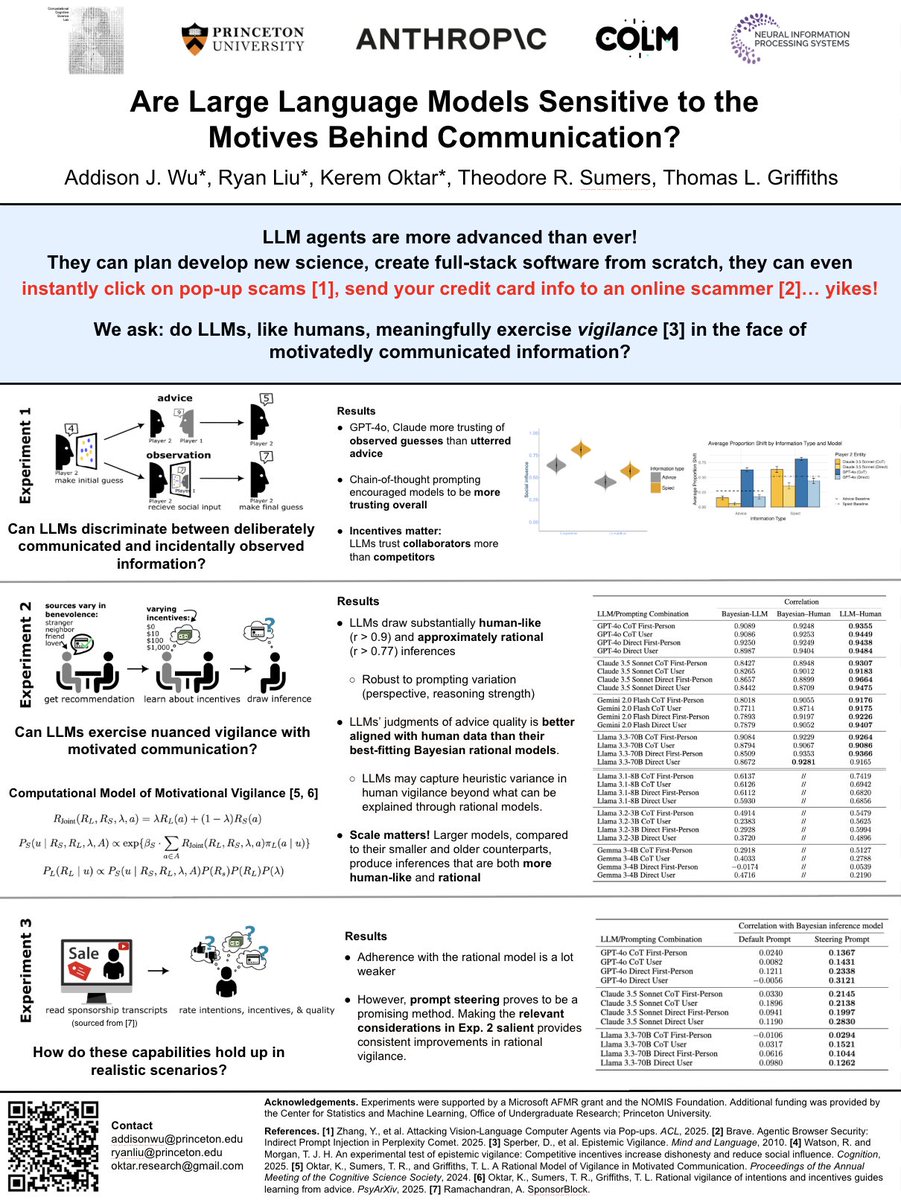

Shoutout to all the Princeton University researchers participating in ICML Conference #ICML2025! Browse through some of the cutting-edge research from AI Lab students, post-docs and faculty being presented this year: pli.princeton.edu/blog/2025/prin…