Alex Wettig

@_awettig

PhD@princeton trying to make sense of language models and their training data

ID: 1549104683955785728

https://www.cs.princeton.edu/~awettig/ 18-07-2022 18:52:37

167 Tweets

796 Followers

492 Following

Want state-of-the-art data curation, data poisoning & more? Just do gradient descent! w/ Andrew Ilyas, Ben Chen, Axel Feldmann, Billy Moses, Aleksander Madry: we show how to optimize final model loss wrt any continuous variable. Key idea: Metagradients (grads through model training)

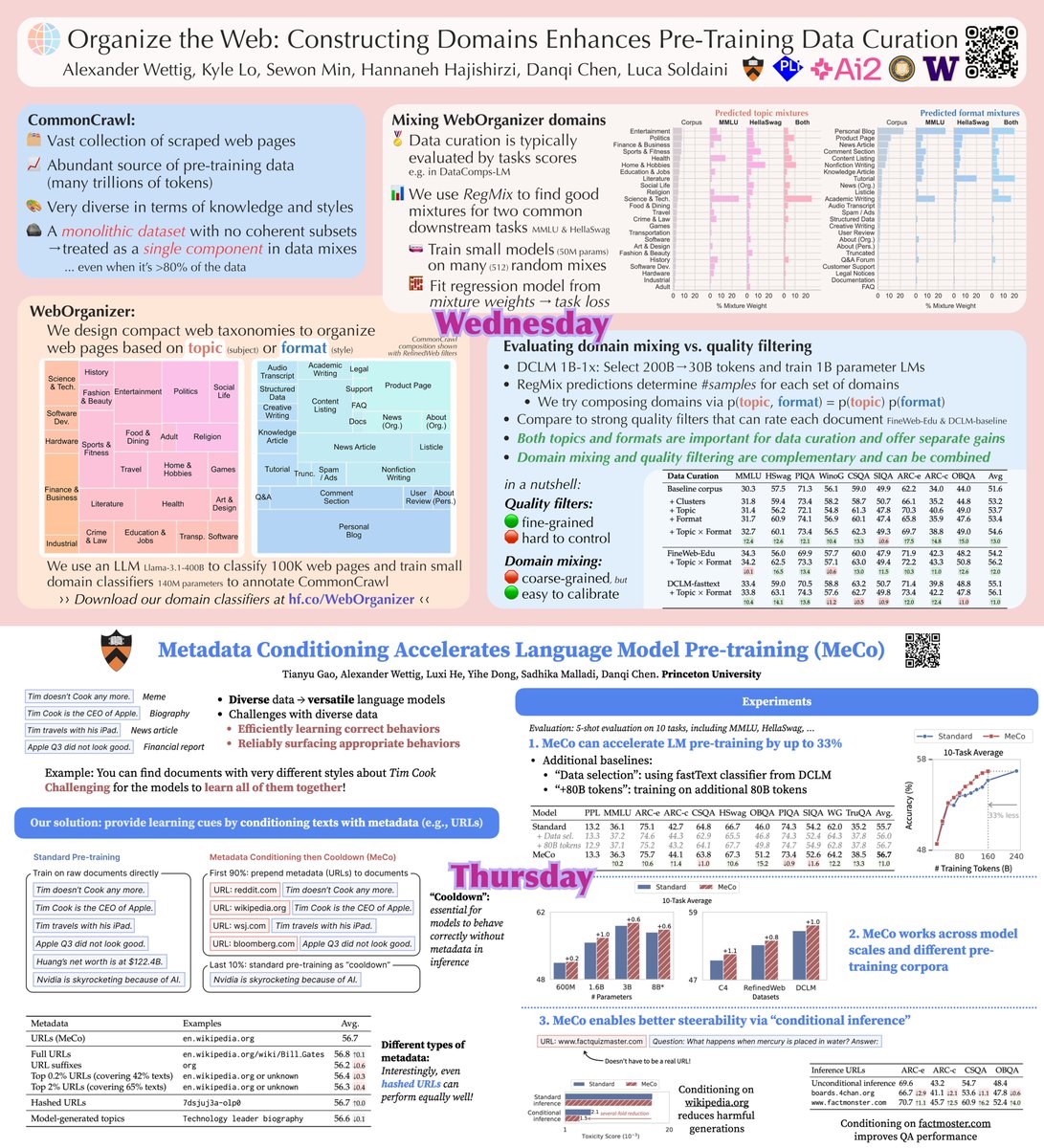

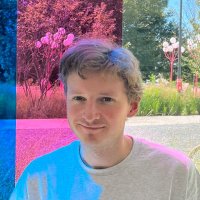

Presenting two posters at ICML over the next two days:
- Both at 11am - 1:30pm
- Both about how to improve pre-training with domains
- Both at stall # E-2600 in East Exhibition Hall A-B (!)

Tomorrow: WebOrganizer w/ Luca Soldaini 🚗 ICML 2025 & Kyle Lo @ ICML2025
Thursday: MeCo by Tianyu Gao