Ivan Fioravanti ᯅ

@ivanfioravanti

Co-founder and CTO of @CoreViewHQ GenAI/LLM addicted, Apple MLX, Ollama, Microsoft 365, Azure, Kubernetes, Investor in innovation

ID: 43874767

01-06-2009 12:21:31

23.23K Tweets

12.12K Followers

1.1K Following

Ivan Fioravanti ᯅ and now it's free to use omg x.com/YashasGunderia…

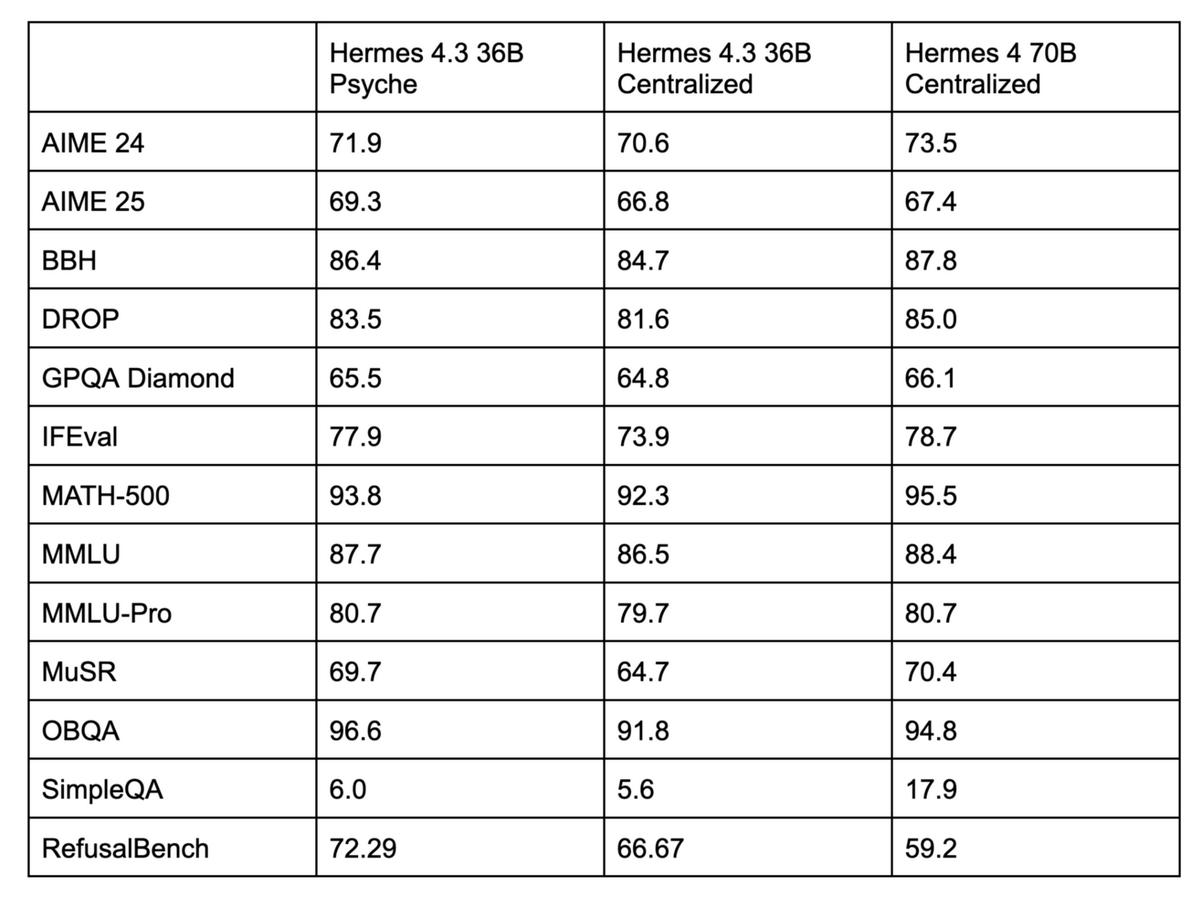

Always feels weird seeing the decentralized baseline outperform the centralized baseline. I vaguely remember something similar with DiLoCo when you don't fully decay the learning rate (not 100% sure about this). Any thoughts on why this is happening? Teknium (e/λ) emozilla Bowen Peng

This is a must-have for any MLX lover! And following Gökdeniz Gülmez is another one 🚀