Sanjeev Satheesh

@issanjeev

ID: 31904918

16-04-2009 15:41:02

1.1K Tweets

478 Followers

388 Following

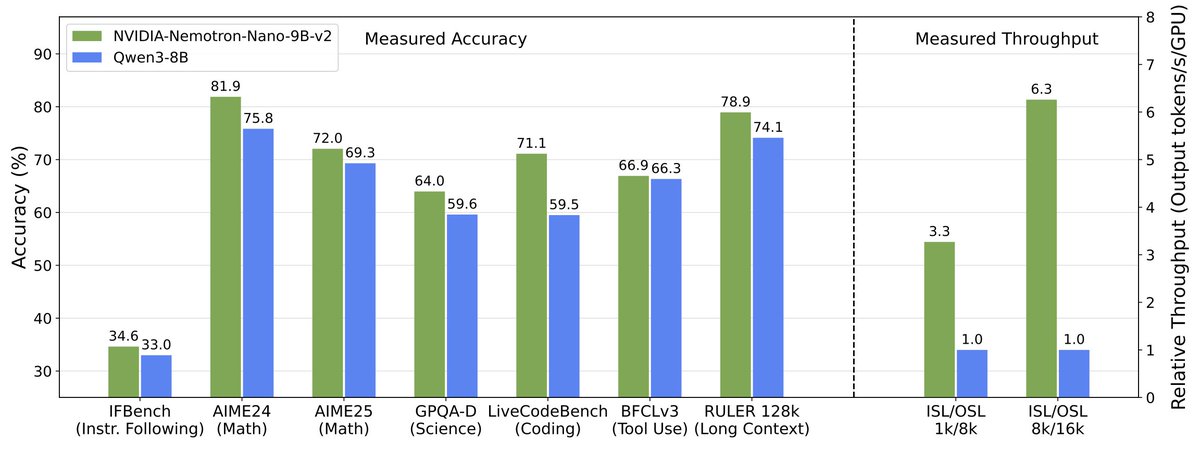

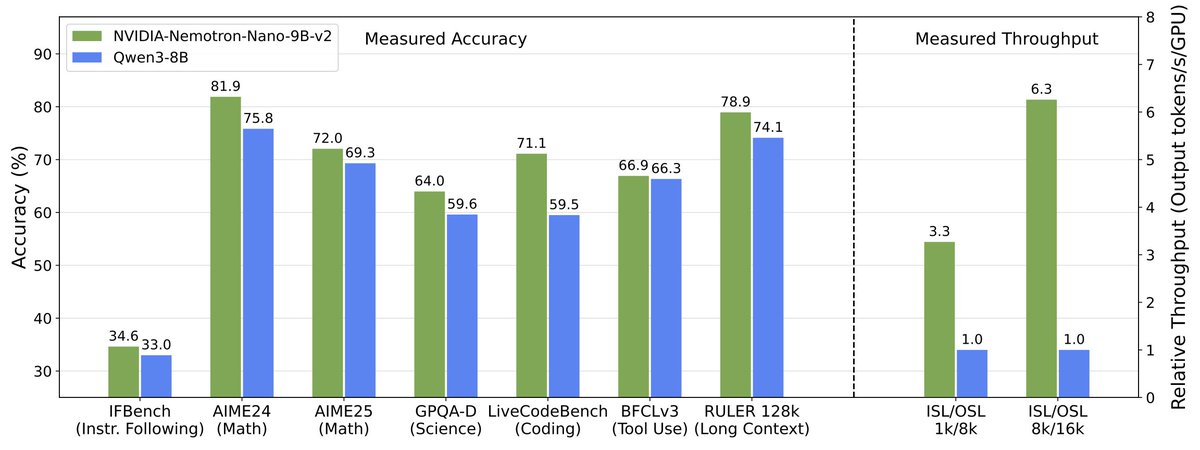

NVIDIA just released Nemotron Nano v2, a 9B hybrid SSM (Mamba) model that is 6x faster than similarly sized models while also being more accurate. It is ready for commercial use and all available on Hugging Face 💾 Nemotron Nano 2 is a 9B hybrid Mamba Transformer for fast reasoning, up to

It’s true: the rise of #AI is impacting jobs, at least for one very specific group of workers, while for tenured workers it signals a benefit. Using ADP payroll data, Erik Brynjolfsson and his team at the Stanford Digital Economy Lab found that employment among young adults whose work is exposed