Imperial NLP

@imperial_nlp

We are the Natural Language Processing community here at Imperial College London.

BFF with @EdinburghNLP

#NLProc

ID: 1767989744464486400

13-03-2024 19:03:16

72 Tweets

562 Followers

1.1K Following

It was a pleasure to host Nishant Balepur at Imperial College London this week!

Congrats to Mingxue (Mercy) Xu for this new work accepted to SLLM at ICLR 2025!

Many congratulations to Matthieu Meeus, Marek Rei, and coauthors for the best paper award at SaTML Conference 🎉

You can find me at ICLR 2025 in Singapore, where I’ll be presenting my work “Latent Representation Encoding and Multimodal Biomarkers for Post-Stroke Speech Assessment” on Sunday 27th, Hall 4 #6 and on Monday 28th, Peridot 201&206 🥳 ICLR 2026 Imperial NLP Imperial EEE

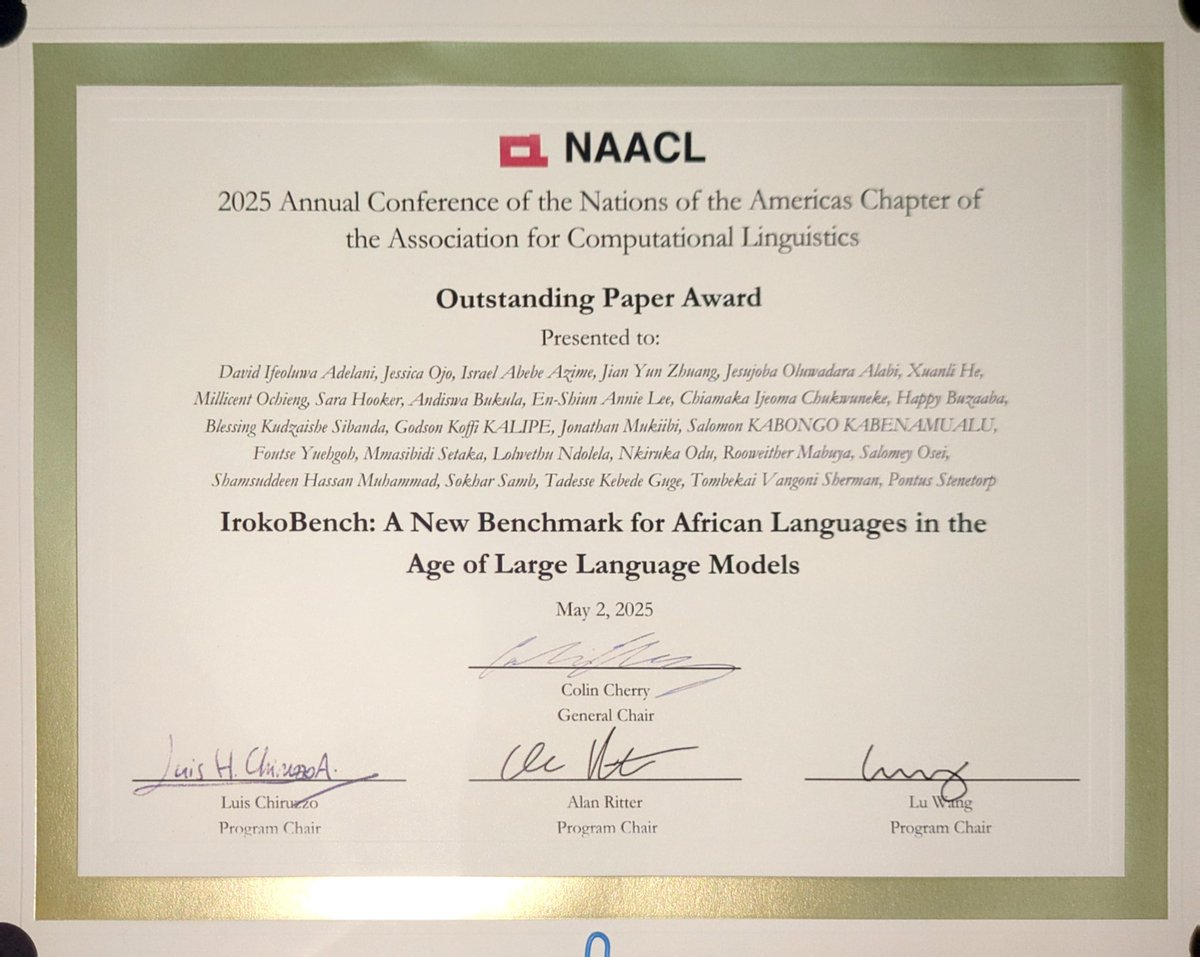

Congratulations to the authors of IrokoBench, funded by Lacuna Fund, for winning one of the Outstanding Paper Awards at #NAACL2025 with Happy B. Kasumba, Shamsuddeen Hassan Muhammad, PhD, Israel Abebe | እስራኤል አበበ, Rooweither Mabuya, and Andie Bukula

Happening Thursday at Imperial College London. imperial.ac.uk/events/193952/…

(1/9) LLMs can regurgitate memorized training data when prompted adversarially. But what if you *only* have access to synthetic data generated by an LLM? In our ICML Conference paper, we audit how much information synthetic data leaks about its private training data 🐦🌬️