Niðal نضال

@imleslahdin

What's the Kolmogorov Complexity of Small Language Models?

DM me papers on LLMs, AI/ML, Data Science. Doing a PhD. Not so serious. Nidhal Selmi.

ID: 3227845866

http://nselmi.com 27-05-2015 05:28:13

19.19K Tweets

1.1K Followers

1.1K Following

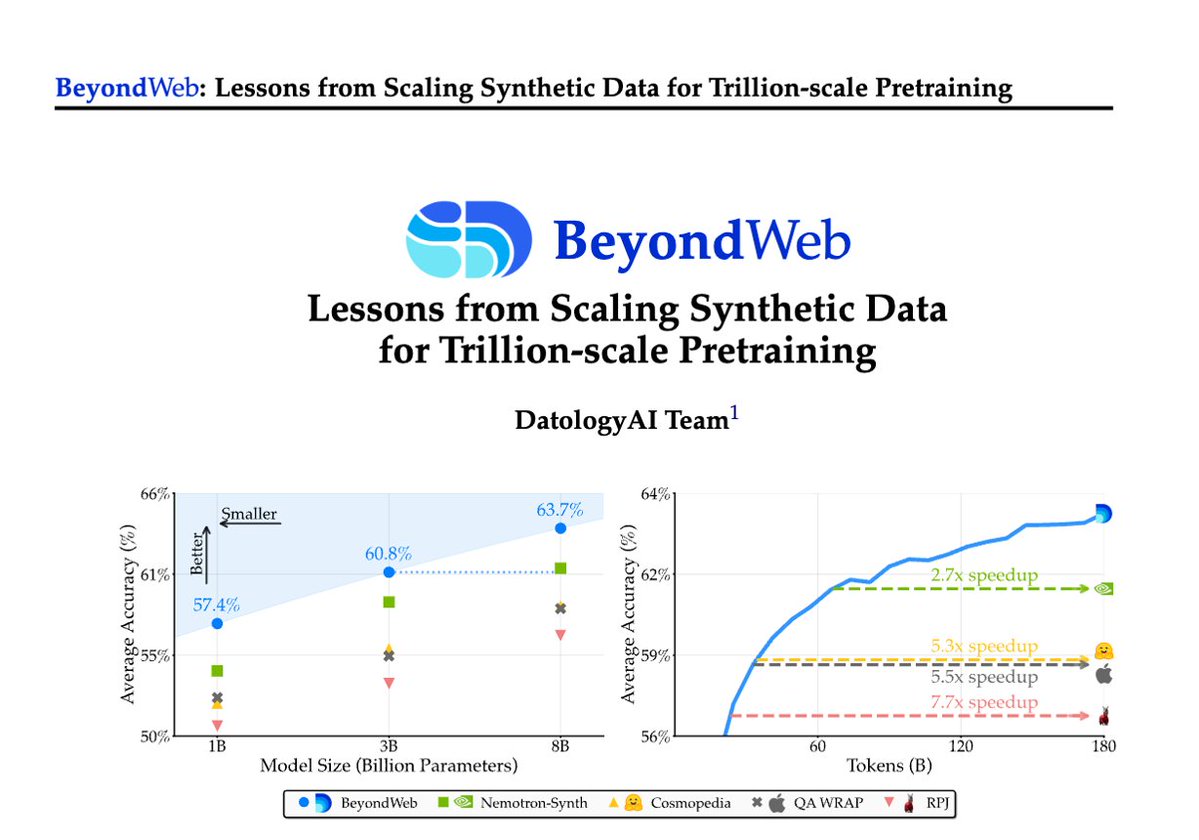

1/ Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach & all the learnings from scaling it to trillions of tokens 🧑🏼‍🍳
- 3B LLMs beat 8B models 🚀
- Pareto frontier for performance