Hunter Lang

@hunterjlang

phd student at @MIT_CSAIL working in self/weak supervision, nlp with @David_Sontag. he/him

ID: 959854738023047168

https://web.mit.edu/~hjl/www/ 03-02-2018 18:22:57

88 Tweets

317 Followers

325 Following

Can AI tell us when it can predict better than humans and when humans are better? In our AISTATS23 (oral) paper with MIT-IBM Watson AI Lab, we build AI models that 1) complement humans and 2) can defer to humans when necessary: arxiv.org/abs/2301.06197

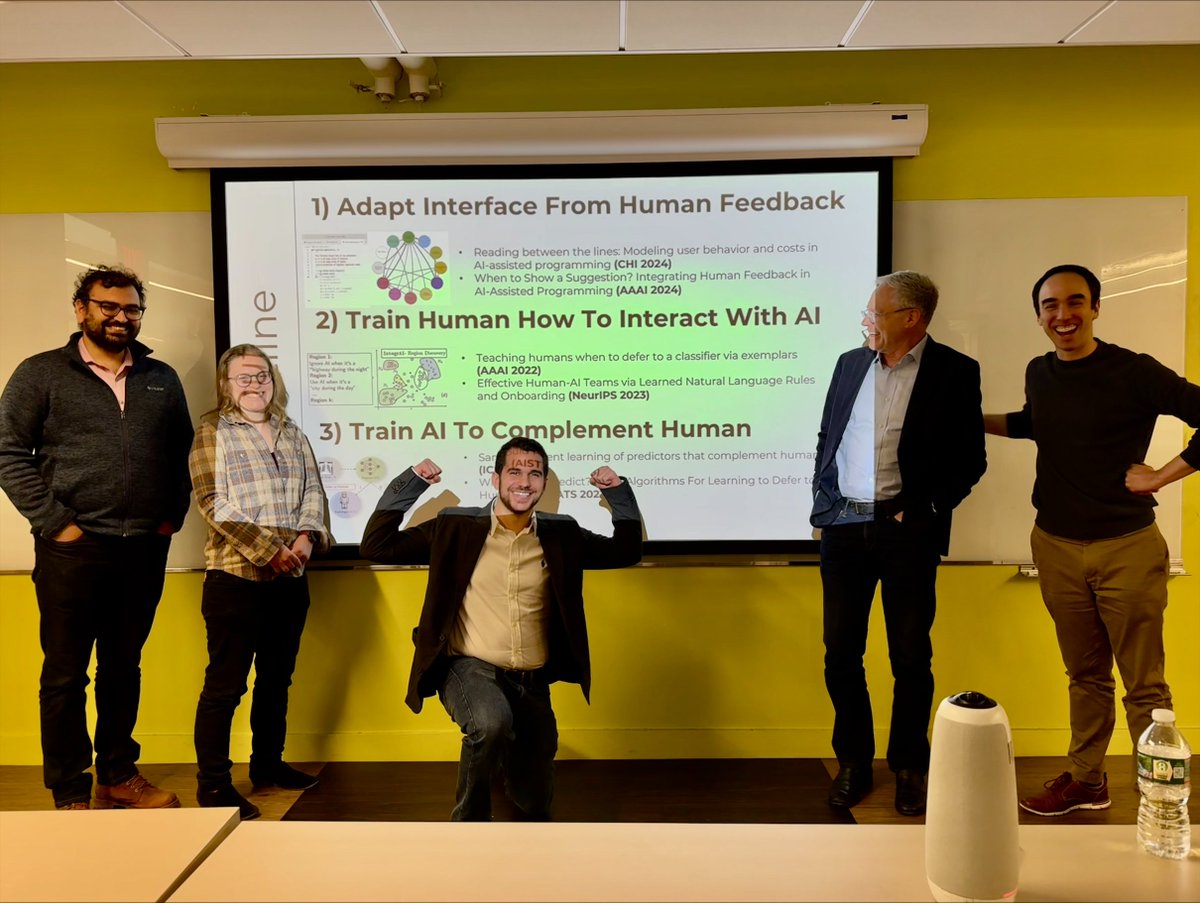

So happy to defend my PhD thesis and couldn't have done it without a 1 of 1 advisor, David Sontag, and an incredible committee: Eric Horvitz, Arvind Satyanarayan, and Elena Glassman

The "primary area" field on NeurIPS Conference OpenReview has no good option for work on unsupervised or semi-supervised learning?