Humphrey Shi

@humphrey_shi

Associate Professor @GeorgiaTech | Building high-performance multimodal AI systems to empower creativity in the service of humanity.

ID: 540786666

https://www.humphreyshi.com

30-03-2012 10:20:29

328 Tweets

2.2K Followers

36 Following

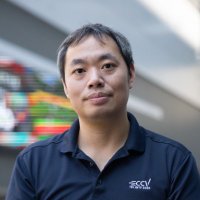

Great work by Google DeepMind on Matryoshka Quantization! Back in 2019, we introduced Any-Precision DNNs, which let a single deep learning model dynamically support any bit-width without retraining. Excited to see how these ideas help Gemini/LLMs! arxiv.org/abs/1911.07346

Thrilled to share this story covering our collaboration with Project Aria at Meta Reality Labs! Human data is robot data in disguise. Imitation learning is human modeling. We are at the beginning of something truly revolutionary, both for robotics and for human-level AI beyond language.

Thanks for featuring our work, Aran Komatsuzaki! 🔥 Today we are thrilled to announce our MSR flagship project Magma! This is a fully open-source project. We will roll out everything (code, model, and training data) over the following days. Check out our full work here:

Congrats to Profs. Barto & Sutton on the Turing Award! 🎉 Barto’s journey is inspiring. I happen to be teaching the McCulloch-Pitts neuron to hundreds of Georgia Tech undergrads today in my computer vision class. Makes me wonder what breakthroughs our next-gen leaders will achieve in 50 years 🚀

Video/Physics Generative AI was bottlenecked by diffusion runtime: a 5s clip used to take minutes. My student Ali Hassani (Georgia Tech Computing) helped scale the full 35-step Cosmos 7B DiT 40× to real-time on Blackwell NVL72, in collaboration with Ming-Yu Liu’s team at NVIDIA. Congrats, and this is just the beginning! 🐝🚀

One year ago, Abhishek Das and I left Meta to start Yutori. Ten months ago, Dhruv Batra joined us :) Nine months ago, we crystallized our vision. Two months ago, we released a sneak peek into what we’ve been building. Today, we couldn’t be more excited to fully unveil Yutori’s

Check out Slow-Fast Video MLLM: a new paradigm that empowers multimodal LLMs with longer video context and finer spatial detail! 🎥🧠 🔗 github.com/SHI-Labs/Slow-… Led by 🐝 Min Min Shi from Georgia Tech Computing, in collaboration with Zhiding Yu (NVIDIA) and others 🤝

Impressed by FramePack from style2paints & Maneesh Agrawala! Their table puts our StreamingT2V (Mar 2024) at #2 overall and 🥇 in motion (99.96%). A nice reminder that memory blocks still matter, and may fruitfully complement token-compression and other approaches for marathon vids! 🏃

A paper from my PhD students, nearly a year of work, was rejected by the ICML Conference despite 4 weak accepts, citing “calibration with other submissions.” Still incredibly proud of my students. To young researchers: rejections happen. Keep learning, keep going. The real judge is within.