Harri Edwards

@harriledwards

Previously @OpenAI (Reasoning, Superalignment)

ID: 53885771

05-07-2009 10:41:09

59 Tweets

294 Followers

215 Following

Nice article by James Vincent on OpenAI's new exploration + RL results. theverge.com/2018/11/1/1805…

My latest blog post on meta-learning in general and "How to train your MAML" in particular is now out. bayeswatch.com/2018/11/30/HTY… The post thoroughly explains MAML and some of its problems, and proposes some solutions. In addition, it visualizes the learned per-step, per-layer learning rates.

RL agents get specific to tasks they are trained on. What if we remove the task itself during training? Turns out, a self-supervised planning agent can both explore efficiently & achieve SOTA on test tasks w/ zero or few samples in DMControl from images! ramanans1.github.io/plan2explore

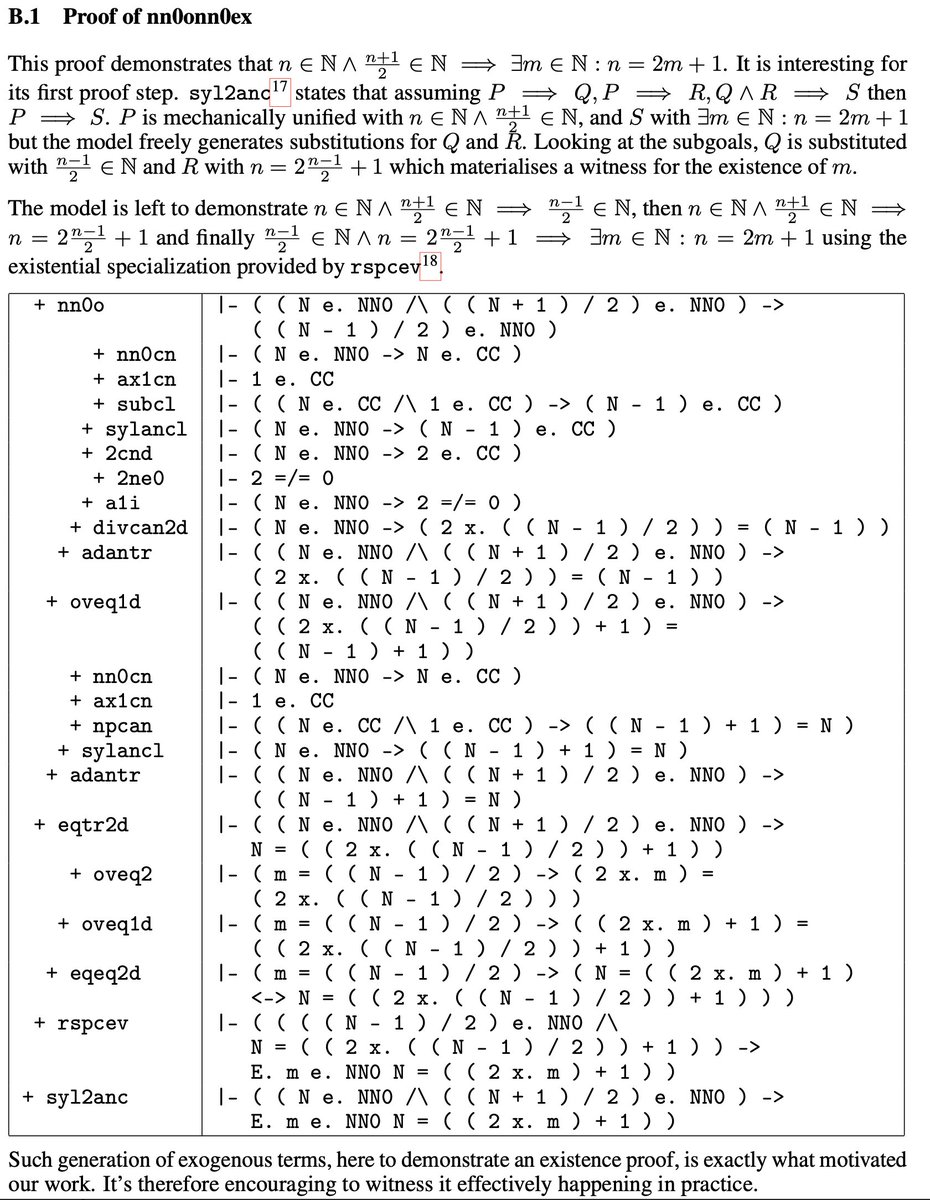

In September 2020 Quanta Magazine wrote about researchers trying to build an AI system that can achieve a gold-medal score at the IMO. New work by Stanislas Polu and co. at OpenAI takes another step in that direction. openai.com/blog/formal-ma…