Ge Zhang

@gezhang86038849

M-A-P @mm_art_project, SEED ByteDance, TigerLab

Prev. 01, BAAI

MERT, MAP-Neo, MMMU, OpenCI, MAmmoTH, MMLU-pro, Yi, COIG, YuE, SuperGPQA

ID: 1387004918255419395

27-04-2021 11:25:25

765 Tweets

2.2K Followers

898 Following

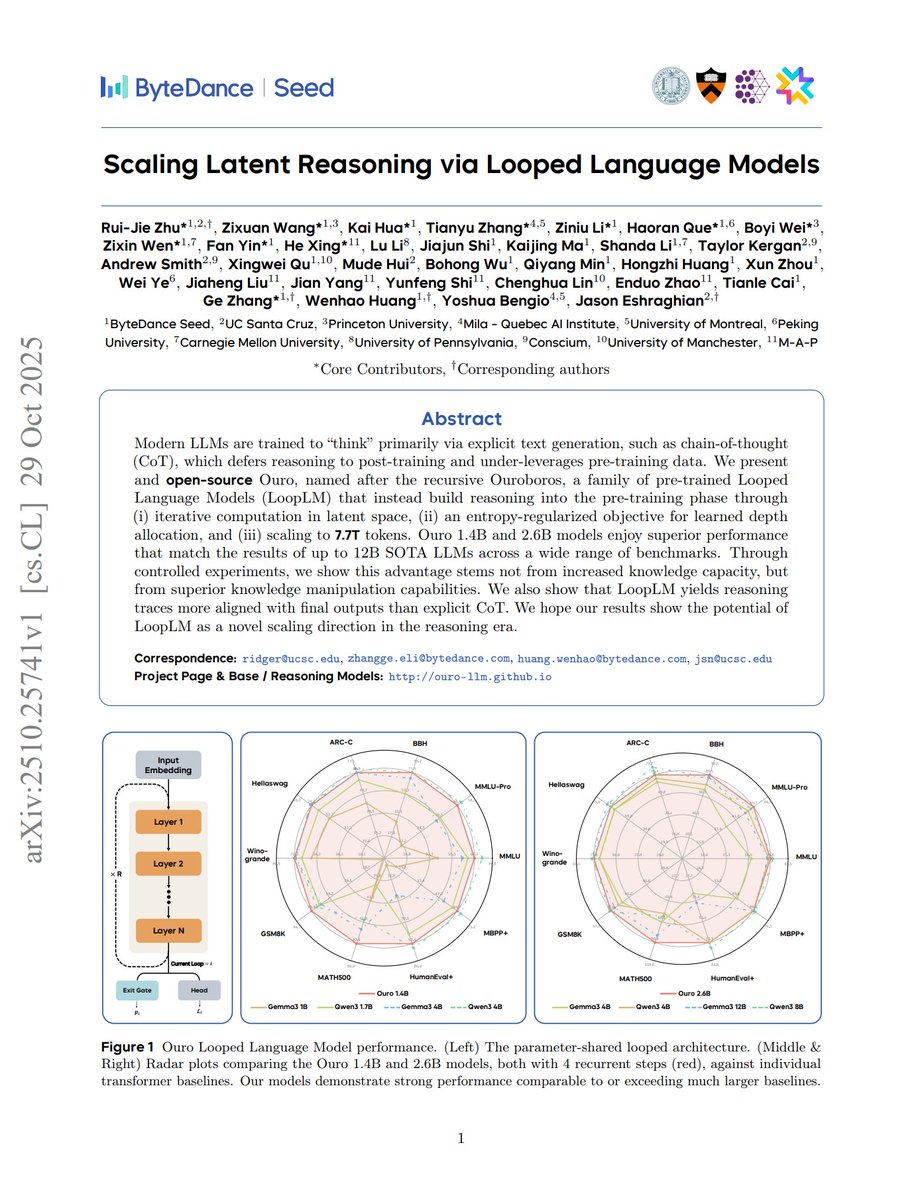

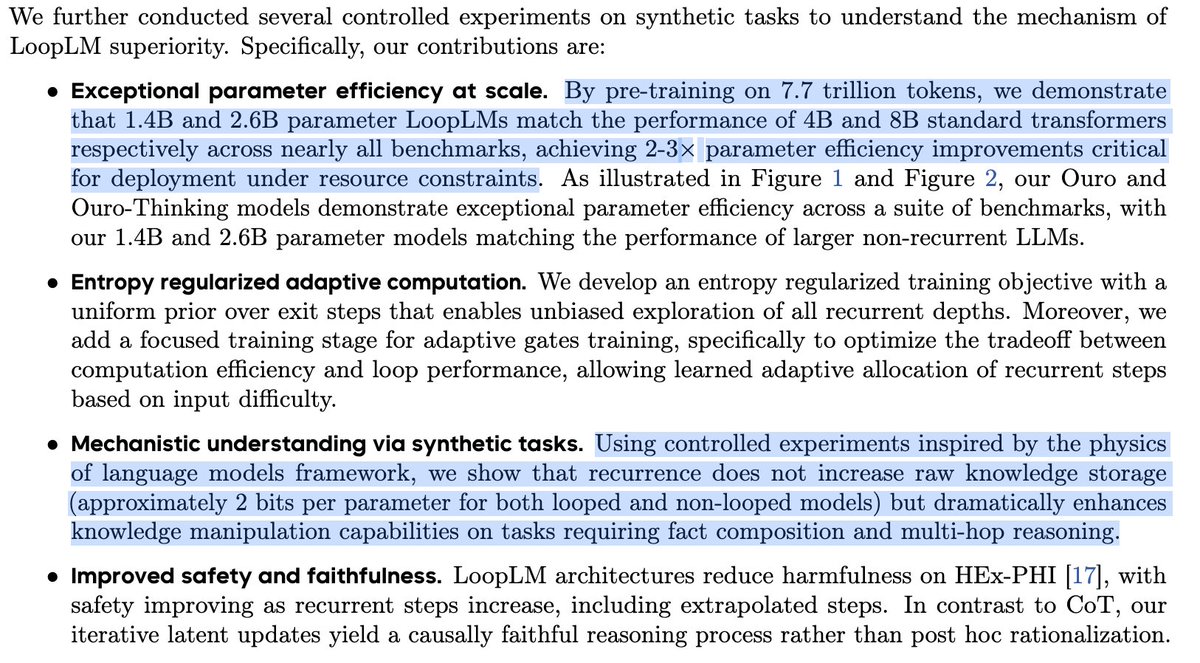

The Rise of Looped Language Models! Check out Rui-Jie (Ridger) Zhu's amazing work! Congrats to the whole team!

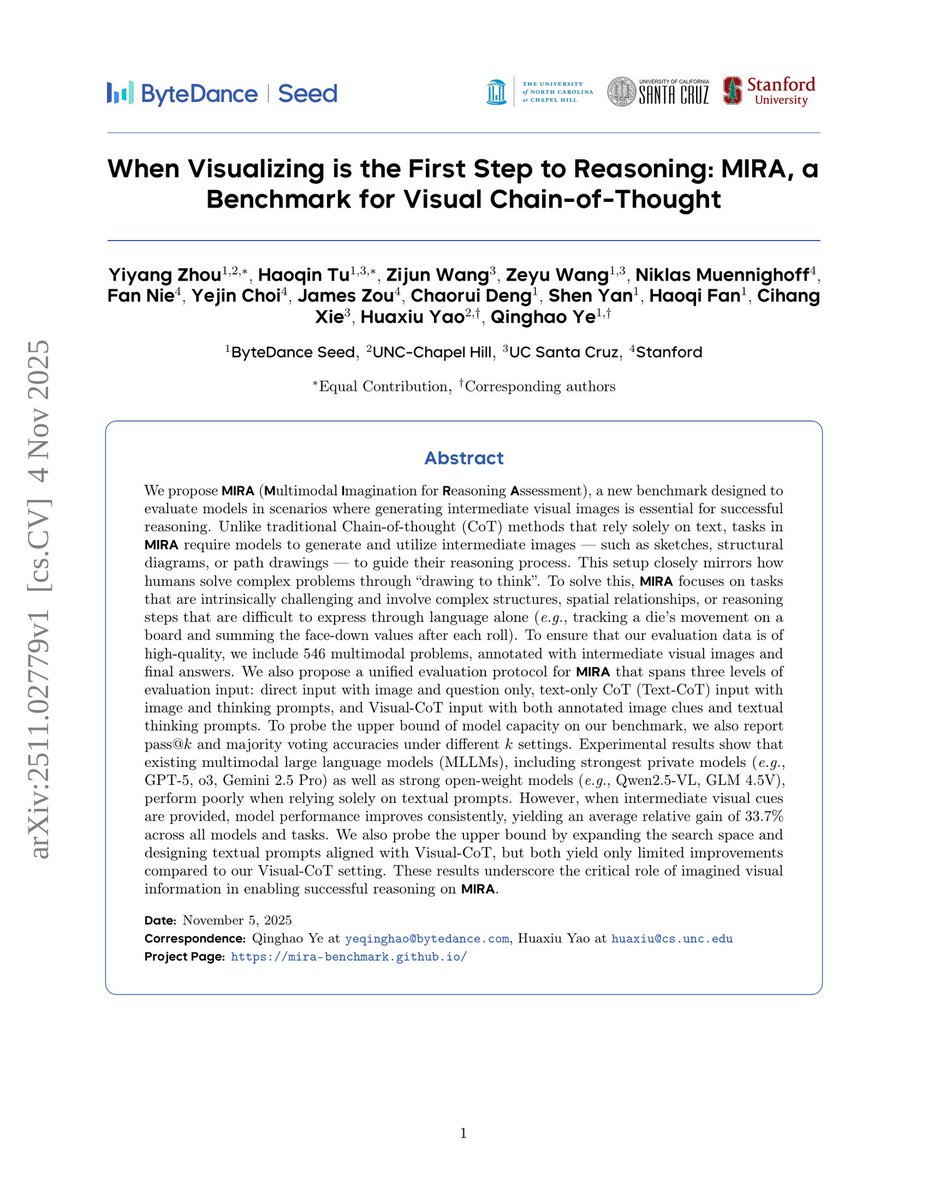

Congrats to Zeng Zhiyuan on the amazing work! We keep pursuing methods that are simple yet efficient, and therefore scalable, in both model architecture and RL. Just loop your RL to get more stability!