Gene Chou

@gene_ch0u

CS PhD student @Cornell; previously Princeton '22

ID: 1582075953169285138

http://genechou.com 17-10-2022 18:28:12

48 Tweets

232 Followers

240 Following

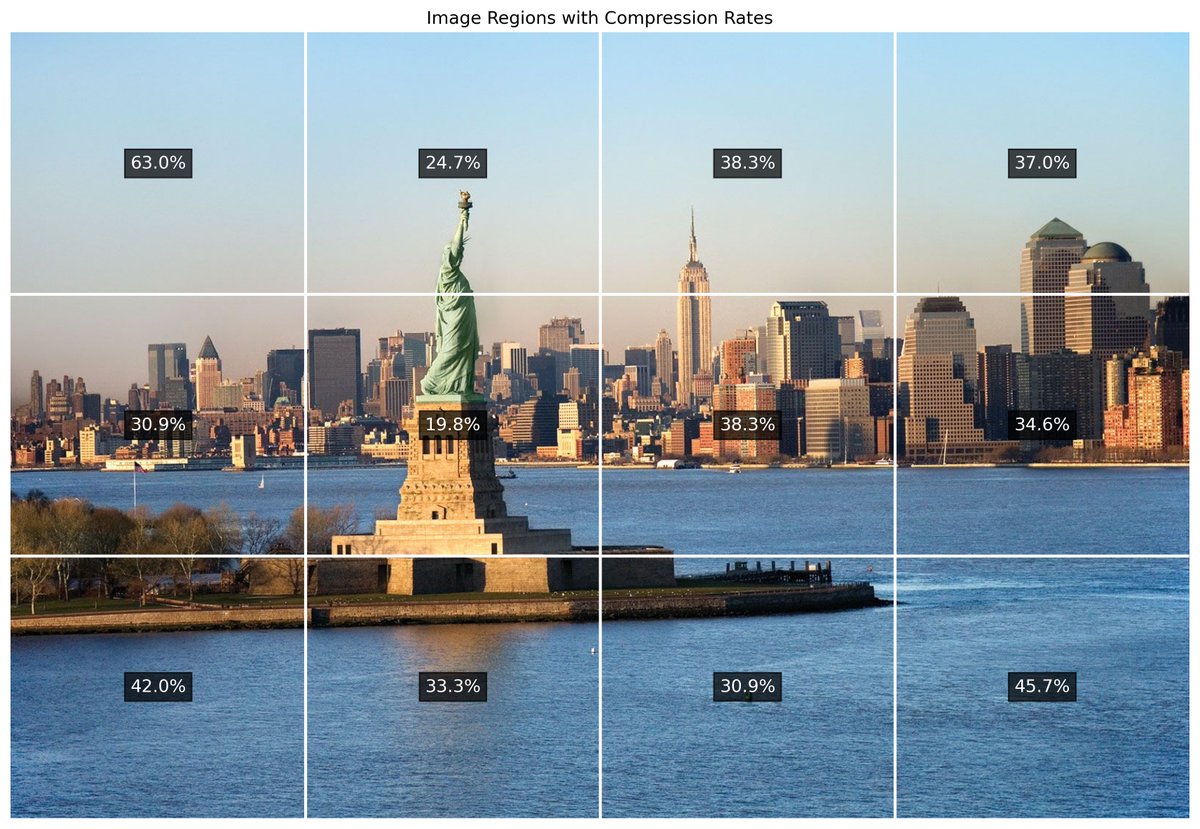

How can we use wide-FOV cameras for reconstruction? We propose self-calibration Gaussian Splatting, which jointly optimizes camera parameters, lens distortion, and 3D Gaussian representations to reconstruct directly from a set of wide-angle captures. Project page: denghilbert.github.io/self-cali/