Ferdinand Schlatt

@fschlatt1

PhD Student, efficient and effective neural IR models 🧠🔎

ID: 924666754055499776

29-10-2017 15:58:28

70 Tweets

137 Followers

199 Following

🚨 New Pre-Print! 🚨 Reviewer 2 has once again asked for DL’19. What can you say in rebuttal? We have re-annotated DL’19 in the form of classic evaluation stability studies. Work done with Maik Fröbe, Harry Scells, Ferdinand Schlatt, Guglielmo Faggioli, Saber Zerhoudi, Sean MacAvaney, and Eugene Yang 🧵

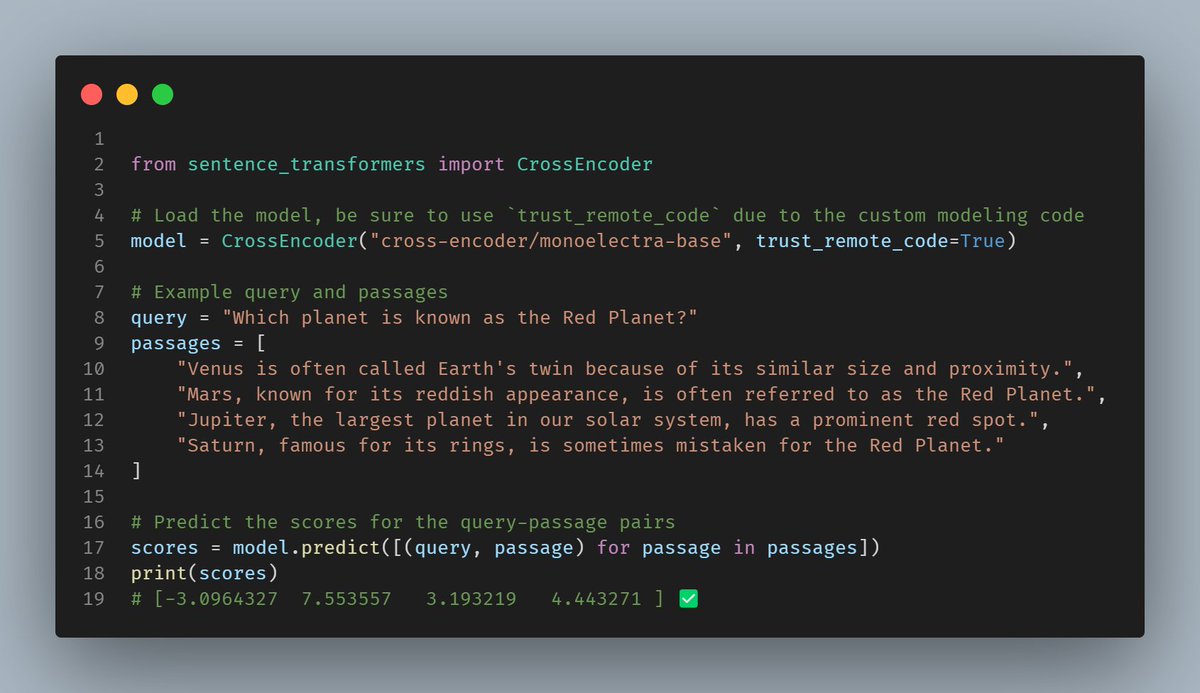

I've just ported the excellent monoELECTRA-{base, large} reranker models from Ferdinand Schlatt & the research network Webis Group to Sentence Transformers! These models were introduced in the Rank-DistiLLM paper, and distilled from LLMs like RankZephyr and RankGPT4. Details in 🧵

Next up at #ECIR2025: Maik Fröbe presenting his fantastic work on corpus subsampling and how to evaluate retrieval systems more efficiently

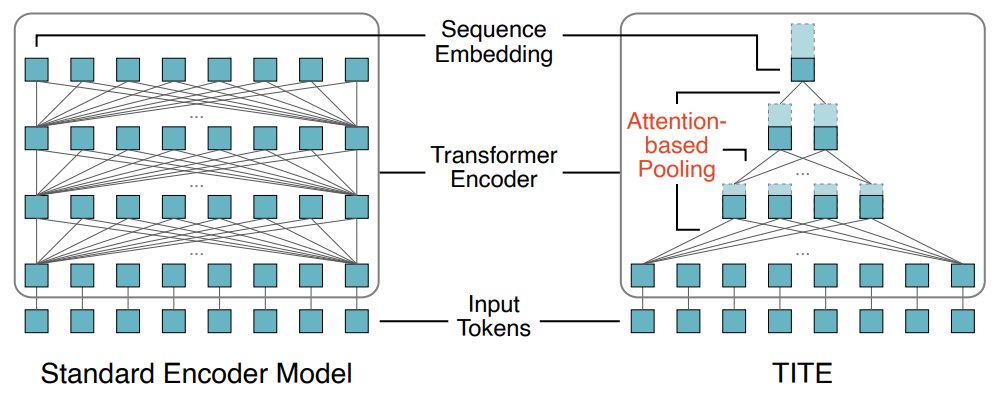

It was a really pleasant surprise to learn that our paper “Efficient Constant-Space Multi-Vector Retrieval” (aka ConstBERT), co-authored with Sean MacAvaney and Nicola Tonellotto, received the Best Short Paper Honourable Mention at ECIR 2025! #ECIR2025 #IR #Pinecone

Thank you, Carlos Lassance, for the shout-out of Lightning IR in the LSR tutorial at #SIGIR2025! If you want to fine-tune your own LSR models, check out our framework at github.com/webis-de/light…

Now it’s Andrew Parry presenting the reproducibility efforts of a large team of researchers on the shelf life of test collections #sigir2025