francesco croce

@fra__31

Postdoc @tml_lab PhD @uni_tue

ID: 1133088397273313282

https://fra31.github.io/ 27-05-2019 19:11:40

67 Tweets

274 Followers

225 Following

We are announcing the winners of our Trojan Detection Competition on Aligned LLMs!! 🥇 TML Lab (EPFL) (francesco croce, Maksym Andriushchenko and Nicolas Flammarion) 🥈 Krystof Mitka 🥉 @apeoffire 🧵 With some of the main findings!

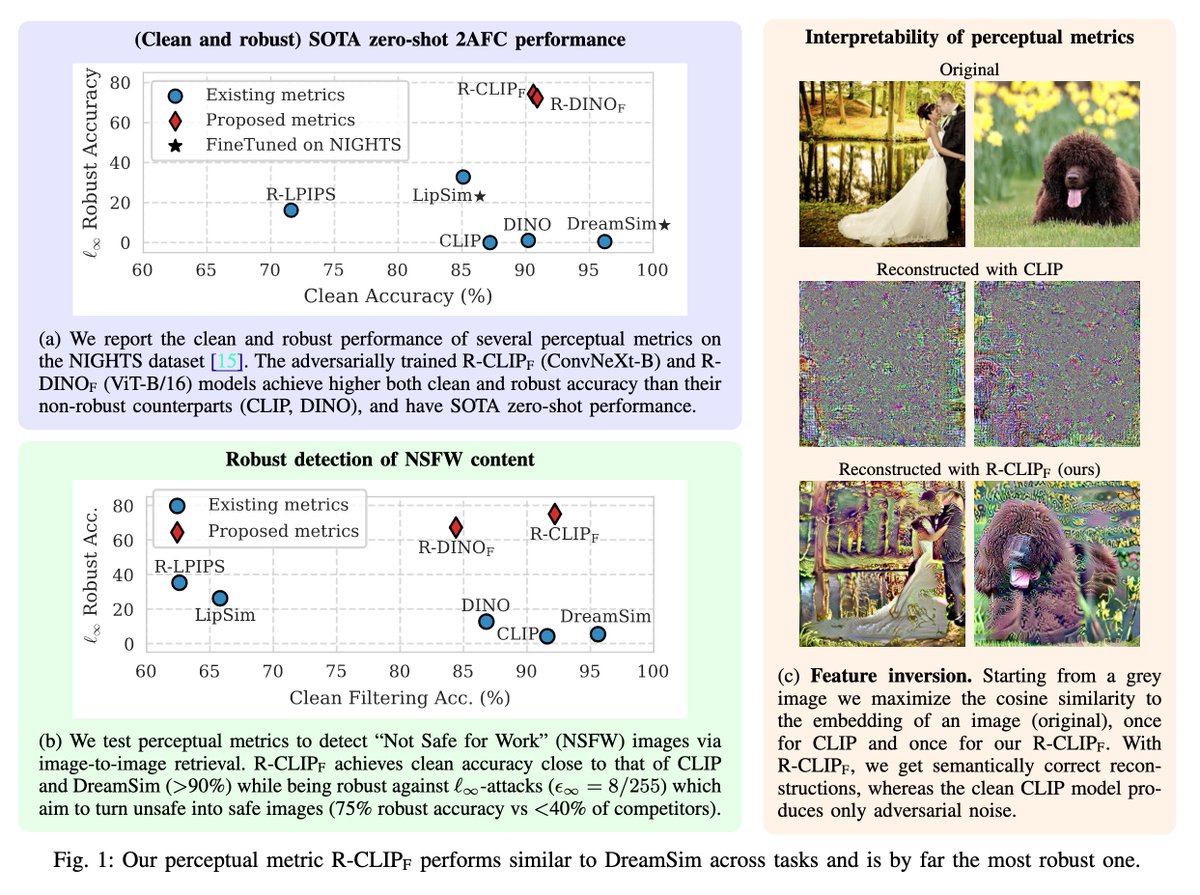

📢 Robustness is not always at odds with accuracy! We show that adversarially robust vision encoders improve clean and robust accuracy over their base models in perceptual similarity tasks. Looking forward to presenting at the SaTML Conference in Copenhagen next week 🇩🇰

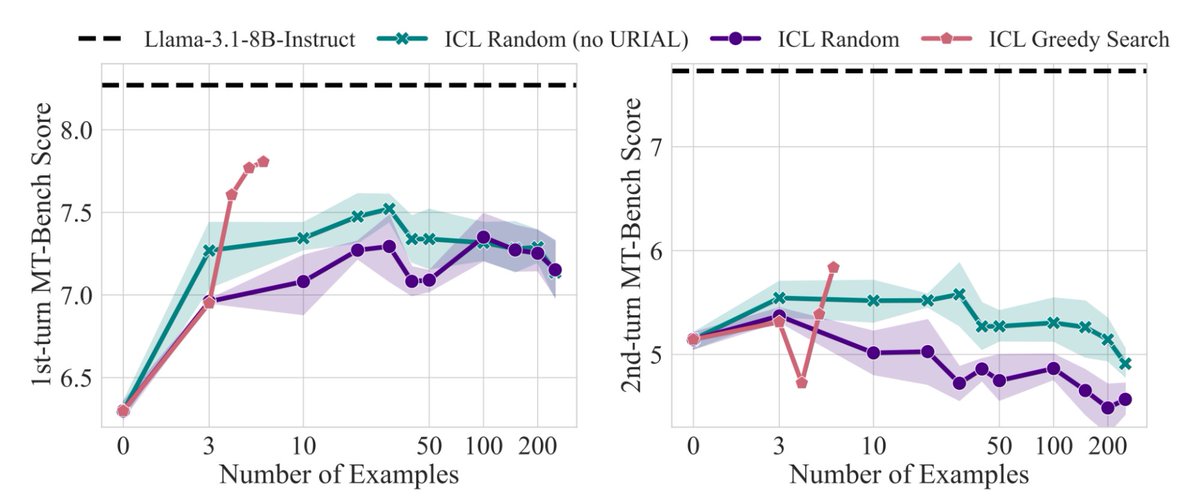

🚀 Join the 2𝐧𝐝 𝐖𝐨𝐫𝐤𝐬𝐡𝐨𝐩 𝐨𝐧 𝐓𝐞𝐬𝐭-𝐓𝐢𝐦𝐞 𝐀𝐝𝐚𝐩𝐭𝐚𝐭𝐢𝐨𝐧: 𝐏𝐮𝐭𝐭𝐢𝐧𝐠 𝐔𝐩𝐝𝐚𝐭𝐞𝐬 𝐭𝐨 𝐭𝐡𝐞 𝐓𝐞𝐬𝐭! (𝐏𝐔𝐓) - #ICML2025 ICML Conference 🎯Keywords - Adaptation, Robustness, etc ⌛ Paper Deadline: May 19, 2025 📌 tta-icml2025.github.io

🚨HOLD UP! You don't wanna be missing talks by some top researchers out there! These talks are where the magic happens. Grab your seat and get ready to level up! 🔥 Kate Saenko, Xiaoxiao Li, Deepak Pathak, Shuaicheng Niu, Rahaf Aljundi, and Gao Huang! 🔗tta-icml2025.github.io

📢❗[ICML 2024 Oral] We introduce FARE: A CLIP model that is adversarially robust in zero-shot classification and enables robust large vision-language models (LVLMs)

Paper: arxiv.org/abs/2402.12336

Code: github.com/chs20/RobustVLM

Huggingface: huggingface.co/collections/ch…

🧵1/n

![Christian Schlarmann (@chs20_) on Twitter photo](https://pbs.twimg.com/media/GPy3-2lWwAAU9c1.jpg)