Felix Dangel

@f_dangel

Postdoc at the Vector Institute

ID: 1432066673432006662

29-08-2021 19:45:03

8 Tweets

148 Followers

70 Following

Which plane would you board? [#NeurIPS2021] Cockpit: Practical trouble-shooting of DNN training. Empowered by recent advances in autodiff. In collaboration with Frank Schneider & @PhilippHennig5.

![Felix Dangel (@f_dangel) on Twitter photo: Which plane would you board?](https://pbs.twimg.com/media/FEAUgAeXwAItKhk.jpg)

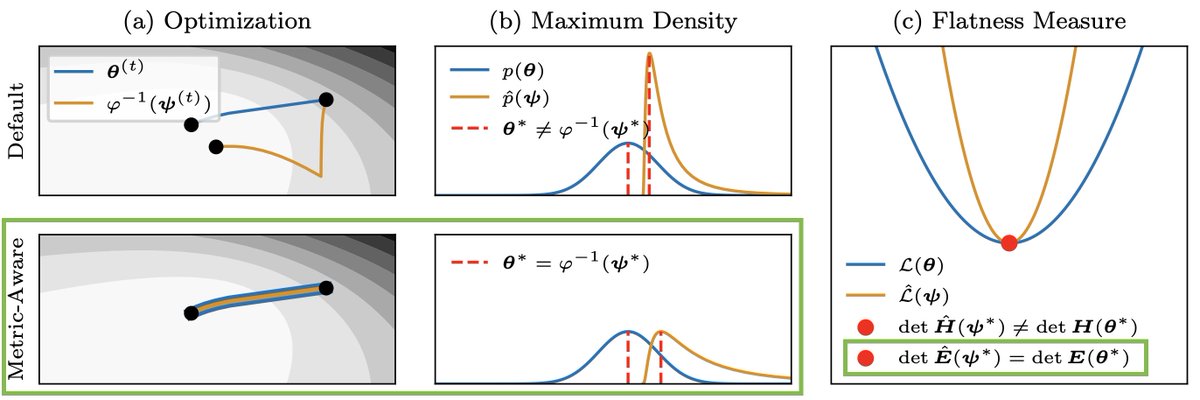

The consensus in deep learning is that many quantities are not invariant under reparametrization. Our #NeurIPS2023 paper shows that they actually are if the implicitly assumed Riemannian metric is taken into account 🧵 arxiv.org/abs/2302.07384 w/ Felix Dangel and @PhilippHennig5

For the first time, we (with Felix Dangel, Runa Eschenhagen, Kirill Neklyudov, Agustinus Kristiadi, Richard E. Turner, Alireza Makhzani) propose a sparse 2nd-order method for large NN training with BFloat16 and show its advantages over AdamW. Also at the @NeurIPS workshop on Opt for ML. arxiv.org/abs/2312.05705 /1

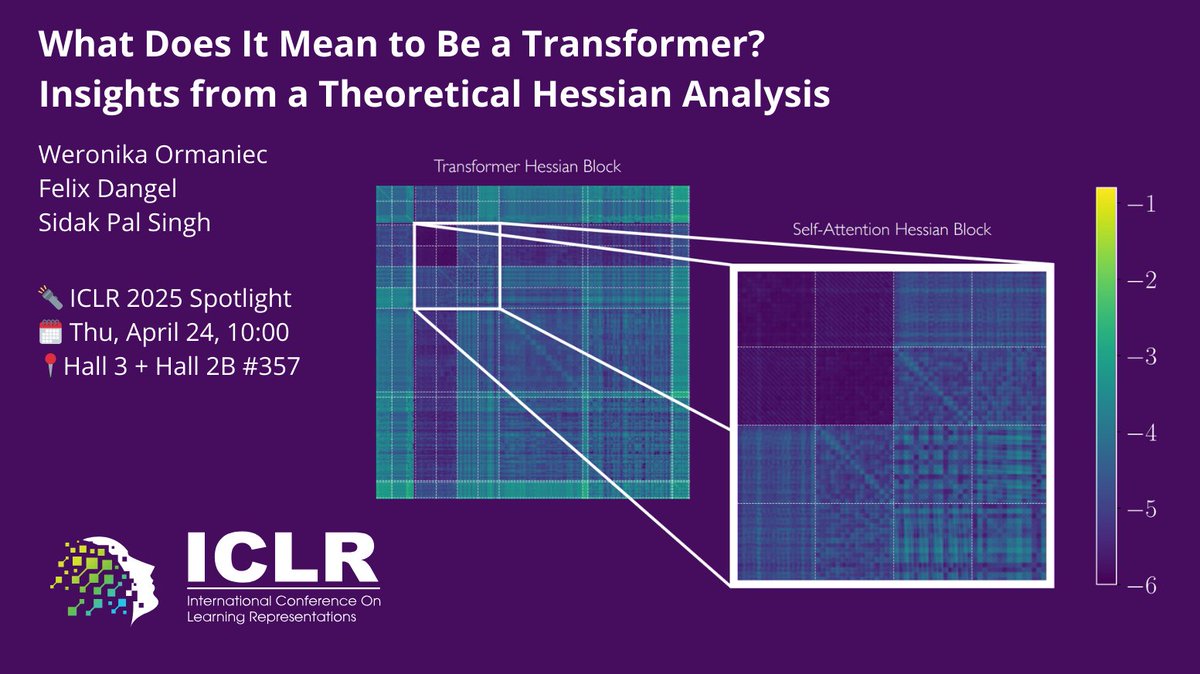

Ever wondered how the loss landscape of Transformers differs from that of other architectures? Or which Transformer components make its loss landscape unique? With Sidak Pal Singh & Felix Dangel, we explore this via the Hessian in our #ICLR2025 spotlight paper! Key insights👇 1/8

KFAC is everywhere, from optimization to influence functions. While the intuition is simple, implementation is tricky. We (Bálint Mucsányi, Tobias Weber, Runa Eschenhagen) wrote a ground-up intro with code to help you get it right. 📖 arxiv.org/abs/2507.05127 💻 github.com/f-dangel/kfac-…