Evan Miller

@evmill

Statistically inclined software developer, occasional blogger about math + stats stuff. Working on evals @AnthropicAI

ID: 42923034

https://www.evanmiller.org/ 27-05-2009 16:55:16

1.1K Tweets

5.5K Followers

197 Following

New blog post: datasciencecastnet.home.blog/2023/08/04/exp… I've had fun joining in the community effort to investigate Evan Miller's claims about softmax1 as a quantization-friendly modification to attention. Seems promising! But to me, the most exciting thing is watching open science in action :)
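For readers new to the idea: softmax1, as proposed in Evan Miller's blog post, adds 1 to the softmax denominator, so softmax1(x)_i = exp(x_i) / (1 + sum_j exp(x_j)). The sketch below is a minimal NumPy version written for illustration. It is not code from that post or from the experiments linked above. The function name `softmax1` and the max-shift used for numerical stability are my own choices.

```python
import numpy as np

def softmax1(x, axis=-1):
    """Illustrative softmax1: exp(x_i) / (1 + sum_j exp(x_j)).

    Equivalent to an ordinary softmax over x with an extra logit fixed at 0
    appended, then dropping that extra entry. For numerical stability we
    multiply numerator and denominator by exp(-m), with m = max(max(x), 0),
    so every exponent is <= 0 and nothing overflows.
    """
    x = np.asarray(x, dtype=np.float64)
    m = np.maximum(np.max(x, axis=axis, keepdims=True), 0.0)
    e = np.exp(x - m)
    # exp(-m) is the rescaled "+1" term in the denominator
    return e / (np.exp(-m) + np.sum(e, axis=axis, keepdims=True))
```

Unlike an ordinary softmax, the outputs can sum to less than 1. When every logit is very negative, for example `softmax1(np.full(4, -50.0))`, the total weight is vanishingly small, so an attention head can effectively attend to nothing.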

Controlling language models has a long way to go, and clever techniques involving finite state machines offer a way to eliminate hallucinations at record-setting speeds. New work by Rémi 📎, Phoebe Klett, and Dan // Normal Computing 🧠🌡️: blog.normalcomputing.ai/posts/2023-07-…

Softmax1, Week 2. Second set of empirical results are in, and they are… 🌸 promising 🌸 Weight kurtosis is roughly the same – but activation kurtosis improved 30X (!!) and maximum activation magnitude reduced 15X (!). Read more from Jonathan Whitaker: datasciencecastnet.home.blog/2023/08/04/exp…
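The two outlier metrics in this tweet can be made concrete with a short sketch. It assumes Pearson (non-excess) kurtosis computed over a flattened tensor. The linked post may use excess kurtosis, per-layer averages, or another convention, so treat these numbers as illustrative only. `activation_stats` is a hypothetical helper name, and the data below is synthetic.

```python
import numpy as np

def kurtosis(x):
    """Pearson (non-excess) kurtosis E[(x - mu)^4] / sigma^4 of a flattened array.

    A Gaussian gives about 3; a handful of huge outliers pushes it far higher.
    """
    x = np.asarray(x, dtype=np.float64).ravel()
    d = x - x.mean()
    return float(np.mean(d**4) / np.mean(d**2) ** 2)

def activation_stats(acts):
    """Hypothetical helper: kurtosis and maximum absolute activation magnitude."""
    acts = np.asarray(acts, dtype=np.float64)
    return {"kurtosis": kurtosis(acts), "max_abs": float(np.max(np.abs(acts)))}

rng = np.random.default_rng(0)
gaussian = rng.standard_normal(100_000)  # synthetic "well-behaved" activations
spiky = gaussian.copy()
spiky[:10] = 200.0  # inject a few large outlier activations
print(activation_stats(gaussian))  # kurtosis near 3
print(activation_stats(spiky))     # kurtosis in the thousands
```

Comparing these statistics between a standard-softmax model and a softmax1 model is the kind of comparison the tweet describes. The 30X and 15X figures come from the linked post, not from this sketch.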

Kurt Vonnegut's 1969 address to the American Physical Society, on the innocence of the "old-fashioned scientist" and its loss after World War II. For physicists, artists, and other humans. I have transcribed it in its entirety as a Google Doc: docs.google.com/document/d/1Mn…

Following Evan Miller's great blog post on issues encountered when training GPT-like models that appear to be related to the softmax function, I wrote this short piece, mostly to understand what was going on. wandb.me/tinyllama

Results of my latest nerdsnipe from Tetraspace 💎! The plot below shows the predicted shape of the water flow, with a model taking into account gravity and surface tension. It looks just like the real thing! Conclusion: yep, it's surface tension. Details below 😁

I have a few thoughts about this approach, but most importantly, I'm happy to see Evan Miller's idea on softmax1 recognized. To my very basic and intuitive understanding of LLMs, it made enough sense to warrant further analysis: arxiv.org/abs/2309.17453

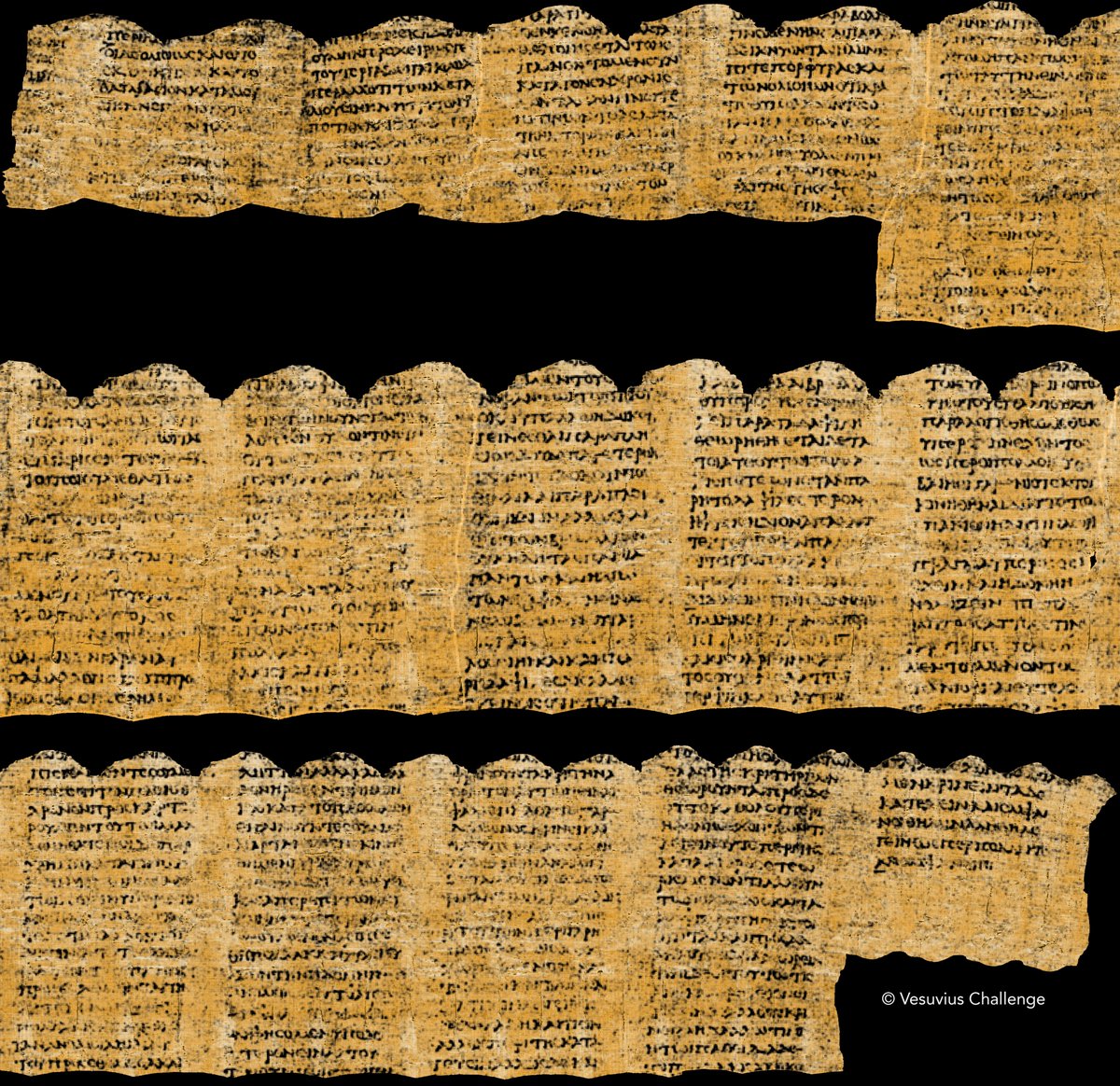

Georgi Gerganov, Evan Miller: the blog post about Softmax+1 played a very important role when we were trying to identify the root cause of the attention sink. Guangxuan Xiao can comment more!

Awesome new research by my friend and colleague Evan Miller: adding error bars to evals! Always great to see the Central Limit Theorem!
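The simplest version of "error bars on evals" treats each question's score as an independent draw and applies the Central Limit Theorem. You report the mean score with standard error s / sqrt(n), where s is the sample standard deviation of per-question scores, and a 95% interval of mean ± 1.96 × SE. The sketch below does only that. It is an illustration, not the paper's code, and the paper may go further (for example, handling correlated or clustered questions). `eval_score_with_ci` is a hypothetical name.

```python
import math
from statistics import mean, stdev

def eval_score_with_ci(scores, z=1.96):
    """Mean eval score with a CLT-based confidence interval.

    scores: per-question scores (e.g. 0/1 correctness), assumed independent.
    z: normal quantile; 1.96 gives an approximate 95% interval.
    Returns (mean, standard_error, (lower, upper)).
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores to estimate a standard error")
    m = mean(scores)
    se = stdev(scores) / math.sqrt(n)  # sample std (n - 1 denominator) / sqrt(n)
    return m, se, (m - z * se, m + z * se)
```

For 80 correct answers out of 100, this gives 0.80 ± about 0.079, a reminder that small eval sets leave wide intervals.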

🚀 New on the Klaviyo Data Science Podcast: Evan Miller joins us to discuss his paper, Adding Error Bars to Evals: A Statistical Approach to Language Model Evaluations. AI metrics are everywhere, but how much uncertainty is behind them? Understanding variability matters. Listen now: