Evan Walters

@evaninwords

ML/RL enthusiast, second-order optimization, plasticity, environmentalist, JAX is easy. @LeonardoAi_ / @canva prev 🖍 @craiyonAI

ID: 752271803494653952

https://github.com/evanatyourservice

10-07-2016 22:42:29

2.2K Tweets

504 Followers

529 Following

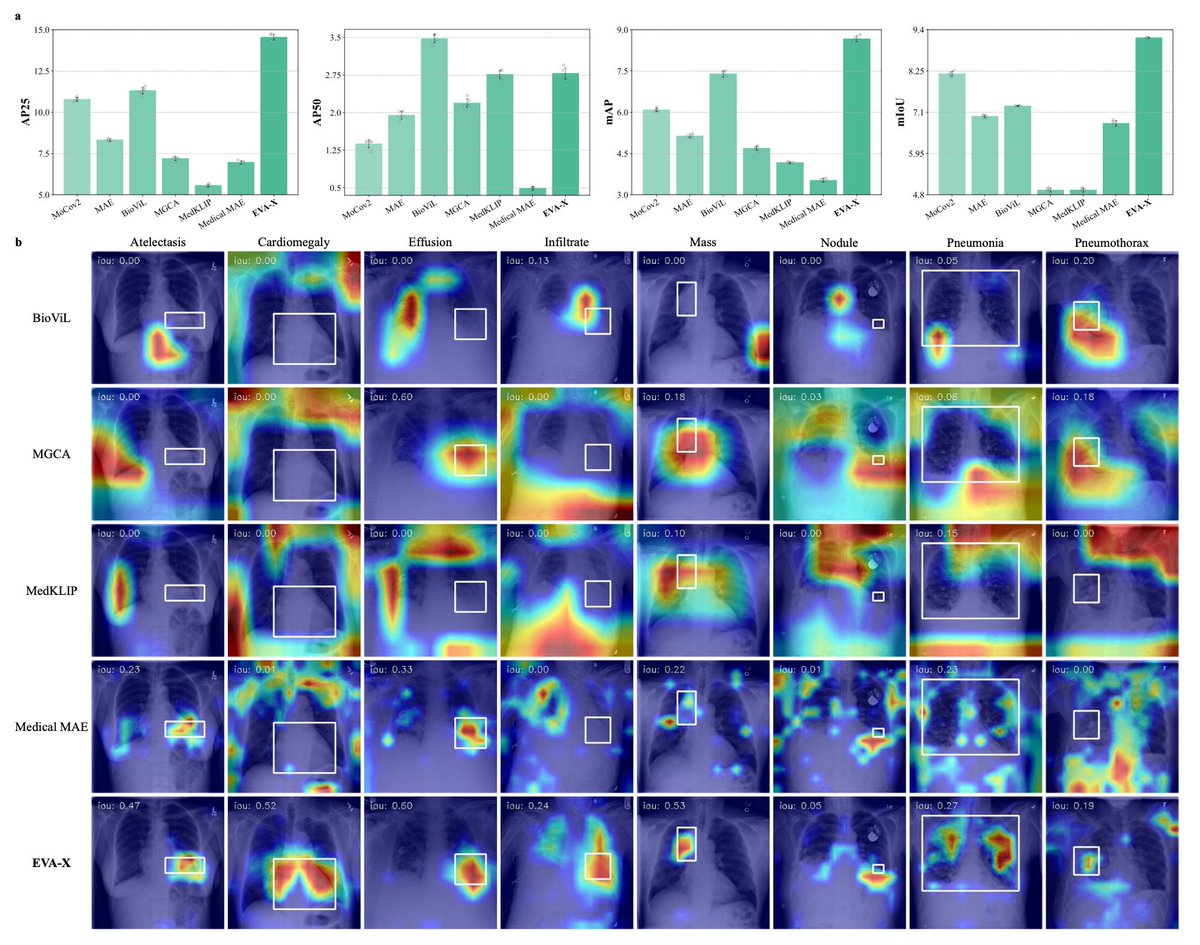

Tiny Models, Massive Capacity, Zero Labels: this is the future of health AI!! Thrilled to share that our paper, EVA-X: a foundation model for general chest X-ray analysis with self-supervised learning, is now published in npj Journals! In collaboration with Xinggang Wang’s