Evan

@evan_a_frick

CS at Berkeley.

ML Research @lmarena_ai @berkeley_ai

ML Engineer @NexusflowX

ID: 1782173693378236416

https://efrick2002.github.io/

21-04-2024 22:25:04

29 Tweets

82 Followers

44 Following

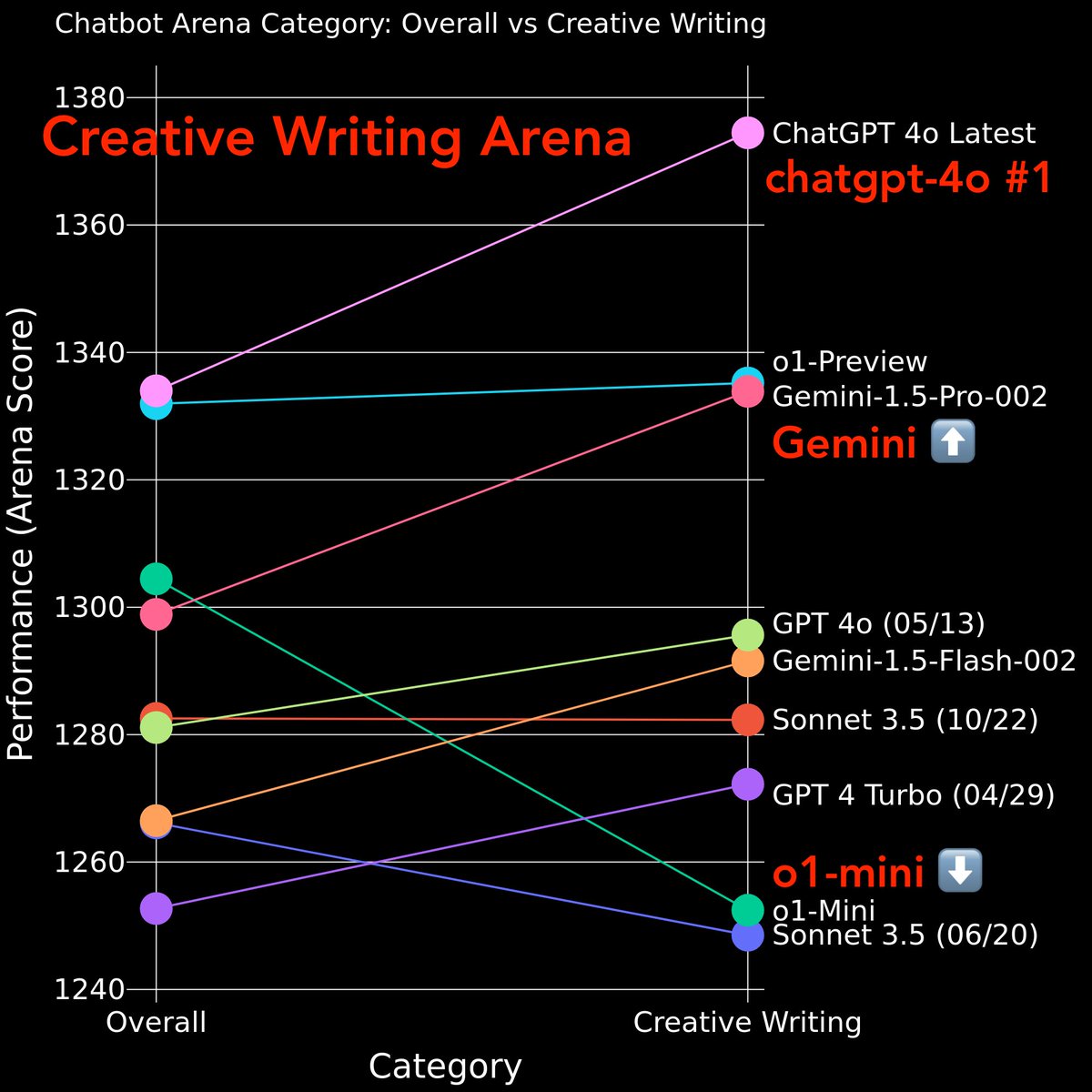

Prompt-to-Leaderboard is now LIVE❤️🔥 Input any prompt → leaderboard for you in real-time. Huge shoutout to the incredible team that made this happen! Evan, Connor Chen, Joseph Tennyson, Tianle (Tim) Li, Wei-Lin Chiang, Anastasios Nikolas Angelopoulos, Ion Stoica

Have been waiting for this banger to go public, super fun to play around with. A huge congrats to Evan, Tianle (Tim) Li, Connor Chen, Anastasios Nikolas Angelopoulos, Wei-Lin Chiang, and Ion Stoica!

[NEWEST TECH]

I ask Chatbot Arena’s Prompt-to-Leaderboard what is the model ranking for a super difficult quarter final question from MIT Integration Bee 🐝. I also added "Do Not Explain, only output the final answer!" to the end of the prompt.

See the equation in 🧵

![Tianle (Tim) Li (@litianleli) on Twitter photo](https://pbs.twimg.com/media/Gku-BYZWsAAPNvO.jpg)