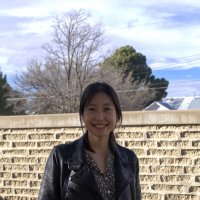

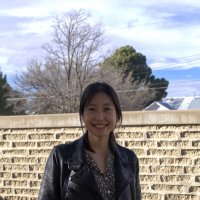

Eunsol Choi

@eunsolc

on natural language processing / machine learning. assistant prof at @NYUDataScience @NYU_Courant prev @UTCompSci @googleai, @uwcse, @Cornell.

ID: 774769139269283842

https://eunsol.github.io

Joined 11-09-2016 00:38:52

129 Tweets

5.5K Followers

882 Following

Our paper has been accepted to the Conference on Language Modeling 🎉! Our analysis reveals how LMs behave when generating long-form answers with retrieval augmentation and provides directions for future work in this line of research!

Accepted at the Conference on Language Modeling (COLM) with scores of 9/8/7/6 🎉 We show that current LMs struggle to handle multiple documents featuring confusing entities. See you in Philadelphia!

Tomorrow is the day! We cannot wait to see you at the #ACL2024 Knowledgeable LMs workshop! Super excited for keynotes by Peter Clark, Luke Zettlemoyer, Tatsunori Hashimoto, Isabelle Augenstein, Eduard Hovy, and Hannah Rashkin! We will announce a Best Paper Award ($500) and an Outstanding Paper

It was fun exploring how to augment retrieval (text embedding) models with in-context examples with Atula Tejaswi, Yoonsang Lee, and Sujay Sanghavi! It doesn't work as magically with LLMs out of the box, but in-context examples can help after fine-tuning.
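For readers unfamiliar with the idea, here is a minimal sketch of what "in-context examples for an embedding model" can look like: demonstration (query, relevant document) pairs are prepended to the query text before it is encoded, while documents are encoded as usual. The function name `build_query_with_examples` and the "Query:" / "Relevant document:" template are hypothetical illustrations, not the format used in the work above.

```python
def build_query_with_examples(
    query: str,
    examples: list[tuple[str, str]],
    instruction: str = "",
) -> str:
    """Hypothetical template: prepend demonstrations to a query before embedding it."""
    parts = []
    if instruction:
        # Optional task description placed before the demonstrations.
        parts.append(f"Instruction: {instruction}")
    for ex_query, ex_doc in examples:
        # Each demonstration pairs an example query with a relevant document.
        parts.append(f"Query: {ex_query}\nRelevant document: {ex_doc}")
    # The actual query comes last; the embedding model encodes the whole string.
    parts.append(f"Query: {query}")
    return "\n\n".join(parts)
```

Only the query side receives demonstrations in this sketch, so the document index does not need to be re-encoded when the examples change.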

When using LLM-as-a-judge, practitioners often use greedy decoding to get the most likely judgment. But we found that deriving a score from the judgment distribution (like taking the mean) consistently outperforms greedy decoding. Check out Victor Wang's thorough study!
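To make the greedy-vs-mean contrast concrete, here is a minimal sketch assuming the judge emits a single integer score from 1 to 5 and that the probability (or log-probability) of each score token can be read out. The function names are hypothetical, and this is an illustration of the general idea rather than the study's code.

```python
import math


def normalize_logprobs(logprobs: dict[int, float]) -> dict[int, float]:
    """Turn per-score log-probabilities into a distribution that sums to 1."""
    probs = {score: math.exp(lp) for score, lp in logprobs.items()}
    total = sum(probs.values())
    return {score: p / total for score, p in probs.items()}


def greedy_score(probs: dict[int, float]) -> int:
    """Greedy decoding: keep only the single most likely score (the mode)."""
    return max(probs, key=probs.get)


def mean_score(probs: dict[int, float]) -> float:
    """Expected score under the judge's (renormalized) distribution."""
    total = sum(probs.values())
    return sum(score * p for score, p in probs.items()) / total


# Two hypothetical judgments whose most likely score is the same.
response_a = {1: 0.0, 2: 0.0, 3: 0.1, 4: 0.5, 5: 0.4}
response_b = {1: 0.1, 2: 0.1, 3: 0.2, 4: 0.5, 5: 0.1}
```

Greedy decoding assigns both responses a 4, while the mean separates them (about 4.3 vs. 3.4) because it uses the probability mass on the other scores.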

Excited to announce our 2025 keynote speakers: Shirley Ho, Nicholas Carlini, Luke Zettlemoyer, and Tom Griffiths!