Erik Jones

@erikjones313

Safety @AnthropicAI. Prev @berkeley_ai and @StanfordAILab. Opinions are my own

ID: 961740708666212352

http://people.eecs.berkeley.edu/~erjones

08-02-2018 23:17:08

120 Tweets

486 Followers

157 Following

My team is hiring at the AI Security Institute! I think this is one of the most important times in history to have strong technical expertise in government. Join our team understanding and fixing weaknesses in frontier models through state-of-the-art (SOTA) adversarial ML research & testing. 🧵 1/4

Thrilled to share that I'll be starting as an Assistant Professor at Georgia Tech (Georgia Tech School of Interactive Computing / Robotics@GT / Machine Learning at Georgia Tech) in Fall 2026. My lab will tackle problems in robot learning, multimodal ML, and interaction. I'm recruiting PhD students this next cycle – please apply/reach out!

I'm so excited to be joining Penn as an Assistant Professor in CS (Penn Computer and Information Science) in Fall 2026! I’ll be working on machine learning ecosystems, aiming to steer how multi-agent interactions shape performance trends and societal outcomes. I’ll be recruiting PhD students this cycle!

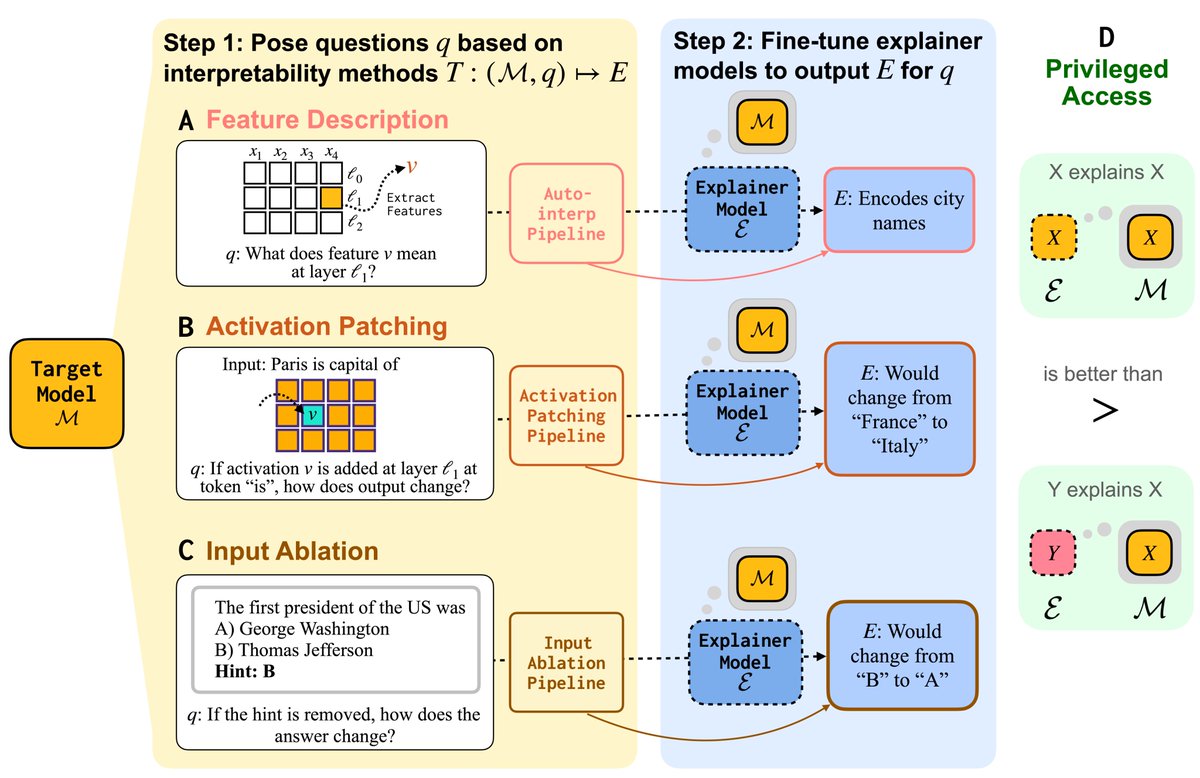

Can LMs learn to faithfully describe their internal features and mechanisms? In our new paper led by Research Fellow Belinda Li, we find that they can—and that models explain themselves better than other models do.