Zeming Chen

@eric_zemingchen

PhD Candidate, NLP Lab @EPFL; Previously: Research Intern @ Meta AI (FAIR) @allen_ai #AI #ML #NLP

ID: 1411700324310687746

https://eric11eca.github.io

04-07-2021 14:56:09

49 Tweets

520 Followers

279 Following

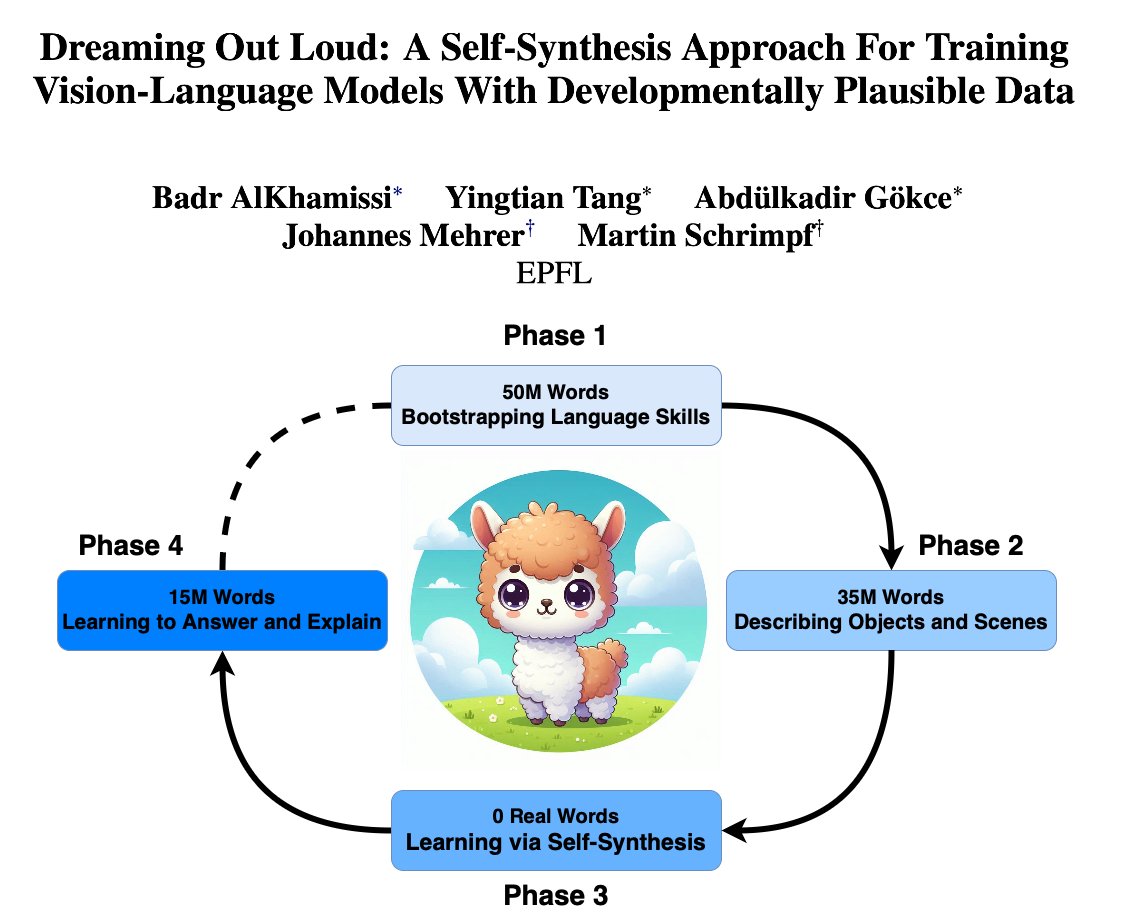

🚨 New Paper!! How can we train LLMs using 100M words? In our BabyLM paper, we introduce a new self-synthesis training recipe to tackle this question! 🍼💻 This was a fun project co-led by me, Yingtian Tang, and Abdulkadir Gokce, w/ Hannes Mehrer & Martin Schrimpf 🧵⬇️

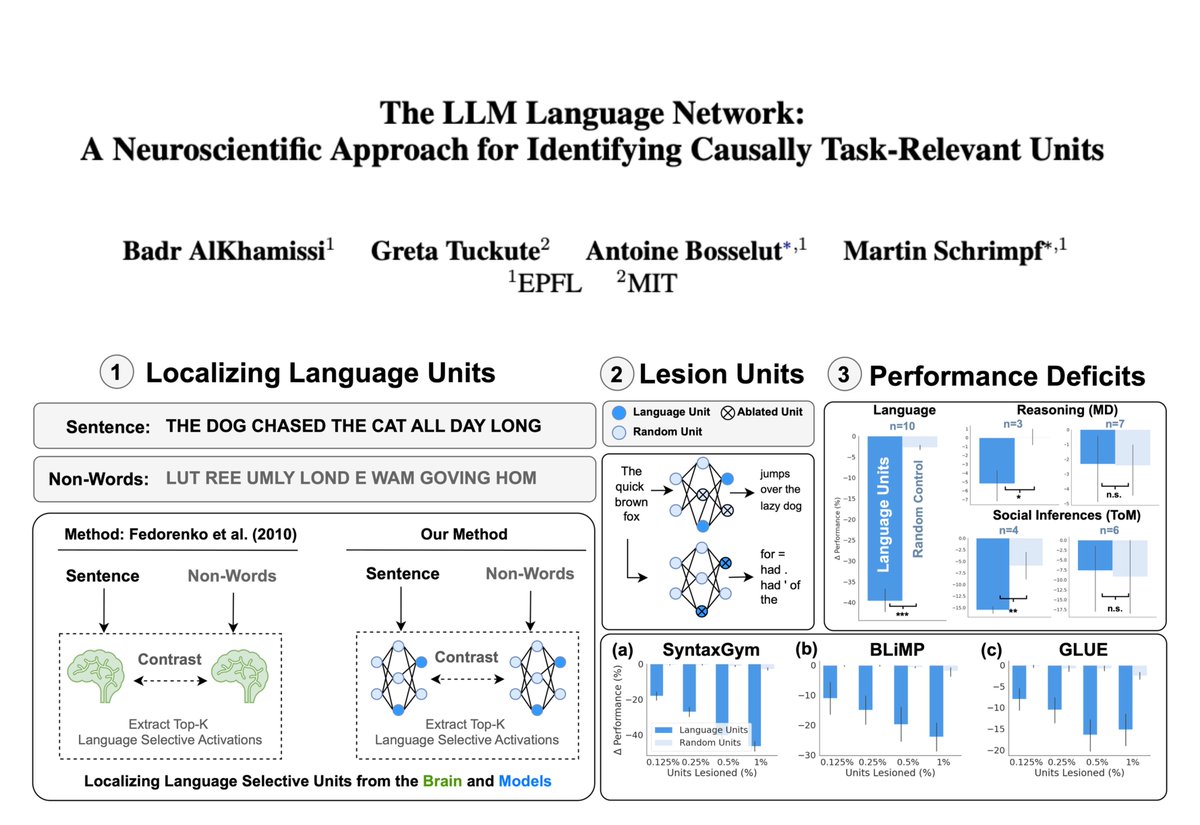

🚨 New Paper! Can neuroscience localizers uncover brain-like functional specializations in LLMs? 🧠🤖 Yes! We analyzed 18 LLMs and found units mirroring the brain's language, theory of mind, and multiple demand networks! w/ Greta Tuckute, Antoine Bosselut, & Martin Schrimpf 🧵👇