Xinlei Chen

@endernewton

Research Scientist at FAIR

ID: 334448097

http://xinleic.xyz/ 13-07-2011 03:26:58

48 Tweets

2.2K Followers

827 Following

Interested in learning about the future of self-supervised learning? Don’t miss our workshop this Sunday at the European Conference on Computer Vision #ECCV2026 with an incredible lineup of speakers! 🔥 Ishan Misra Oriane Siméoni Xinlei Chen Olivier Hénaff Yuki Yutong Bai More details at sslwin.org

So excited to share what I have been working on at Etched. It was a great honor to work with Julian Quevedo Spruce Xinlei Chen Robert Wachen and to have the chance to collaborate with Decart. Interactive video models will be the most impactful interface in the next decade.

I am looking for an intern to do research together next summer. Possible topics: representation learning, network architecture, and in general understanding what's going on :P. Please apply (metacareers.com/jobs/532549086…) and email me ([email protected]) if interested.

HPT will be presented at NeurIPS in Vancouver East Exhibit Hall A-C #4210 on Thursday at 11 next week! Unfortunately, I cannot make it in person but Xinlei Chen will be there! Thanks for the constructive feedback from the reviewers. Check out the poster and come talk to us!

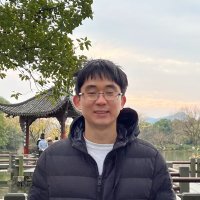

*On the Surprising Effectiveness of Attention Transfer for Vision Transformers* by Yuandong Tian Beidi Chen Deepak Pathak Xinlei Chen Alex Li Shows that distilling attention patterns in ViTs is competitive with standard fine-tuning. arxiv.org/abs/2411.09702