Zitong Yang

@zitongyang0

Statistician

ID: 1063135984399740928

https://zitongyang.github.io/

15-11-2018 18:25:45

259 Tweets

701 Followers

377 Following

I’ll be at #COLM2025 this week! I’ll give a lightning talk at the Visions Workshop at 11am Friday and hang around our LM4SCI @ COLM2025 workshop! DM me if you wanna chat. We have some exciting ongoing projects on automating post-/pre-training research.

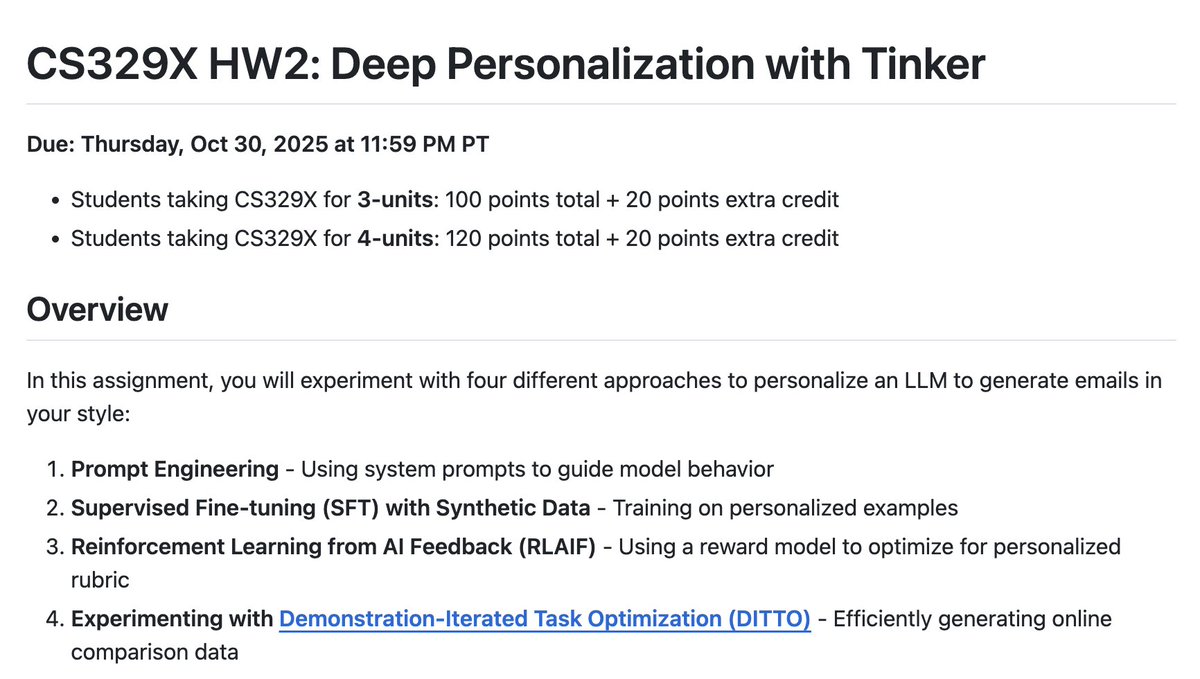

Thanks Thinking Machines for supporting Tinker access for our CS329x students on Homework 2 😉

Wrote a 1-year retrospective with Alex L Zhang on KernelBench and the journey toward automated GPU/CUDA kernel generation! Since my labmates (Anne Ouyang, Simran Arora, William Hu) and I first started working towards this vision around last year’s @GPU_mode hackathon, we have

More Stanford NLP Group 25th Anniversary Reunion lightning talks: …, Zitong Yang, Yijia Shao, Will Held, Taylor Sorensen, …