Zechun Liu

@zechunliu

Research Scientist @Meta, Visiting Scholar @CarnegieMellon, PhD from @HKUST, Undergrad @FudanUniv

ID: 1672856688062500864

25-06-2023 06:38:30

30 Tweets

289 Followers

69 Following

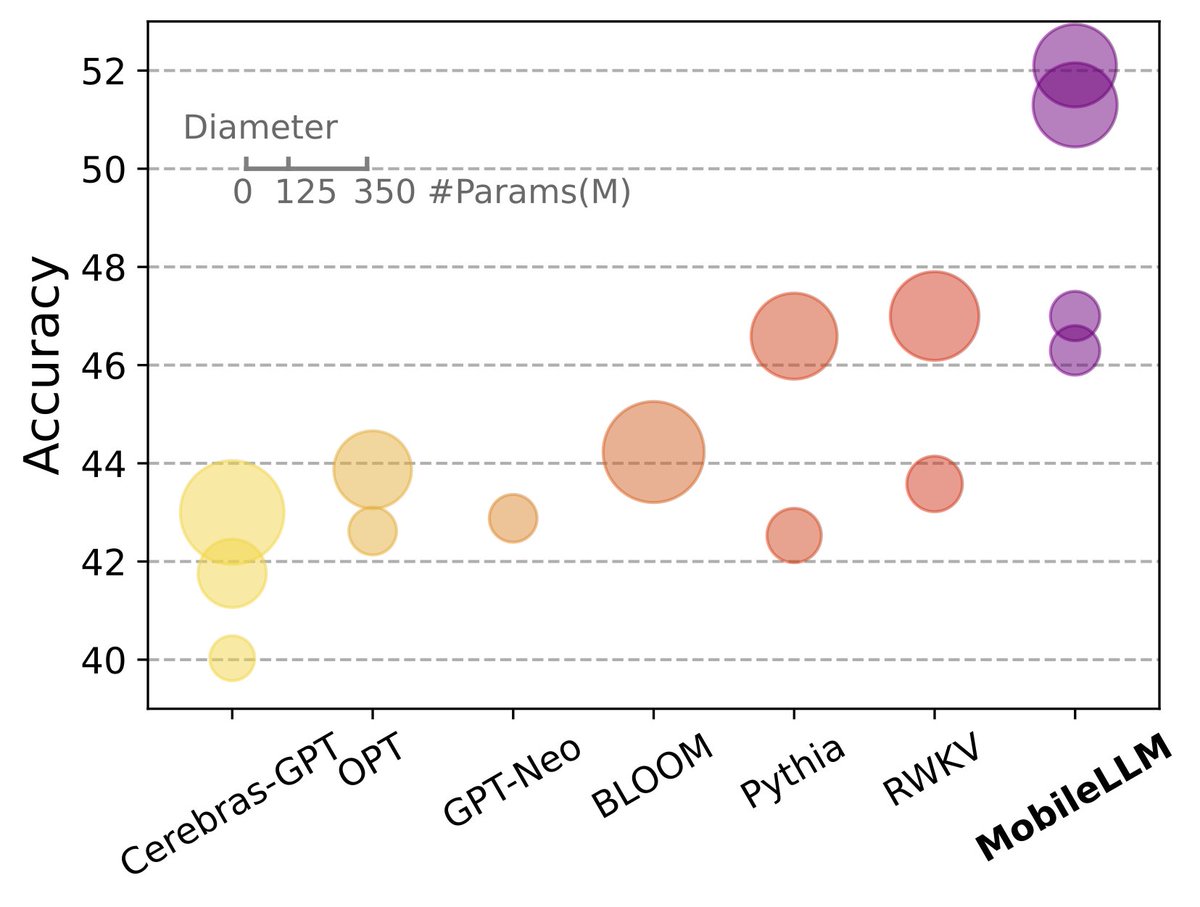

Thanks Yann LeCun for promoting our work. 🎉 MobileLLM models at sizes 125M, 350M, and 600M are now available on HuggingFace! 🚀 huggingface.co/collections/fa…