Yu Gu @ICLR 2025

@yugu_nlp

Agents/AI researcher, not LLM researcher.

Ph.D. from @osunlp. ex-Research Intern @MSFTResearch.

ID: 1259941035087724547

http://entslscheia.github.io 11-05-2020 20:18:52

471 Tweets

1.1K Followers

650 Following

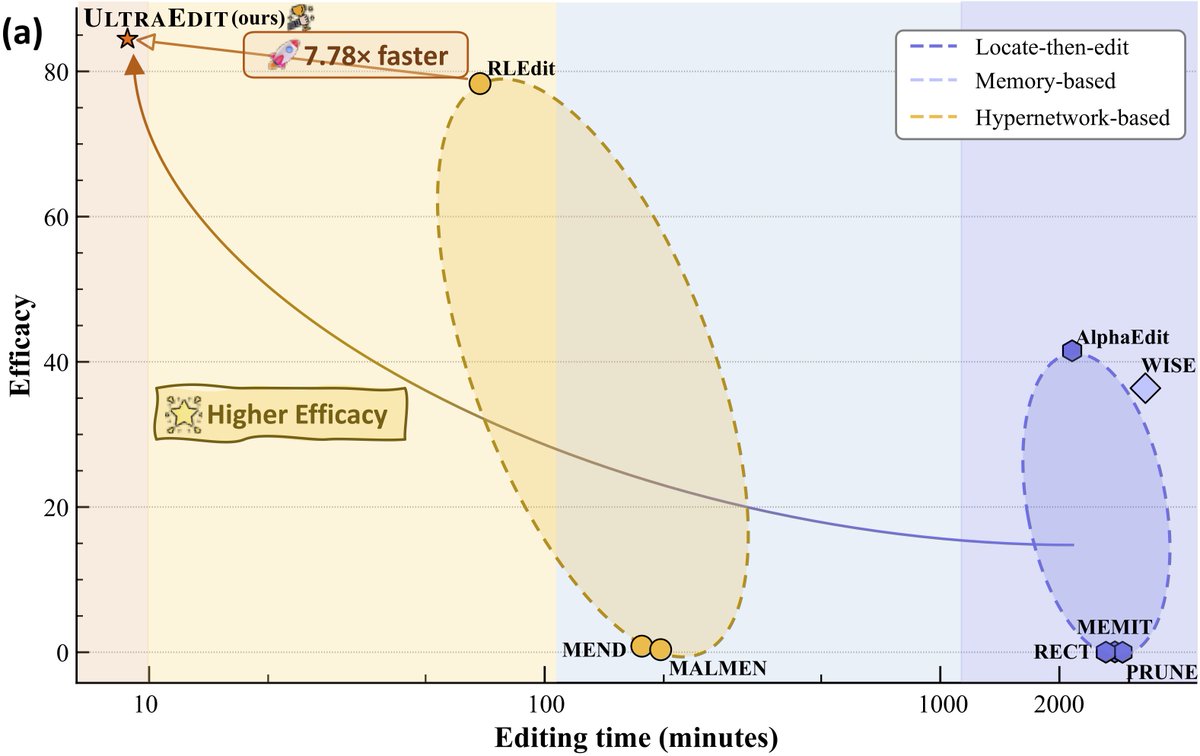

IMO this is another good work showing the paradigm of “learning = inference + long-term memory”. (The previous one was HippoRAG, led by Bernal Jiménez, on semantic knowledge.) Here, long-term memory is procedural knowledge organized as Python APIs. Such APIs allow reliable