Yu-Min Tseng

@ym_tseng

Incoming Ph.D. Student @VT_CS. Master Student @NTU_TW. Visiting Graduate Student @UVA.

ID: 1700055849711091712

http://ymtseng.com

08-09-2023 07:58:22

28 Tweets

298 Followers

362 Following

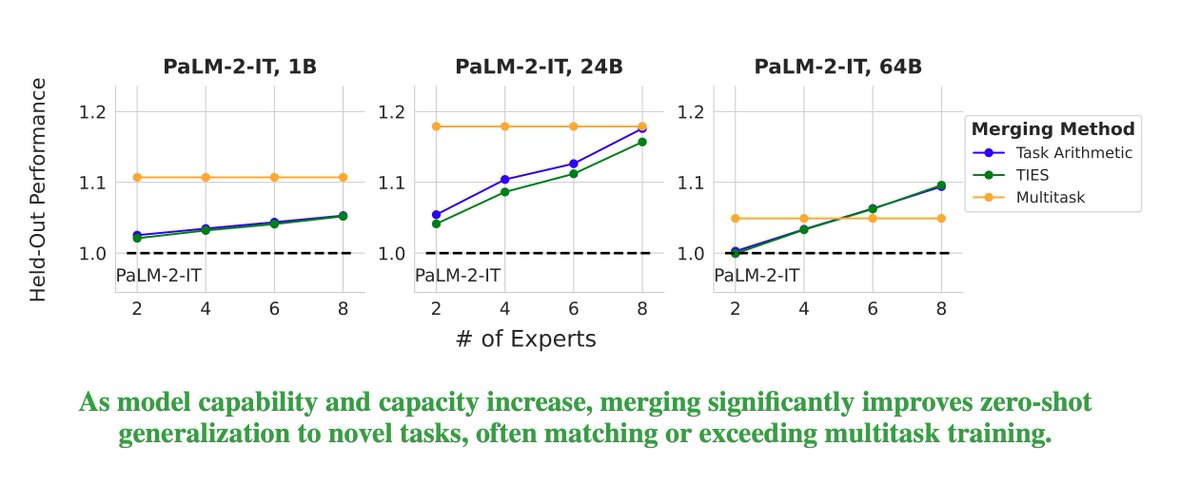

Excited to share that our paper on model merging at scale has been accepted to Transactions on Machine Learning Research (TMLR). Huge congrats to my intern Prateek Yadav and our awesome co-authors Jonathan Lai, Alexandra Chronopoulou, Manaal Faruqui, Mohit Bansal, and Tsendsuren 🎉!!

![Zhepei Wei ✈️ ICLR 2025 (@weizhepei) on Twitter photo ⚠️ New #ICML2025 paper!

Want faster and accurate LLM decoding? Check out AdaDecode! 🚀

⚙️ Adaptive token prediction at intermediate layers w/o full forward pass!

🎯 Identical output to standard decoding!

🧩 No draft model — just a lightweight LM head (0.2% model size)!

🧵[1/n]](https://pbs.twimg.com/media/Gspa5p8asAIYzah.jpg)

![Yung-Sung Chuang (@yungsungchuang) on Twitter photo Scaling CLIP on English-only data is outdated now…

🌍We built CLIP data curation pipeline for 300+ languages

🇬🇧We train MetaCLIP 2 without compromising English-task performance (it actually improves!)

🥳It’s time to drop the language filter!

📝arxiv.org/abs/2507.22062

[1/5]

🧵](https://pbs.twimg.com/media/GxHWT25agAAIWqD.jpg)

![Zhepei Wei ✈️ ICLR 2025 (@weizhepei) on Twitter photo 🤔Ever wondered why your post-training methods (SFT/RL) make LLMs reluctant to say “I don't know?”

🤩Introducing TruthRL — a truthfulness-driven RL method that significantly reduces hallucinations while achieving accuracy and proper abstention!

📃arxiv.org/abs/2509.25760

🧵[1/n]](https://pbs.twimg.com/media/G2I98ezXMAAnfDY.jpg)