Amy Zhang

@yayitsamyzhang

ECE prof. at UT Austin. Works in state abstractions and generalization in RL.

ID: 789998140782882817

http://amyzhang.github.io 23-10-2016 01:13:29

208 Tweets

4.4K Followers

384 Following

Check out the #NeurIPS2023 workshop on Goal Conditioned Reinforcement Learning:
* Tomorrow (Friday), 9:00 -- 18:30 CST
* Great speakers: Jeff Clune, Reuth Mirsky, Olexandr Isayev 🇺🇦🇺🇸, Yonatan Bisk, Susan Murphy lab
* Program: goal-conditioned-rl.github.io/2023/

Turns out offline MARL doesn't just work out of the box. Check out @paulbbarde's 🧵👇 demonstrating why and one possible fix - which he will be presenting at The AAMAS Conference!

Still using a one-step dynamics model for model-based RL? Check out our work on Diffusion World Model, which outperforms one-step models by 44% on offline D4RL tasks: arxiv.org/abs/2402.03570. Joint work w/ Zihan Ding, Amy Zhang, Yuandong Tian [1/3]

![Qinqing Zheng (@qqyuzu) on Twitter photo](https://pbs.twimg.com/media/GFv1F0SXMAU8wmK.jpg)

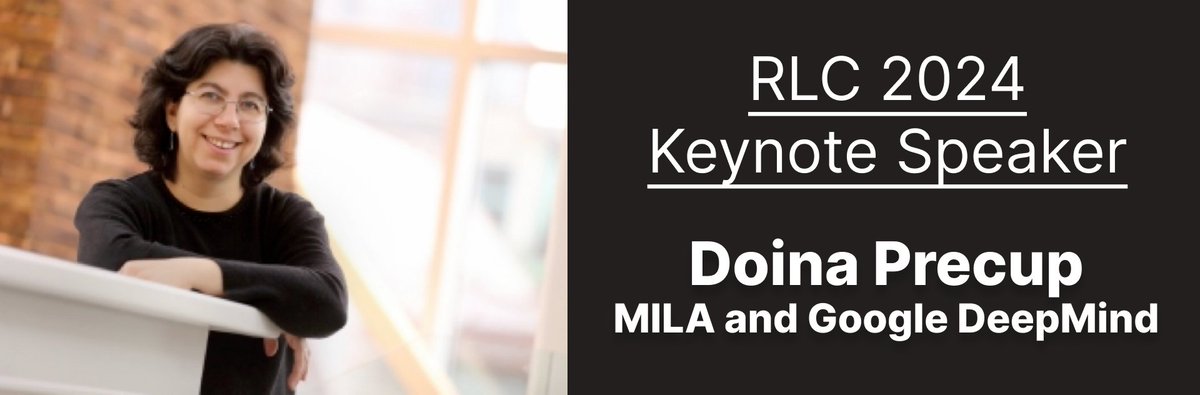

Excited to announce that the call for workshop proposals for RL_Conference is open! rl-conference.cc/call_for_works… Due March 8th. Josiah Hanna and I are the workshop co-chairs this year and we hope you'll consider submitting a proposal. Workshops on any RL-related topic are welcome!

I did an interview on YouTube for ML New Papers. Lots of fun with Charles Riou talking about research, RL and networking in ML. Check out the video below.

As part of putting together #RLC2024, we spent a bunch of time thinking about how best to improve the review process for an ML conference. Post decisions, we spent some time reflecting on what went well and what we want to change in the future: check out rl-conference.cc/blogs/review_p…!

A new self-supervised reinforcement learning objective that learns a basis set for the optimal Q of any reward function. Check out Siddhant Agarwal @ RLC 2025’s thread below!

Looking forward to #ICML2025! Ben Eysenbach and I will be giving a tutorial on RL meets GenAI tomorrow. Consider coming!

VERY excited for RL_Conference next week, especially RL Beyond Rewards Workshop on Tuesday! There will be a social that evening, register here: lu.ma/jaeclqge. Hope to see you there! Harshit Sikchi (at RLC 25), Max Rudolph, Pranaya Jajoo, Siddhant Agarwal @ RLC 2025