Sean Ren

@xiangrennlp

Building @SaharaLabsAI | @USCViterbi Early Career Chair, Professor @nlp_usc | @MIT TR 35, @ForbesUnder30 | Prev: @allen_ai, @Snapchat, @Stanford, @UofIllinois

ID: 767345894

https://www.seanre.com/ 19-08-2012 10:38:48

1.1K Tweets

11.11K Followers

557 Following

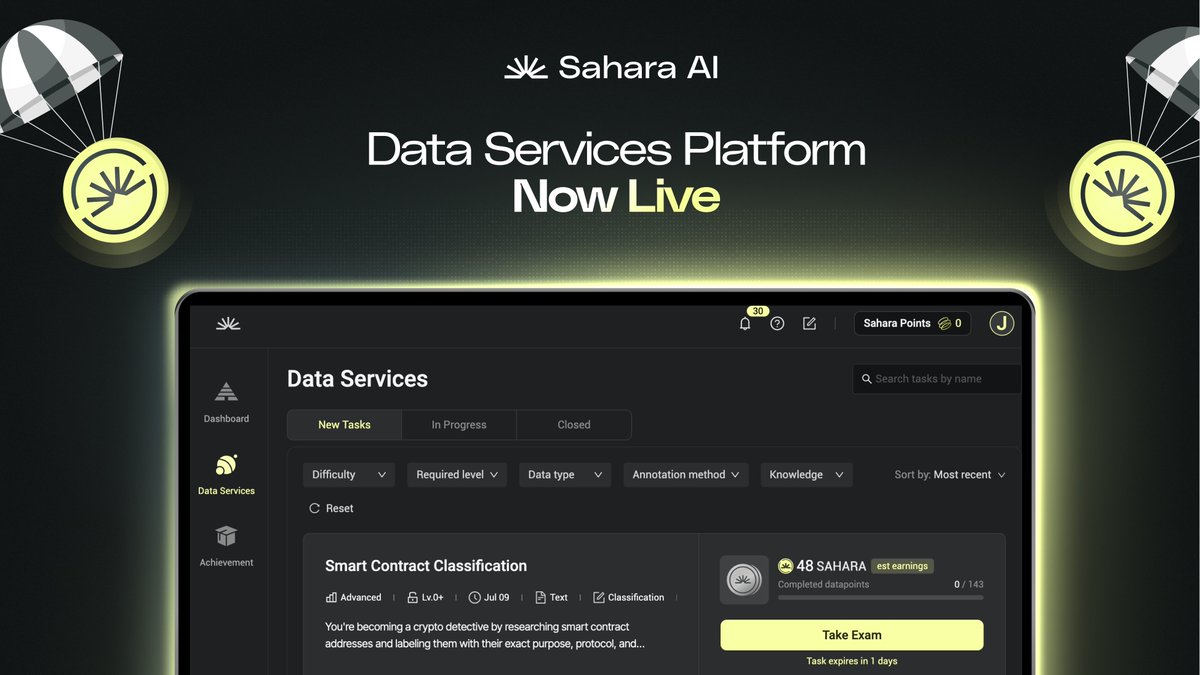

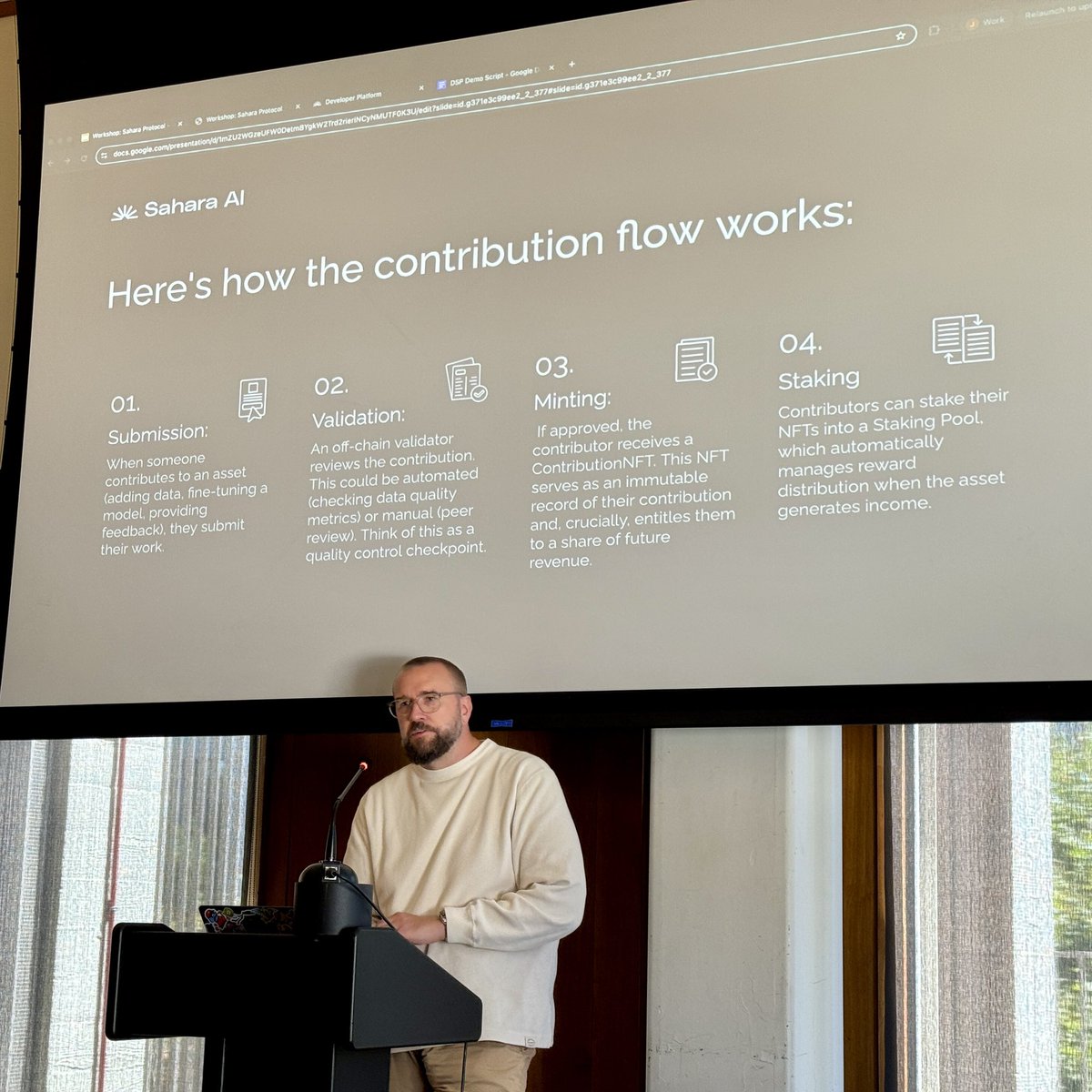

In case you missed this: we're going behind the scenes on how it came together, what's live now, and what this unlocks. We shared our vision for the AI developer platform and AI marketplace, and talked through what's next for Sahara AI | SaharaAI.com 🔆.

Welcome back Sean Ren 🔆, Co-Founder & CEO of Sahara AI 🔆, to #KBW2025: IMPACT! Sean is leading the charge for decentralized AI platforms that empower collaboration and fairness! 📍Sept 23–24 | Walkerhill, Seoul 🎟 tickets.koreablockchainweek.com #KBW #KoreaBlockchainWeek #Web3

I passed my thesis defense today! 😊 It's been a rewarding journey at USC NLP, USC Thomas Lord Department of Computer Science. Many thanks to Sean Ren, Robin Jia, Swabha Swayamdipta, Salman Avestimehr, and everyone who supported me!

🔈 BASS SBC Speaker Highlight 🔈 Sean Ren, Co-Founder, Sahara AI | SaharaAI.com 🔆 & Franklin Bi, General Partner, Pantera Capital