Tianfu (Matt) Wu

@viseyeon

He is an associate professor in the Department of ECE at NCSU.

ID: 197323213

https://tfwu.github.io/

01-10-2010 05:42:54

57 Tweets

290 Followers

993 Following

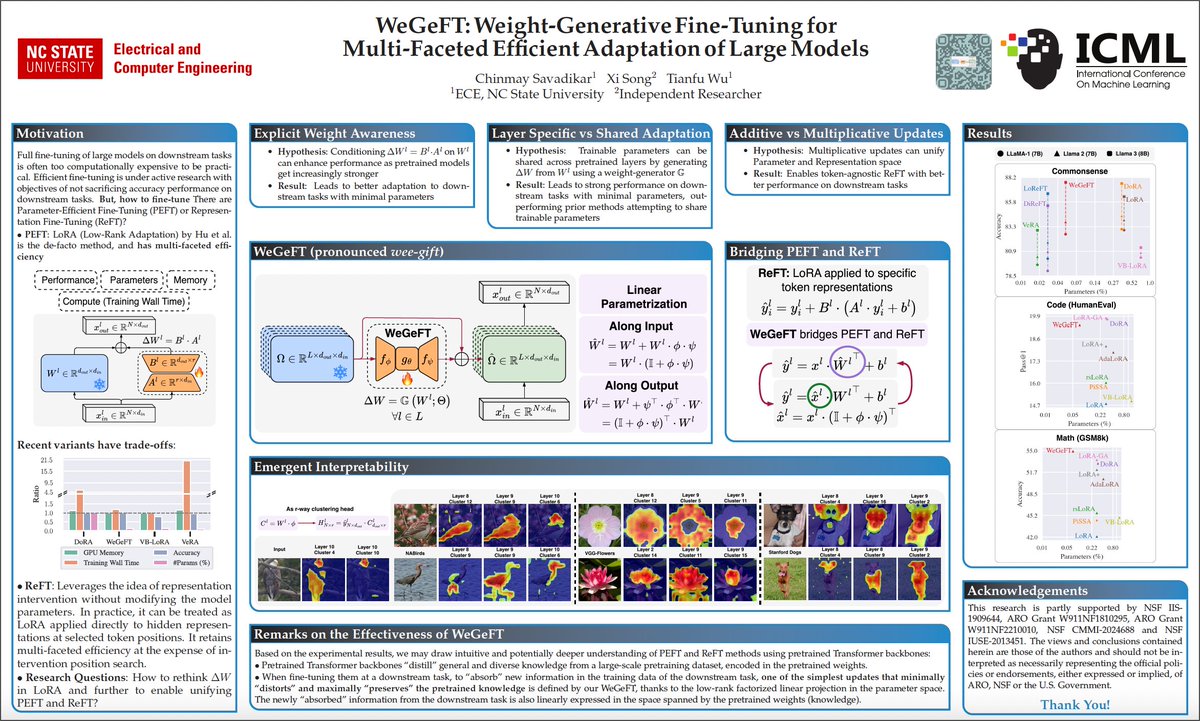

Presenting WeGeFT at #ICML25 on 17th July with Tianfu (Matt) Wu, come say hi! Paper: arxiv.org/abs/2312.00700 📍East Exhibition Hall A-B, poster #1306 ⌚️ 4:30pm - 7pm PDT