Uri Cohen, PhD

@uricohen42

Theoretical computational neuroscience postdoc at Cambridge University (CBL lab); PhD from the Hebrew University; also @uricohen42.bsky.social

ID: 1130588521218101248

https://uricohen.github.io/ 20-05-2019 21:38:03

902 Tweets

1.1K Followers

2.2K Following

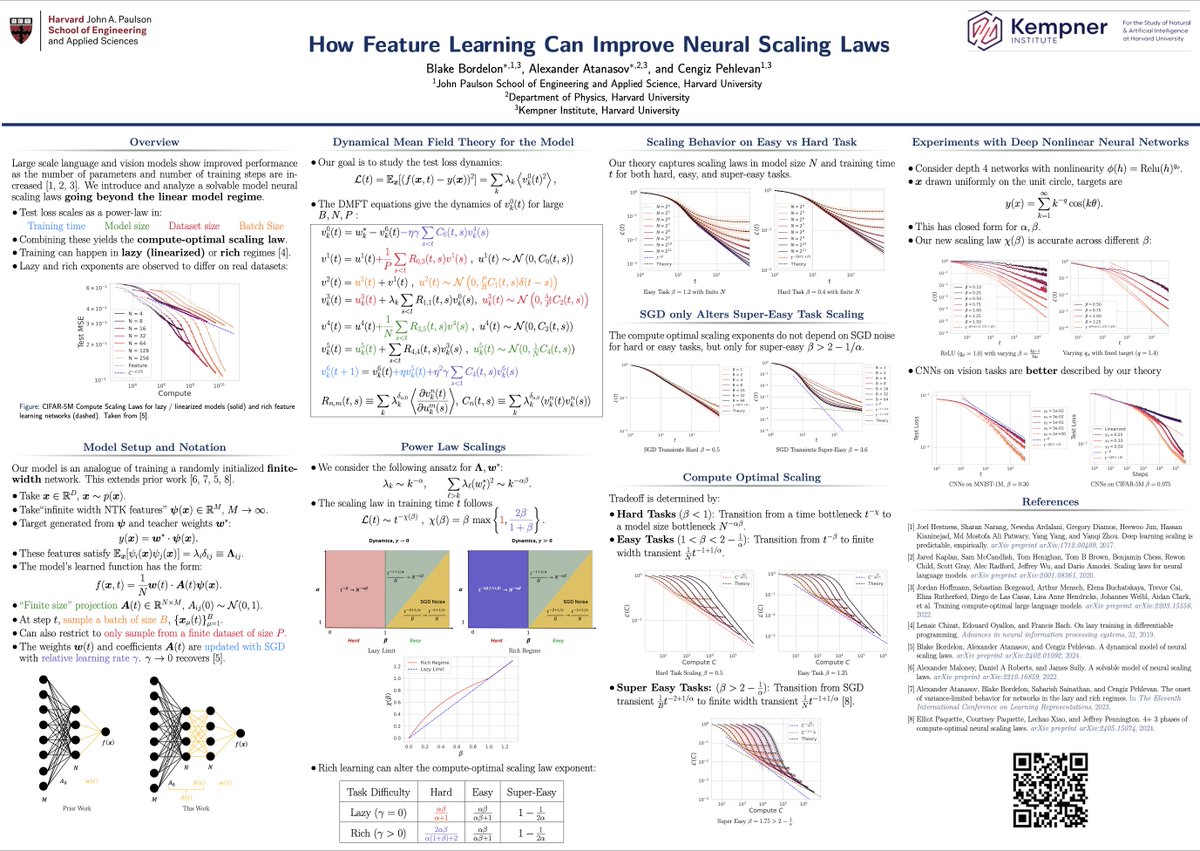

1/n I’m very excited to present this Spotlight. It was one of the more creative projects of my PhD, and also the last one with Blake Bordelon ☕️🧪👨‍💻 & Cengiz Pehlevan, the best coauthors you can have :) Come by this afternoon to learn "How Feature Learning Can Improve Neural Scaling Laws."

Not implausible... xkcd.com/3085/ I'm looking for Sabine Hossenfelder to weigh in.

Alex Nichol: it represents the forward and backward pass.

Elegant theoretical derivations are exclusive to physics. Right?? Wrong! In a new preprint, we: ✅"Derive" a spiking recurrent network from variational principles ✅Show it does amazing things like out-of-distribution generalization 👉[1/n]🧵 w/ co-lead Dekel Galor & Jake Yates

![Hadi Vafaii (@hadivafaii) on Twitter photo](https://pbs.twimg.com/media/GrSe1nUWYAAd6G4.jpg)

1/3 Geoffrey Hinton once said that the future depends on some graduate student being suspicious of everything he says (via Lex Fridman). He also said that it was impossible to find biologically plausible approaches to backprop that scale well: radical.vc/geoffrey-hinto….

You know all those arguments that LLMs think like humans? Turns out it's not true. 🧠 In our paper "From Tokens to Thoughts: How LLMs and Humans Trade Compression for Meaning," we test this by checking whether LLMs form concepts the same way humans do. (Yann LeCun, Chen Shani, Dan Jurafsky)

How does in-context learning emerge in attention models during gradient descent training? Sharing our new ICML Spotlight paper, "Training Dynamics of In-Context Learning in Linear Attention" (arxiv.org/abs/2501.16265), led by Yedi Zhang with Aaditya Singh and Peter Latham.

This is the syllabus of the course Geoffrey Hinton and I taught in 1998 at the Gatsby Unit (just after it was founded). Notice anything?

You're all invited to read about autodidacticism, about a tireless curiosity to understand the human brain, about the responses from the scientific community (including from Prof. Illana Gozes and Prof. Boaz Barak), and above all about one man's desire to put his own mind to work, just before he no longer could. Link to the article in Haaretz: haaretz.co.il/magazine/2025-…