templar

@tplr_ai

incenτivised inτerneτ-wide τraining

ID: 1896776987806740481

http://www.tplr.ai 04-03-2025 04:17:46

150 Tweets

1.1K Followers

7 Following

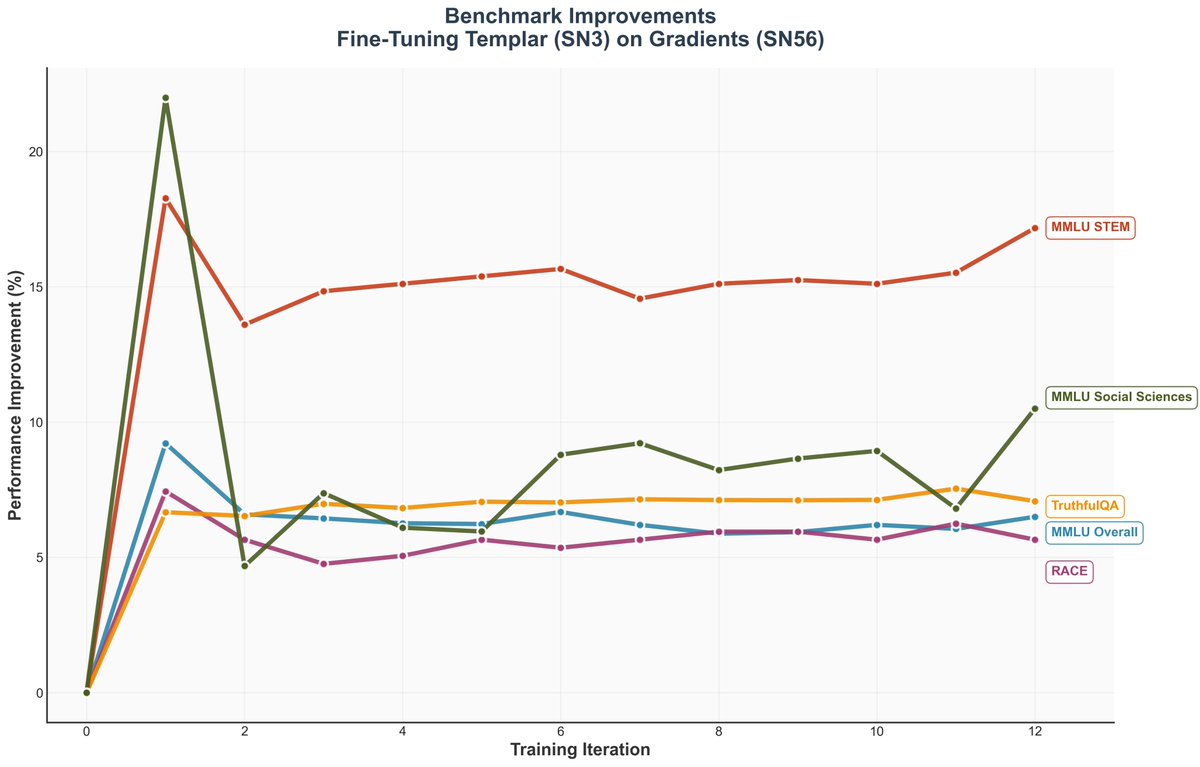

BτComander · templar: Scaling an AI model from 1.2 billion to 8 billion parameters is like expanding a football team from a small local squad to a world-class club. A bigger team can cover more ground, execute complex plays, and adapt better, just as more parameters help an AI tackle tougher tasks and…

Just released a detailed deep dive on decentralized training. We cover a lot in there, but here's a quick brain dump while my thoughts are fresh: so much has happened in the past 3 months, and it's hard not to get excited. Nous Research pre-trained a 15B model in a distributed fashion…

Teng Yan · 30 days of COT · Nous Research · Chain of Thought: 🌈 30 Days of AI x Crypto

templar: Teng, the only one live on mainnet is sn3 templar; all others you mention are on testnets. Great coverage tho!