Tim Lau

@timlautk

Postdoc @Penn @PennMedicine @Wharton; Past Postdoc @ChicagoBooth; PhD @NorthwesternU Statistics & Data Science

ID: 2276510935

http://timlautk.github.io 04-01-2014 18:50:47

363 Tweets

476 Followers

1.1K Following

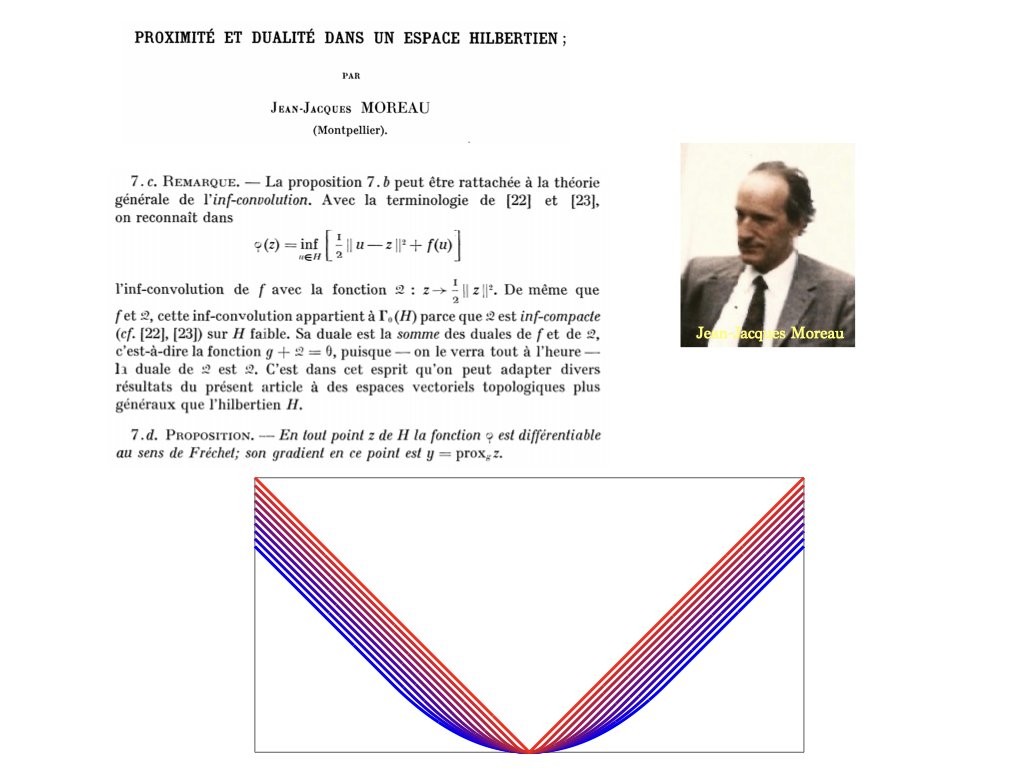

My latest work on optimization for deep learning is out! Credits also to Prime Intellect for supporting the compute for this work.