Ananda Theertha Suresh

@th33rtha

Researcher in machine learning and information theory.


http://theertha.info

Joined 03-03-2015 08:50:01

120 Tweets

900 Followers

136 Following

Very interesting paper by Ananda Theertha Suresh et al. For categorical and Gaussian distributions, they show that after k rounds of recursive training a sample is forgotten at a rate of 1/k (hence **model collapse** happens more slowly than one might intuitively expect).

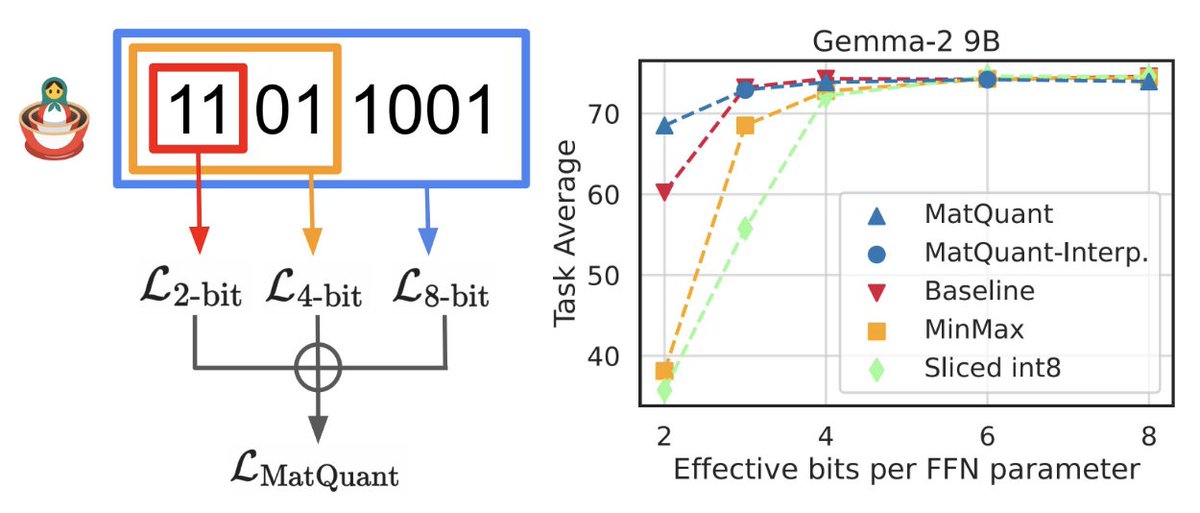

Announcing Matryoshka Quantization! A single Transformer can now be served at any integer precision!! In addition, our (sliced) int2 models outperform the baseline by 10%. Work co-led w/ Puranjay Datta, in collab w/ Jeff Dean, Prateek Jain & Aditya Kusupati. 1/7
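One way to picture "sliced" lower-precision models is nested bit slicing: an int8 weight code is reused at int4 or int2 by keeping only its most significant bits. The sketch below assumes unsigned 8-bit codes with an asymmetric scale/zero-point scheme and plain right-shift truncation. The helpers `slice_msbs` and `dequantize` are hypothetical illustrations, not the authors' implementation, and the published method may round rather than truncate.

```python
from typing import List


def slice_msbs(codes: List[int], bits: int) -> List[int]:
    """Hypothetical helper: keep the `bits` most significant bits of uint8 codes."""
    if not 1 <= bits <= 8:
        raise ValueError("bits must be between 1 and 8")
    if any(not 0 <= c <= 255 for c in codes):
        raise ValueError("codes must be unsigned 8-bit values (0..255)")
    shift = 8 - bits
    # Right-shifting drops the low-order bits, so each result lies in [0, 2**bits - 1].
    # Assumption: truncation; the paper's slicing may use rounding instead.
    return [c >> shift for c in codes]


def dequantize(codes: List[int], bits: int, scale: float, zero_point: int) -> List[float]:
    """Hypothetical helper: map sliced codes back to real values on the shared int8 grid."""
    shift = 8 - bits
    # Scale each sliced code back up to the int8 range, then apply the
    # assumed asymmetric mapping w = scale * (q - zero_point).
    return [scale * ((c << shift) - zero_point) for c in codes]


if __name__ == "__main__":
    q8 = [0, 37, 128, 255]
    print(slice_msbs(q8, 4))  # int4 view of the same weights
    print(slice_msbs(q8, 2))  # int2 view of the same weights
```

Because every lower precision is read out of the same stored int8 codes, a single set of weights can in principle be served at several bit widths. This is the property the announcement describes, though the real method also trains the weights so that the sliced versions stay accurate.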

And the next EnCORE Institute workshop will be on **Theoretical Perspectives on LLMs** sites.google.com/ucsd.edu/encor… We have a great lineup of participants and an incredible set of talks. The registration link will be active soon.

Excited about this work with Asher Trockman, Yash Savani (and others) on antidistillation sampling. It uses a nifty trick to efficiently generate samples that make student models _worse_ when they are trained on those samples. I spoke about it at Simons this past week. Links below.

Congratulations Ashwinee Panda Xiangyu Qi Ahmad Beirami et al!

If you are at #AISTATS2025 and are interested in concept erasure, talk to Somnath Basu Roy Chowdhury at Poster Session 1 on Saturday May 3.