Sebastian Sylwan

@sylwan

Father of twin girls, passionate about Computer Graphics, Curious, Strong opinions, weakly held.

ID: 16173936

07-09-2008 21:22:29

803 Tweets

349 Followers

579 Following

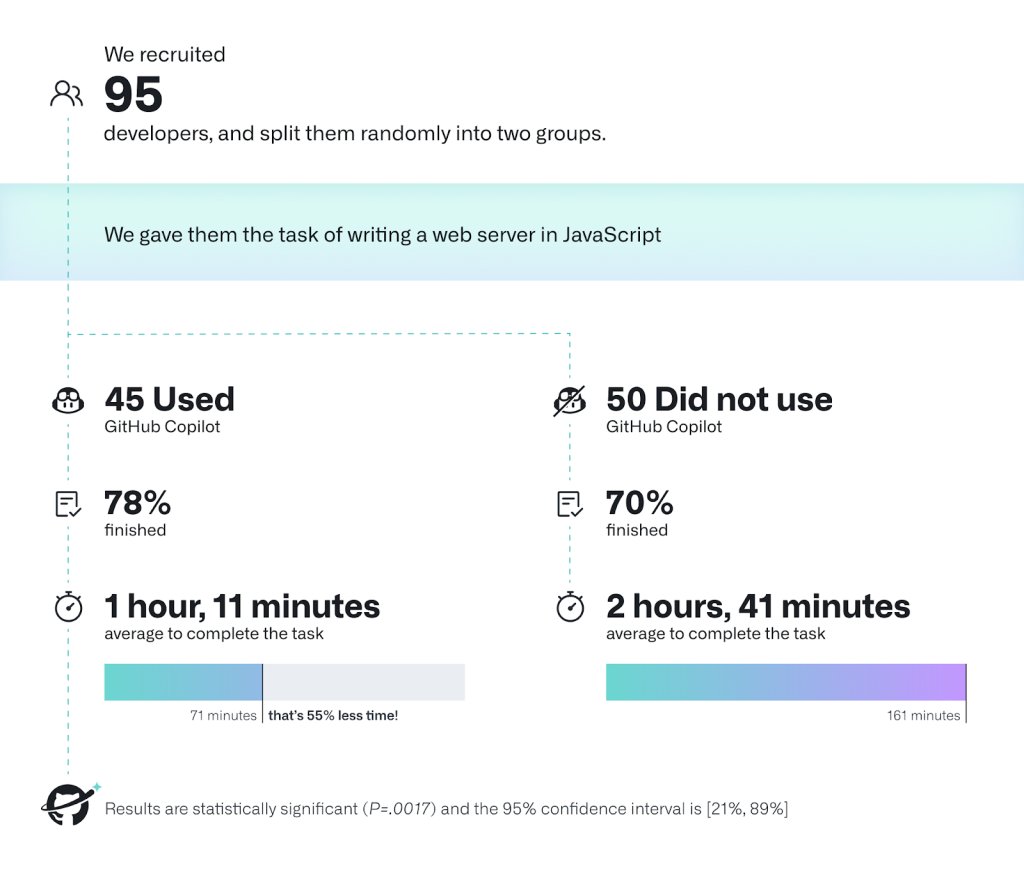

I hit a bug in the Attention formula that’s been overlooked for 8+ years. All Transformer models (GPT, LLaMA, etc) are affected. Researchers isolated the bug last month – but they missed a simple solution… Why LLM designers should stop using Softmax 👇 evanmiller.org/attention-is-o…

Can we just make Yann LeCun president of AI and call it a day please?

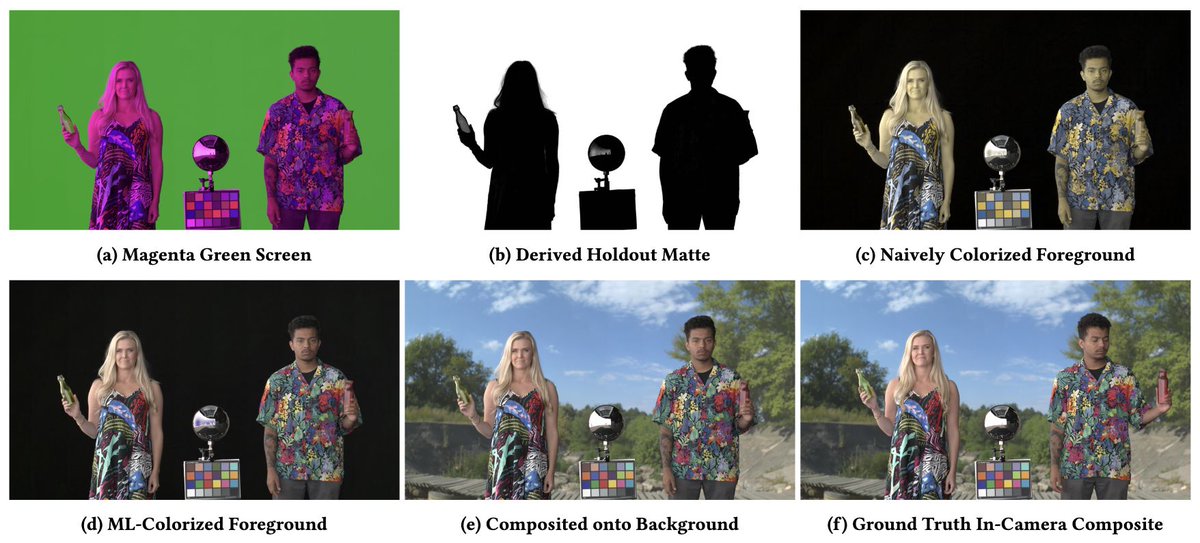

Introducing, the Paul Debevec long read, at befores & afters magazine. I'm sure many people know Paul Debevec's incredible influence on VFX. At SIGGRAPH Asia ➡️ Hong Kong I got the chance to sit down with him to discuss a discrete portion of his work at Google & at Netflix. beforesandafters.com/2024/04/04/the…