Sudarshan Babu

@sudarshanb263

AI fellow at @CZbiohub, previously CS PhD @TTIC_connect, postdoc @Uchicago. Deep learning for drug discovery tools. Former Nvidia, Amazon intern.

ID: 1661507022527799297

https://people.cs.uchicago.edu/~sudarshan/ 24-05-2023 22:58:57

59 Tweets

44 Followers

220 Following

In new work led by Gokul Kannan with Peter Kim, we show that protein language models learn allosteric interactions without any explicit supervision, evidence that evaluating protein LMs solely on their ability to learn 3D structure may lose important functional information.

“Hey Aravind Srinivas Perplexity, would you guys consider presenting results in a Reddit-style format, where each ‘comment’ comes from an LLM predisposed to different ideological perspectives (liberal, conservative, etc.)? This would better mimic real discourse and help users

I always found it puzzling how language models learn so much from next-token prediction, while video models learn so little from next-frame prediction. Maybe it's because LLMs are actually brain scanners in disguise. Idle musings in my new blog post: sergeylevine.substack.com/p/language-mod…

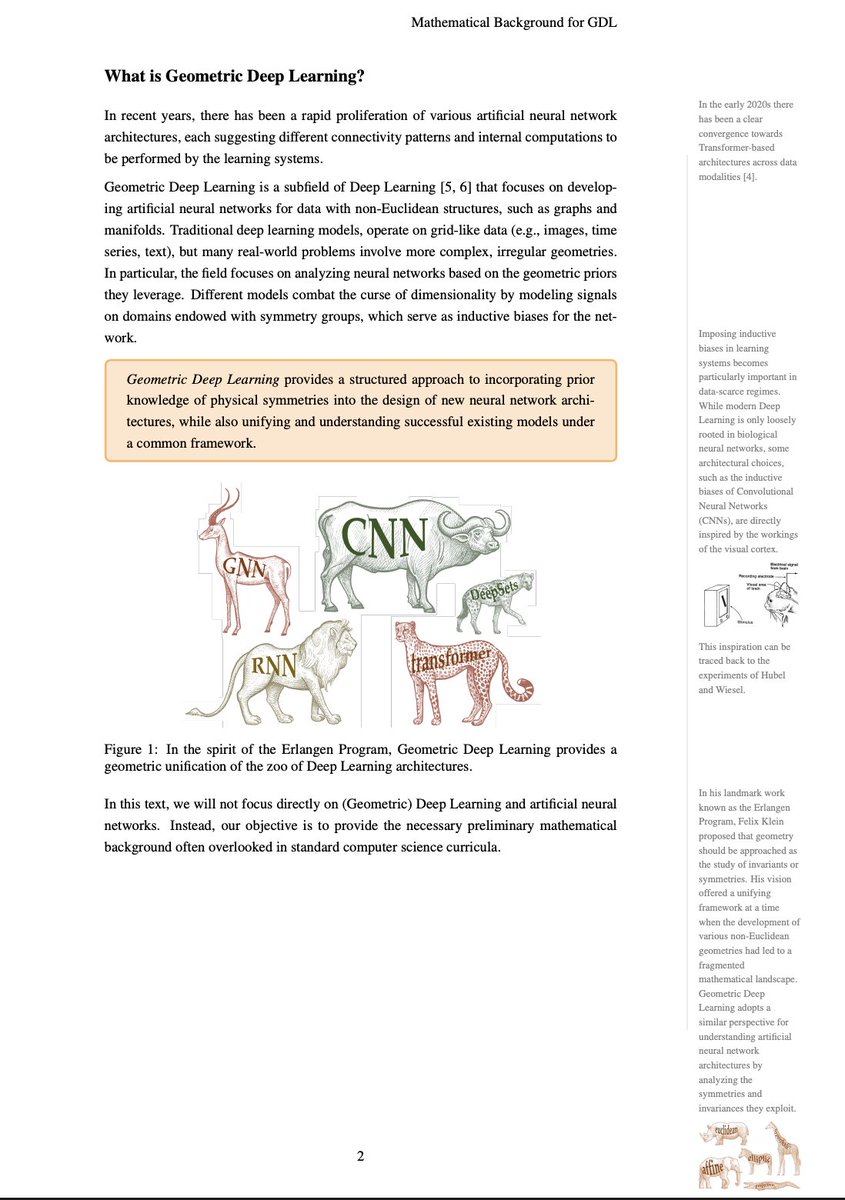

🚨 "Mathematical Foundations of Geometric Deep Learning", co-authored with Michael Bronstein 📚 Read the paper here: arxiv.org/abs/2508.02723 🧠 We review the mathematical background necessary for studying Geometric Deep Learning. #GDL #mathematics #deeplearning #AI