Matthew Johnson

@singularmattrix

Researcher at Google Brain. I work on JAX (github.com/google/jax).

ID: 167628717

Website: https://people.csail.mit.edu/mattjj/

Joined: 17-07-2010 02:33:20

2,2K Tweets

12,12K Followers

3,3K Following

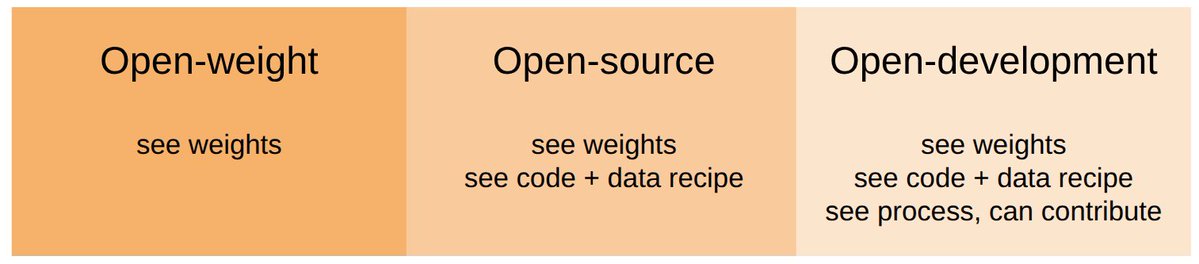

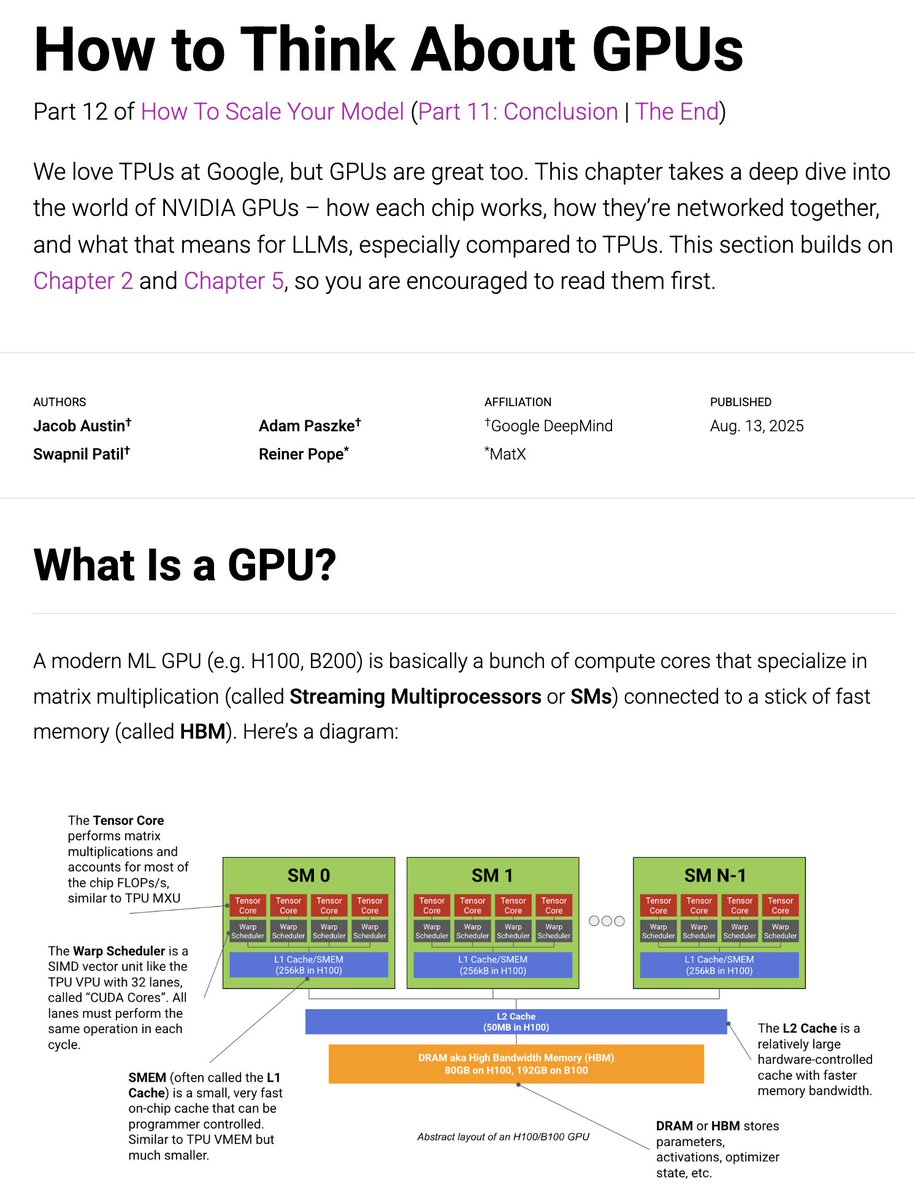

Training our most capable Gemini models relies heavily on our JAX software stack + Google's TPU hardware platforms. If you want to learn more, see this awesome book, "How to Scale Your Model": jax-ml.github.io/scaling-book/. It was put together by my Google DeepMind colleagues

For a rare look into how LLMs are really built, check out David Hall's retrospective on how we trained the Marin 8B model from scratch (and outperformed Llama 3.1 8B base). It’s an honest account with all the revelations and mistakes we made along our journey. Papers are forced to

Replying to Jeremy Howard: Luckily we have alternatives :) github.com/jax-ml/jax/blo… Just 100 lines without leaving Python and SOTA performance