Sharut Gupta

@sharut_gupta

PhD@MIT_CSAIL | Previously @GoogleDeepMind, @AIatMeta | Representation Learning, In-Context Learning, ML Robustness, Generalization | IIT Delhi’22

ID: 1297440922032955398

https://www.mit.edu/~sharut/

Joined 23-08-2020 07:50:03

184 Tweets

1.1K Followers

852 Following

I'll be at NeurIPS next week, presenting:
- ContextSSL in Session 2 (#2301) (also an oral at the SSL workshop)
- DisentangledSSL at the UniReps workshop (Honorable Mention & Oral!)
- DRAKES at the MLSB & AIDrugX workshops

Please reach out if you'd like to chat!

Grateful to MIT CSAIL Alliances for having me on their podcast! It was a joy sharing how our recent work trains machines to self-adapt to new tasks and scenarios!
Paper: lnkd.in/g2g-RcDb
Podcast: bit.ly/40IVZ7v

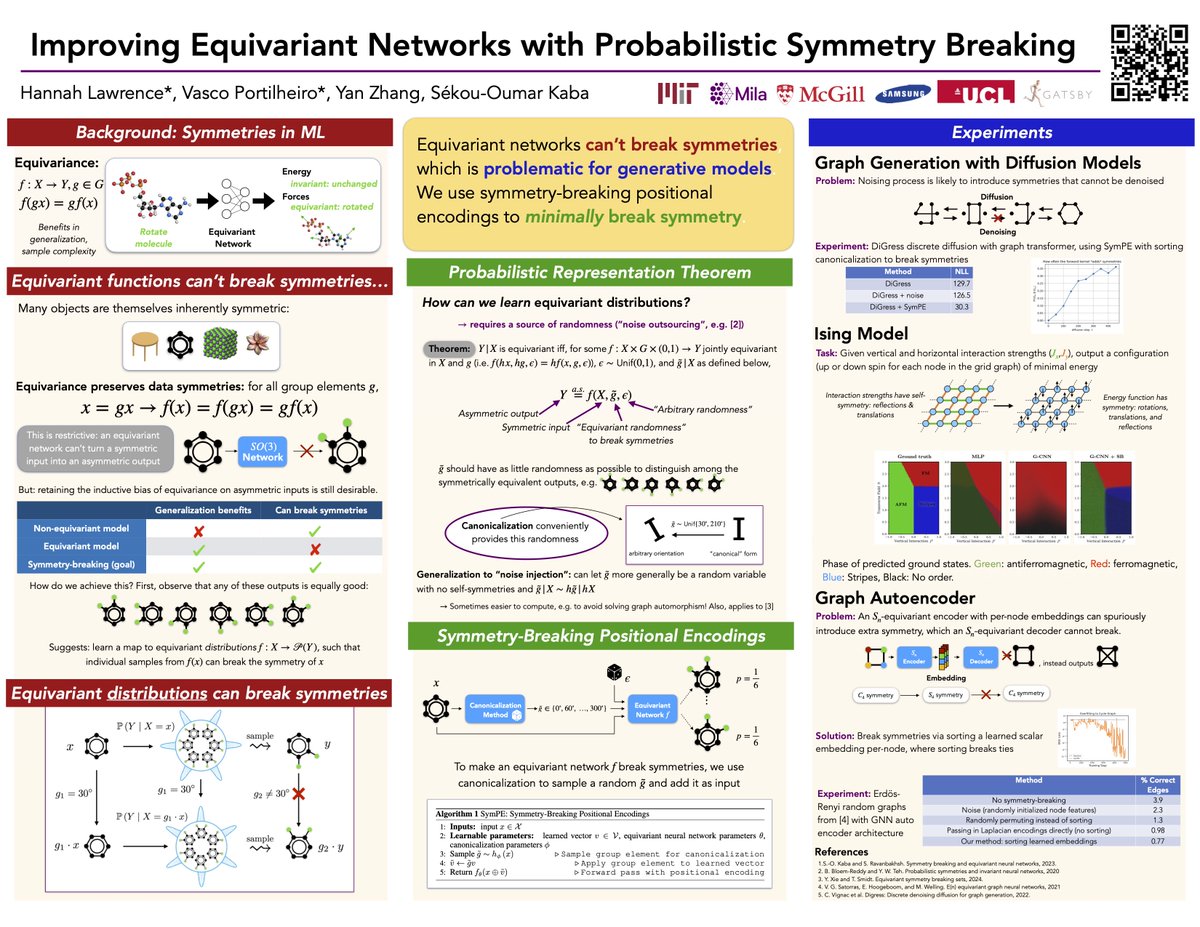

Equivariant functions (e.g., GNNs) can't break symmetries, which can be a problem for generative models and beyond. Come to poster #207 on Saturday at 10AM to hear about our solution: SymPE, or symmetry-breaking positional encodings! w/ Vasco Portilheiro, Yan Zhang, and Oumar Kaba

"Compositional Risk Minimization": tackling unseen attribute combinations with additive energy models, at #ICML2025. 💡 Check out the excellent summary by Divyat Samadhiya

🚨 Past work shows that dropping just 0.1% of the data can change the conclusions of important studies. We show that many approximations can fail to catch this. 📢 Check out our new TMLR paper (w/ David Burt, Yunyi Shen/申云逸 🐺, Tin Nguyen, and Tamara Broderick) 👇 openreview.net/forum?id=m6EQ6…